Vertex AI pricing

Prices are listed in US Dollars (USD). If you pay in a currency other than USD, the prices listed in your currency on Cloud Platform SKUs apply.

Vertex AI pricing compared to legacy product pricing

The costs for Vertex AI remain the same as they are for the legacy AI Platform and AutoML products that Vertex AI supersedes, with the following exceptions:

- Legacy AI Platform Prediction and AutoML Tables predictions supported lower-cost, lower-performance machine types that aren't supported for Vertex AI Inference and AutoML tabular.

- Legacy AI Platform Prediction supported scale-to-zero, which isn't supported for Vertex AI Inference.

Vertex AI also offers more ways to optimize costs, such as the following:

- Optimized TensorFlow runtime.

- Support for co-hosting models.

- No minimum usage duration for Training and Prediction. Instead, usage is charged in 30 second increments.

Pricing for Generative AI on Vertex AI

For Generative AI on Vertex AI pricing information, see Pricing for Generative AI on Vertex AI.

Pricing for AutoML models

For Vertex AI AutoML models, you pay for three main activities:

- Training the model

- Deploying the model to an endpoint

- Using the model to make predictions

Vertex AI uses predefined machine configurations for Vertex AutoML models, and the hourly rate for these activities reflects the resource usage.

The time required to train your model depends on the size and complexity of your training data. Models must be deployed before they can provide online predictions or online explanations.

You pay for each model deployed to an endpoint, even if no prediction is made. You must undeploy your model to stop incurring further charges. Models that are not deployed or have failed to deploy are not charged.

You pay only for compute hours used; if training fails for any reason other than a user-initiated cancellation, you are not billed for the time. You are charged for training time if you cancel the operation.

Select a model type below for pricing information.

Image data

Image data

Operation | Price (classification) (USD) | Price (object detection) (USD) |

|---|---|---|

Training | $3.465 / 1 hour | $3.465 / 1 hour |

Training (Edge on-device model) | $18.00 / 1 hour | $18.00 / 1 hour |

Deployment and online prediction | $1.375 / 1 hour | $2.002 / 1 hour |

Batch prediction | $2.222 / 1 hour | $2.222 / 1 hour |

Tabular data

Tabular data

Operation | Price per node hour for classification/regression | Price for forecasting |

|---|---|---|

Training | $21.252 / 1 hour | Refer to Vertex AI Forecast |

Inference | Same price as inference for custom-trained models. Vertex AI performs batch inference using 40 n1-highmem-8 machines. | Refer to Vertex AI Forecast |

Inference charges for Vertex Explainable AI

Compute associated with Vertex Explainable AI is charged at same rate as inference. However, explanations take longer to process than normal inferences, so heavy usage of Vertex Explainable AI along with auto-scaling could result in more nodes being started, which would increase inference charges.

Vertex AI Forecast

AutoML

AutoML

Stage | Pricing |

|---|---|

Prediction | 0 count to 1,000,000 count $0.20 / 1,000 count, per 1 month / account 1,000,000 count to 50,000,000 count $0.10 / 1,000 count, per 1 month / account 50,000,000 count and above $0.02 / 1,000 count, per 1 month / account |

Training | $21.252 / 1 hour |

Explainable AI | Explainability using Shapley values. Refer to Vertex AI Inference and Explanation pricing page. |

* A prediction data point is one time point in the forecast horizon. For example, with daily granularity a 7-day horizon is 7 points per each time series.

- Up to 5 prediction quantiles can be included at no additional cost.

- The number of data points consumed per tier is refreshed monthly.

ARIMA+

ARIMA+

Stage | Pricing |

|---|---|

Prediction | $5.00 / 1,000 count |

Training | $250.00 per TB x Number of Candidate Models x Number of Backtesting Windows* |

Explainable AI | Explainability with time series decomposition does not add any additional cost. Explainability using Shapley values is not supported. |

Refer to the BigQuery ML pricing page for additional details. Each training and prediction job incurs the cost of 1 managed pipeline run, as described in Vertex AI pricing.

* A backtesting window is created for each period in the test set. The AUTO_ARIMA_MAX_ORDER used determines the number of candidate models. It ranges from 6-42 for models with multiple time series.

Custom-trained models

Training

Training

The tables below provide the approximate price per hour of various training configurations. You can choose a custom configuration of selected machine types. To calculate pricing, sum the costs of the virtual machines you use.

If you use Compute Engine machine types and attach accelerators, the cost of the accelerators is separate. To calculate this cost, multiply the prices in the table of accelerators below by how many machine hours of each type of accelerator you use.

Machine types

Machine types

You can use Spot VMs with Vertex AI custom training. Spot VMs are billed according to Compute Engine Spot VMs pricing. There are Vertex AI custom training management fees in addition to your infrastructure usage, captured in the following tables.

You can use Compute Engine reservations with Vertex AI custom training. When using Compute Engine reservations, you're billed according to Compute Engine Pricing, including any applicable committed use discounts (CUDs). There are Vertex AI custom training management fees in addition to your infrastructure usage, captured in the following tables.

- Johannesburg (africa-south1)

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Delhi (asia-south2)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Melbourne (australia-southeast2)

- Warsaw (europe-central2)

- Finland (europe-north1)

- Madrid (europe-southwest1)

- Belgium (europe-west1)

- Turin (europe-west12)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Milan (europe-west8)

- Paris (europe-west9)

- Doha (me-central1)

- Dammam (me-central2)

- Tel Aviv (me-west1)

- Montreal (northamerica-northeast1)

- Toronto (northamerica-northeast2)

- Sao Paulo (southamerica-east1)

- Santiago (southamerica-west1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Alabama (us-east7)

- Dallas (us-south1)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

- Phoenix (us-west8)

Machine type | Price (USD) |

|---|---|

g4-standard-48 | $5.1750851 / 1 hour |

g4-standard-96 | $10.3501702 / 1 hour |

g4-standard-192 | $20.7003404 / 1 hour |

g4-standard-384 | $41.4006808 / 1 hour |

n1-standard-4 | $0.21849885 / 1 hour |

n1-standard-8 | $0.4369977 / 1 hour |

n1-standard-16 | $0.8739954 / 1 hour |

n1-standard-32 | $1.7479908 / 1 hour |

n1-standard-64 | $3.4959816 / 1 hour |

n1-standard-96 | $5.2439724 / 1 hour |

n1-highmem-2 | $0.13604845 / 1 hour |

n1-highmem-4 | $0.2720969 / 1 hour |

n1-highmem-8 | $0.5441938 / 1 hour |

n1-highmem-16 | $1.0883876 / 1 hour |

n1-highmem-32 | $2.1767752 / 1 hour |

n1-highmem-64 | $4.3535504 / 1 hour |

n1-highmem-96 | $6.5303256 / 1 hour |

n1-highcpu-16 | $0.65180712 / 1 hour |

n1-highcpu-32 | $1.30361424 / 1 hour |

n1-highcpu-64 | $2.60722848 / 1 hour |

n1-highcpu-96 | $3.91084272 / 1 hour |

a2-highgpu-1g* | $4.425248914 / 1 hour |

a2-highgpu-2g* | $8.850497829 / 1 hour |

a2-highgpu-4g* | $17.700995658 / 1 hour |

a2-highgpu-8g* | $35.401991315 / 1 hour |

a2-megagpu-16g* | $65.707278915 / 1 hour |

a3-highgpu-8g* | $101.007352 / 1 hour |

a3-megagpu-8g* | $106.0464232 / 1 hour |

a3-ultragpu-8g* | $99.7739296 / 1 hour |

a4-highgpu-8g* | $148.212 / 1 hour |

e2-standard-4 | $0.154126276 / 1 hour |

e2-standard-8 | $0.308252552 / 1 hour |

e2-standard-16 | $0.616505104 / 1 hour |

e2-standard-32 | $1.233010208 / 1 hour |

e2-highmem-2 | $0.103959618 / 1 hour |

e2-highmem-4 | $0.207919236 / 1 hour |

e2-highmem-8 | $0.415838472 / 1 hour |

e2-highmem-16 | $0.831676944 / 1 hour |

e2-highcpu-16 | $0.455126224 / 1 hour |

e2-highcpu-32 | $0.910252448 / 1 hour |

n2-standard-4 | $0.2233714 / 1 hour |

n2-standard-8 | $0.4467428 / 1 hour |

n2-standard-16 | $0.8934856 / 1 hour |

n2-standard-32 | $1.7869712 / 1 hour |

n2-standard-48 | $2.6804568 / 1 hour |

n2-standard-64 | $3.5739424 / 1 hour |

n2-standard-80 | $4.467428 / 1 hour |

n2-highmem-2 | $0.1506661 / 1 hour |

n2-highmem-4 | $0.3013322 / 1 hour |

cloud-tpu | Pricing is determined by the accelerator type. See 'Accelerators'. |

n2-highmem-8 | $0.6026644 / 1 hour |

n2-highmem-16 | $1.2053288 / 1 hour |

n2-highmem-32 | $2.4106576 / 1 hour |

n2-highmem-48 | $3.6159864 / 1 hour |

n2-highmem-64 | $4.8213152 / 1 hour |

n2-highmem-80 | $6.026644 / 1 hour |

n2-highcpu-16 | $0.6596032 / 1 hour |

n2-highcpu-32 | $1.3192064 / 1 hour |

n2-highcpu-48 | $1.9788096 / 1 hour |

n2-highcpu-64 | $2.6384128 / 1 hour |

n2-highcpu-80 | $3.298016 / 1 hour |

c2-standard-4 | $0.2401292 / 1 hour |

c2-standard-8 | $0.4802584 / 1 hour |

c2-standard-16 | $0.9605168 / 1 hour |

c2-standard-30 | $1.800969 / 1 hour |

c2-standard-60 | $3.601938 / 1 hour |

m1-ultramem-40 | $7.237065 / 1 hour |

m1-ultramem-80 | $14.47413 / 1 hour |

m1-ultramem-160 | $28.94826 / 1 hour |

m1-megamem-96 | $12.249984 / 1 hour |

*This amount includes GPU price, since this instance type always requires a fixed number of GPU accelerators.

If you pay in a currency other than USD, the prices listed in your currency on Cloud Platform SKUs apply.

Accelerators

Accelerators

- Johannesburg (africa-south1)

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Delhi (asia-south2)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Melbourne (australia-southeast2)

- Warsaw (europe-central2)

- Finland (europe-north1)

- Madrid (europe-southwest1)

- Belgium (europe-west1)

- Berlin (europe-west10)

- Turin (europe-west12)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Milan (europe-west8)

- Paris (europe-west9)

- Doha (me-central1)

- Dammam (me-central2)

- Tel Aviv (me-west1)

- Montreal (northamerica-northeast1)

- Toronto (northamerica-northeast2)

- Sao Paulo (southamerica-east1)

- Santiago (southamerica-west1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Dallas (us-south1)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

- Phoenix (us-west8)

Machine type | Price (USD) | Vertex Management Fee |

|---|---|---|

NVIDIA_TESLA_A100 | $2.933908 / 1 hour | $0.4400862 / 1 hour |

NVIDIA_TESLA_A100_80GB | $3.92808 / 1 hour | $0.589212 / 1 hour |

NVIDIA_H100_80GB | $9.79655057 / 1 hour | $1.4694826 / 1 hour |

NVIDIA_H200_141GB | $10.708501 / 1 hour | Unavailable |

NVIDIA_H100_MEGA_80GB | $11.8959171 / 1 hour | Unavailable |

NVIDIA_TESLA_L4 | $0.644046276 / 1 hour | Unavailable |

NVIDIA_TESLA_P4 | $0.69 / 1 hour | Unavailable |

NVIDIA_TESLA_P100 | $1.679 / 1 hour | Unavailable |

NVIDIA_TESLA_T4 | $0.4025 / 1 hour | Unavailable |

NVIDIA_TESLA_V100 | $2.852 / 1 hour | Unavailable |

TPU_V2 Single (8 cores) | $5.175 / 1 hour | Unavailable |

TPU_V2 Pod (32 cores)* | $27.60 / 1 hour | Unavailable |

TPU_V3 Single (8 cores) | $9.20 / 1 hour | Unavailable |

TPU_V3 Pod (32 cores)* | $36.80 / 1 hour | Unavailable |

tpu7x-standard-4t (1 chip) | $13.80 / 1 hour | Unavailable |

If you pay in a currency other than USD, the prices listed in your currency on Cloud Platform SKUs apply.

* The price for training using a Cloud TPU Pod is based on the number of cores in the Pod. The number of cores in a pod is always a multiple of 32. To determine the price of training on a Pod that has more than 32 cores, take the price for a 32-core Pod, and multiply it by the number of cores, divided by 32. For example, for a 128-core Pod, the price is (32-core Pod price) * (128/32). For information about which Cloud TPU Pods are available for a specific region, see System Architecture in the Cloud TPU documentation.

Disks

Disks

- Johannesburg (africa-south1)

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Delhi (asia-south2)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Melbourne (australia-southeast2)

- Warsaw (europe-central2)

- Finland (europe-north1)

- Madrid (europe-southwest1)

- Belgium (europe-west1)

- Turin (europe-west12)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Milan (europe-west8)

- Paris (europe-west9)

- Doha (me-central1)

- Dammam (me-central2)

- Montreal (northamerica-northeast1)

- Toronto (northamerica-northeast2)

- Sao Paulo (southamerica-east1)

- Santiago (southamerica-west1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Dallas (us-south1)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

Machine type | Price (USD) |

|---|---|

pd-standard | $0.000063014 / 1 gibibyte hour |

pd-ssd | $0.000267808 / 1 gibibyte hour |

If you pay in a currency other than USD, the prices listed in your currency on Cloud Platform SKUs apply.

- All use is subject to the Vertex AI quota policy.

- You are required to store your data and program files in Google Cloud Storage buckets during the Vertex AI lifecycle. See more about Cloud Storage usage.

You are charged for training your models from the moment when resources are provisioned for a job until the job finishes.

Warning: Your training jobs are limited by the Vertex AI quota policy. If you choose a very powerful processing cluster for your first training jobs, it's likely you will exceed your quota.

Scale tiers for predefined configurations (AI Platform Training)

You can control the type of processing cluster to use when training your model. The simplest way is to choose from one of the predefined configurations called scale tiers. Read more about scale tiers.

Machine types for custom configurations

If you use Vertex AI or select CUSTOM as your scale tier for AI Platform Training, you have control over the number and type of virtual machines to use for the cluster's master, worker and parameter servers. Read more about machine types for Vertex AI and machine types for AI Platform Training.

The cost of training with a custom processing cluster is the sum of all the machines you specify. You are charged for the total time of the job, not for the active processing time of individual machines.

Gen AI Evaluation Service

For model-based metrics, charges are applied only for the prediction costs associated with the underlying autorater model. They are billed based on the input tokens that you provide in your evaluation dataset and the autorater output.

Gen AI Evaluation Service is generally available (GA). Pricing change took effect on April 14, 2025.

Metric | Pricing |

|---|---|

Pointwise | Default autorater model Gemini 2.0 Flash |

Pairwise | Default autorater model Gemini 2.0 Flash |

Computation-based metrics are charged at $0.00003 per 1k characters for input and $0.00009 per 1k characters for output. They are referred to as Automatic Metric in SKU.

Metric Name | Type |

|---|---|

Exact Match | Computation-based |

Bleu | Computation-based |

Rouge | Computation-based |

Tool Call Valid | Computation-based |

Tool Name Match | Computation-based |

Tool Parameter Key Match | Computation-based |

Tool Parameter KV Match | Computation-based |

Prices are listed in US Dollars (USD). If you pay in a currency other than USD, the prices listed in your currency on Cloud Platform SKUs apply.

Legacy model-based metrics are charged at $0.005 per 1k characters for input and $0.015 per 1k characters for output.

Metric Name | Type |

|---|---|

Coherence | Pointwise |

Fluency | Pointwise |

Fulfillment | Pointwise |

Safety | Pointwise |

Groundedness | Pointwise |

Summarization Quality | Pointwise |

Summarization Helpfulness | Pointwise |

Summarization Verbosity | Pointwise |

Question Answering Quality | Pointwise |

Question Answering Relevance | Pointwise |

Question Answering Helpfulness | Pointwise |

Question Answering Correctness | Pointwise |

Pairwise Summarization Quality | Pairwise |

Pairwise Question Answering Quality | Pairwise |

Prices are listed in US Dollars (USD). If you pay in a currency other than USD, the prices listed in your currency on Cloud Platform SKUs apply.

Vertex AI Agent Engine

Vertex AI Agent Engine is a set of services for developers to scale agents in production. Services can be used together or a la carte. You only pay for what you use. Today, you pay for the Agent Engine runtime.

Starting January 28, 2026, billing will begin for Code Execution, Sessions, and Memory Bank.

Runtime

Pricing is based on compute (vCPU hours) and memory (GiB hours) resources used by agents that are deployed to the Agent Engine runtime. Billing is rounded to the nearest second of usage. Idle time for an agent is not billed.

Free Tier

To help you get started with the runtime, we offer a monthly free tier.

- vCPU - First 180,000 vCPU-seconds (50 hours) free per month

- RAM - First 360,000 GiB-seconds (100 hours) free per month

Usage based pricing

Once your monthly usage exceeds the free tier, billing begins per the rates below.

Resource | Price (USD) |

|---|---|

vCPU | 0 hour to 50 hour $0.00 (Free) / 3,600 second, per 1 month / project 50 hour and above $0.0864 / 3,600 second, per 1 month / project |

RAM | 0 gibibyte hour to 100 gibibyte hour $0.00 (Free) / 3,600 gibibyte second, per 1 month / project 100 gibibyte hour and above $0.009 / 3,600 gibibyte second, per 1 month / project |

Code Execution

Similar to the runtime, you pay for the compute and memory required to run a sandbox. Billing is rounded to the nearest second of usage. Idle time is not billed.

- Compute: $0.0864 per vCPU hour

- Memory: $0.0090 per GiB-hour

Sessions

You pay based on the number of events stored in the session service. We bill for stored session events that include content. This includes the initial user request, model responses, function calls, and function responses. We do not bill for system control events (such as checkpoints) that are stored in the session service.

- $0.25 per 1,000 events stored

Memory Bank

Pay based on the number of memories stored and returned.

- Memory Stored: $0.25 per 1,000 memories stored / month (+ LLM costs to generate memories, paid separately)

- Memory retrieval: $0.50 per 1,000 memories returned; the first 1000 memories returned per month are free

Pricing scenarios

Pricing scenarios

To help you understand the cost of using Agent Engine services, we offer two hypothetical agents: a Lightweight Agent and a Standard Agent. For both scenarios, we make the following assumptions:

- Free tiers: For these calculations, we assume that the runtime and memory bank free tiers have already been used within a month for prior experimentation.

- Runtime Requests per Session: A "full session" or conversation consists of 10 runtime requests.

- Sessions: Each runtime request generates an average of 3 session events.

- Memory Bank:

- Storage: At the end of each full session, 1 memory is extracted and stored.

- Retrieval: We assume an average of 1 memory is returned per runtime request.

- Code Execution is invoked for 30% of all runtime requests.

- Billing Month: All monthly calculations are based on a 30-day month.

Additional notes:

- Service modularity: While the below scenarios show the cost of Agent Engine services used together, you can choose to use services individually. For example, you can use the Agent Engine session and memory bank services without using the Agent Engine runtime.

- Additional model costs: Agents require LLMs to reason and plan. LLM tokens consumed by agents are billed separately and not included in the below scenarios.

- Additional tool costs: Agents require tools to take action. Tools used by agents (e.g. API calls, storage) are billed separately and not included in the below scenarios.

Hypothetical Scenarios

Hypothetical Scenarios

Scenario 1: Lightweight Internal Agent

This scenario represents agents handling low-volume, sporadic traffic.

- Use Case Examples: An IT helpdesk bot for a small company, a personal productivity agent that drafts emails, or a Slack bot that provides answers from documentation.

- Compute and memory required for runtime and code execution: 1 vCPU / 1 GiB RAM.

- Traffic: 0.16 queries per second (10 queries per minute), totaling 432,000 requests per month.

- Average Request Duration: 3 seconds

Service | Calculation | Monthly Cost |

|---|---|---|

Runtime | (432,000 requests × 3 sec/req ÷ 3600 sec/hr) = 360 hours vCPU: (360 hrs × 1 vCPU × $0.0864/hr) = $31.10 RAM: (360 hrs × 1 GiB × $0.0090/hr) = $3.24 | $34.34 |

Code Execution | (360 runtime hours × 30% usage) = 108 hrs vCPU: (108 hrs × 1 vCPU × $0.0864/hr) = $9.33 RAM: (108 hrs × 1 GiB × $0.0090/hr) = $0.97 | $10.30 |

Sessions | 432,000 requests x 3 events ÷ 1,000 × $0.25 | $324 |

Memory Bank | Stored: (432,000 reqs ÷ 10 reqs/session × 1 memory/session ÷ 1,000) × $0.25 = $10.80 Retrieval: (432,000 reqs × 1 returned memory ÷ 1,000) × $0.50 = $216.00 | $226.80 |

Total Estimated Monthly Cost | $595.44 |

Scenario 2: Standard Agent

This scenario represents a production agent integrated into a business application, handling consistent user traffic.

- Use Case Examples: A customer service agent on an e-commerce site, a lead qualification bot on a B2B website, or an internal data analysis agent for sales teams.

- Compute: 2 vCPU / 5 GiB RAM

- Traffic: 10 queries per second (600 queries per minute), totaling 25,920,000 requests per month.

- Average Request Duration: 5 seconds

Service | Calculation | Monthly Cost |

|---|---|---|

Runtime | (25,920,000 requests × 5 sec/req ÷ 3600 sec/hr) = 36,000 hours vCPU: (36,000 hrs × 2 vCPU × $0.0864/hr) = $6,220.80 RAM: (36,000 hrs × 5 GiB × $0.0090/hr) = $1,620.00 | $7,840.80 |

Code Execution | (36,000 runtime hours × 30% usage) = 10,800 hours vCPU: (10,800 hrs × 2 vCPU × $0.0864/hr) = $1,866.24 RAM: (10,800 hrs × 5 GiB × $0.0090/hr) = $486 | $2,352.24 |

Sessions | 25,920,000 requests * 3 events ÷ 1,000 × $0.25 | $19,440 |

Memory Bank | Stored: (25,920,000 reqs ÷ 10 reqs/session × 1 memory/session ÷ 1,000) × $0.25 = $648.00 Retrieval: (25,920,000 reqs × 1 returned memory ÷ 1,000) × $0.50 = $12,960.00 | $13,608 |

Total Estimated Monthly Cost | $43,241.04 |

Ray on Vertex AI

Training

Training

The tables below provide the approximate price per hour of various training configurations. You can choose a custom configuration of selected machine types. To calculate pricing, sum the costs of the virtual machines you use.

If you use Compute Engine machine types and attach accelerators, the cost of the accelerators is separate. To calculate this cost, multiply the prices in the table of accelerators below by how many machine hours of each type of accelerator you use.

Machine types

Machine types

- Johannesburg (africa-south1)

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Melbourne (australia-southeast2)

- Warsaw (europe-central2)

- Finland (europe-north1)

- Madrid (europe-southwest1)

- Belgium (europe-west1)

- Turin (europe-west12)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Milan (europe-west8)

- Paris (europe-west9)

- Doha (me-central1)

- Dammam (me-central2)

- Tel Aviv (me-west1)

- Montreal (northamerica-northeast1)

- Toronto (northamerica-northeast2)

- Sao Paulo (southamerica-east1)

- Santiago (southamerica-west1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Dallas (us-south1)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

- Phoenix (us-west8)

Machine type | Price (USD) |

|---|---|

n1-standard-4 | $0.2279988 / 1 hour |

n1-standard-8 | $0.4559976 / 1 hour |

n1-standard-16 | $0.9119952 / 1 hour |

n1-standard-32 | $1.8239904 / 1 hour |

n1-standard-64 | $3.6479808 / 1 hour |

n1-standard-96 | $5.4719712 / 1 hour |

n1-highmem-2 | $0.1419636 / 1 hour |

n1-highmem-4 | $0.2839272 / 1 hour |

n1-highmem-8 | $0.5678544 / 1 hour |

n1-highmem-16 | $1.1357088 / 1 hour |

n1-highmem-32 | $2.2714176 / 1 hour |

n1-highmem-64 | $4.5428352 / 1 hour |

n1-highmem-96 | $6.8142528 / 1 hour |

n1-highcpu-16 | $0.68014656 / 1 hour |

n1-highcpu-32 | $1.36029312 / 1 hour |

n1-highcpu-64 | $2.72058624 / 1 hour |

n1-highcpu-96 | $4.08087936 / 1 hour |

a2-highgpu-1g* | $4.408062 / 1 hour |

a2-highgpu-2g* | $8.816124 / 1 hour |

a2-highgpu-4g* | $17.632248 / 1 hour |

a2-highgpu-8g* | $35.264496 / 1 hour |

a2-highgpu-16g* | $70.528992 / 1 hour |

a3-highgpu-8g* | $105.39898088 / 1 hour |

a3-megagpu-8g* | $110.65714224 / 1 hour |

a4-highgpu-8g* | $148.212 / 1 hour |

e2-standard-4 | $0.16082748 / 1 hour |

e2-standard-4 | $0.32165496 / 1 hour |

e2-standard-16 | $0.64330992 / 1 hour |

e2-standard-32 | $1.28661984 / 1 hour |

e2-highmem-2 | $0.10847966 / 1 hour |

e2-highmem-4 | $0.21695932 / 1 hour |

e2-highmem-8 | $0.43391864 / 1 hour |

e2-highmem-16 | $0.86783728 / 1 hour |

e2-highcpu-16 | $0.4749144 / 1 hour |

e2-highcpu-32 | $0.9498288 / 1 hour |

n2-standard-4 | $0.2330832 / 1 hour |

n2-standard-8 | $0.4661664 / 1 hour |

n2-standard-16 | $0.9323328 / 1 hour |

n2-standard-32 | $1.8646656 / 1 hour |

n2-standard-48 | $2.7969984 / 1 hour |

n2-standard-64 | $3.7293312 / 1 hour |

n2-standard-80 | $4.661664 / 1 hour |

n2-highmem-2 | $0.1572168 / 1 hour |

n2-highmem-4 | $0.3144336 / 1 hour |

n2-highmem-8 | $0.6288672 / 1 hour |

n2-highmem-16 | $1.2577344 / 1 hour |

n2-highmem-32 | $2.5154688 / 1 hour |

n2-highmem-48 | $3.7732032 / 1 hour |

n2-highmem-64 | $5.0309376 / 1 hour |

n2-highmem-80 | $6.288672 / 1 hour |

n2-highcpu-16 | $0.6882816 / 1 hour |

n2-highcpu-32 | $1.3765632 / 1 hour |

n2-highcpu-48 | $2.0648448 / 1 hour |

n2-highcpu-64 | $2.7531264 / 1 hour |

n2-highcpu-80 | $3.441408 / 1 hour |

c2-standard-4 | $0.2505696 / 1 hour |

c2-standard-8 | $0.5011392 / 1 hour |

c2-standard-16 | $1.0022784 / 1 hour |

c2-standard-30 | $1.879272 / 1 hour |

c2-standard-60 | $3.758544 / 1 hour |

m1-ultramem-40 | $7.55172 / 1 hour |

m1-ultramem-80 | $15.10344 / 1 hour |

m1-ultramem-160 | $30.20688 / 1 hour |

m1-megamem-96 | $12.782592 / 1 hour |

cloud-tpu | Pricing is determined by the accelerator type. See 'Accelerators'. |

If you pay in a currency other than USD, the prices listed in your currency on Cloud Platform SKUs apply.

Accelerators

Accelerators

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Finland (europe-north1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Montreal (northamerica-northeast1)

- Sao Paulo (southamerica-east1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

GPU type | Price (USD) |

|---|---|

NVIDIA_TESLA_A100 | $3.5206896 / 1 hour |

NVIDIA_TESLA_A100_80GB | $4.517292 / 1 hour |

NVIDIA_H100_80GB | $11.75586073 / 1 hour |

NVIDIA_TESLA_P4 | $0.72 / 1 hour |

NVIDIA_TESLA_P100 | $1.752 / 1 hour |

NVIDIA_TESLA_T4 | $0.42 / 1 hour |

NVIDIA_TESLA_V100 | $2.976 / 1 hour |

TPU_V2 Single (8 cores) | $5.40 / 1 hour |

TPU_V2 Pod (32 cores)* | $28.80 / 1 hour |

TPU_V3 Single (8 cores) | $9.60 / 1 hour |

TPU_V3 Pod (32 cores)* | $38.40 / 1 hour |

If you pay in a currency other than USD, the prices listed in your currency on Cloud Platform SKUs apply.

* The price for training using a Cloud TPU Pod is based on the number of cores in the Pod. The number of cores in a pod is always a multiple of 32. To determine the price of training on a Pod that has more than 32 cores, take the price for a 32-core Pod, and multiply it by the number of cores, divided by 32. For example, for a 128-core Pod, the price is (32-core Pod price) * (128/32). For information about which Cloud TPU Pods are available for a specific region, see System Architecture in the Cloud TPU documentation.

Disks

Disks

- Johannesburg (africa-south1)

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Melbourne (australia-southeast2)

- Warsaw (europe-central2)

- Finland (europe-north1)

- Madrid (europe-southwest1)

- Belgium (europe-west1)

- Turin (europe-west12)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Milan (europe-west8)

- Paris (europe-west9)

- Doha (me-central1)

- Dammam (me-central2)

- Montreal (northamerica-northeast1)

- Toronto (northamerica-northeast2)

- Sao Paulo (southamerica-east1)

- Santiago (southamerica-west1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Dallas (us-south1)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

Disk type | Price (USD) |

|---|---|

pd-standard | $0.000065753 / 1 gibibyte hour |

pd-ssd | $0.000279452 / 1 gibibyte hour |

If you pay in a currency other than USD, the prices listed in your currency on Cloud Platform SKUs apply.

- All use is subject to the Vertex AI quota policy.

- You are required to store your data and program files in Google Cloud Storage buckets during the Vertex AI lifecycle. See more about Cloud Storage usage.

You are charged for training your models from the moment when resources are provisioned for a job until the job finishes.

Warning: Your training jobs are limited by the Vertex AI quota policy. If you choose a very powerful processing cluster for your first training jobs, it's likely you will exceed your quota.

Prediction and explanation

The following tables provide the prices of batch prediction, online prediction, and online explanation per node hour. A node hour represents the time a virtual machine spends running your prediction job or waiting in an active state (an endpoint with one or more models deployed) to handle prediction or explanation requests.

You can use Spot VMs with Vertex AI Inference. Spot VMs are billed according to Compute Engine Spot VMs pricing. There are Vertex AI Inference management fees in addition to your infrastructure usage, captured in the following tables.

You can use Compute Engine reservations with Vertex AI Inference. When using Compute Engine reservations, you're billed according to Compute Engine Pricing, including any applicable committed use discounts (CUDs). There are Vertex AI Inference management fees in addition to your infrastructure usage, captured in the following tables.

E2 Series

E2 Series

- Johannesburg (africa-south1)

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Melbourne (australia-southeast2)

- Warsaw (europe-central2)

- Finland (europe-north1)

- Madrid (europe-southwest1)

- Belgium (europe-west1)

- Turin (europe-west12)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Milan (europe-west8)

- Paris (europe-west9)

- Doha (me-central1)

- Dammam (me-central2)

- Tel Aviv (me-west1)

- Montreal (northamerica-northeast1)

- Toronto (northamerica-northeast2)

- Sao Paulo (southamerica-east1)

- Santiago (southamerica-west1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Dallas (us-south1)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

Machine type | Price (USD) |

|---|---|

e2-standard-2 | $0.0770564 / 1 hour |

e2-standard-4 | $0.1541128 / 1 hour |

e2-standard-8 | $0.3082256 / 1 hour |

e2-standard-16 | $0.6164512 / 1 hour |

e2-standard-32 | $1.2329024 / 1 hour |

e2-highmem-2 | $0.1039476 / 1 hour |

e2-highmem-4 | $0.2078952 / 1 hour |

e2-highmem-8 | $0.4157904 / 1 hour |

e2-highmem-16 | $0.8315808 / 1 hour |

e2-highcpu-2 | $0.056888 / 1 hour |

e2-highcpu-4 | $0.113776 / 1 hour |

e2-highcpu-8 | $0.227552 / 1 hour |

e2-highcpu-16 | $0.455104 / 1 hour |

e2-highcpu-32 | $0.910208 / 1 hour |

N1 Series

N1 Series

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Melbourne (australia-southeast2)

- Warsaw (europe-central2)

- Madrid (europe-southwest1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Milan (europe-west8)

- Paris (europe-west9)

- Tel Aviv (me-west1)

- Montreal (northamerica-northeast1)

- Toronto (northamerica-northeast2)

- Sao Paulo (southamerica-east1)

- Santiago (southamerica-west1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

Machine type | Price (USD) |

|---|---|

n1-standard-2 | $0.1095 / 1 hour |

n1-standard-4 | $0.219 / 1 hour |

n1-standard-8 | $0.438 / 1 hour |

n1-standard-16 | $0.876 / 1 hour |

n1-standard-32 | $1.752 / 1 hour |

n1-highmem-2 | $0.137 / 1 hour |

n1-highmem-4 | $0.274 / 1 hour |

n1-highmem-8 | $0.548 / 1 hour |

n1-highmem-16 | $1.096 / 1 hour |

n1-highcpu-2 | $0.081 / 1 hour |

n1-highcpu-4 | $0.162 / 1 hour |

n1-highcpu-8 | $0.324 / 1 hour |

n1-highcpu-16 | $0.648 / 1 hour |

n1-highcpu-32 | $1.296 / 1 hour |

N2 Series

N2 Series

- Johannesburg (africa-south1)

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Melbourne (australia-southeast2)

- Warsaw (europe-central2)

- Finland (europe-north1)

- Madrid (europe-southwest1)

- Belgium (europe-west1)

- Turin (europe-west12)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Milan (europe-west8)

- Paris (europe-west9)

- Doha (me-central1)

- Dammam (me-central2)

- Tel Aviv (me-west1)

- Montreal (northamerica-northeast1)

- Toronto (northamerica-northeast2)

- Sao Paulo (southamerica-east1)

- Santiago (southamerica-west1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Dallas (us-south1)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

Machine type | Price (USD) |

|---|---|

n2-standard-2 | $0.1116854 / 1 hour |

n2-standard-4 | $0.2233708 / 1 hour |

n2-standard-8 | $0.4467416 / 1 hour |

n2-standard-16 | $0.8934832 / 1 hour |

n2-standard-32 | $1.7869664 / 1 hour |

n2-highmem-2 | $0.1506654 / 1 hour |

n2-highmem-4 | $0.3013308 / 1 hour |

n2-highmem-8 | $0.6026616 / 1 hour |

n2-highmem-16 | $1.2053232 / 1 hour |

n2-highcpu-2 | $0.0824504 / 1 hour |

n2-highcpu-4 | $0.1649008 / 1 hour |

n2-highcpu-8 | $0.3298016 / 1 hour |

n2-highcpu-16 | $0.6596032 / 1 hour |

n2-highcpu-32 | $1.3192064 / 1 hour |

N2D Series

N2D Series

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Melbourne (australia-southeast2)

- Warsaw (europe-central2)

- Finland (europe-north1)

- Madrid (europe-southwest1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Milan (europe-west8)

- Paris (europe-west9)

- Tel Aviv (me-west1)

- Montreal (northamerica-northeast1)

- Sao Paulo (southamerica-east1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

Machine type | Price (USD) |

|---|---|

n2d-standard-2 | $0.0971658 / 1 hour |

n2d-standard-4 | $0.1943316 / 1 hour |

n2d-standard-8 | $0.3886632 / 1 hour |

n2d-standard-16 | $0.7773264 / 1 hour |

n2d-standard-32 | $1.5546528 / 1 hour |

n2d-highmem-2 | $0.131077 / 1 hour |

n2d-highmem-4 | $0.262154 / 1 hour |

n2d-highmem-8 | $0.524308 / 1 hour |

n2d-highmem-16 | $1.048616 / 1 hour |

n2d-highcpu-2 | $0.0717324 / 1 hour |

n2d-highcpu-4 | $0.1434648 / 1 hour |

n2d-highcpu-8 | $0.2869296 / 1 hour |

n2d-highcpu-16 | $0.5738592 / 1 hour |

n2d-highcpu-32 | $1.1477184 / 1 hour |

C2 Series

C2 Series

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Sydney (australia-southeast1)

- Finland (europe-north1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Montreal (northamerica-northeast1)

- Sao Paulo (southamerica-east1)

- Santiago (southamerica-west1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

Machine type | Price (USD) |

|---|---|

c2-standard-4 | $0.240028 / 1 hour |

c2-standard-8 | $0.480056 / 1 hour |

c2-standard-16 | $0.960112 / 1 hour |

c2-standard-30 | $1.80021 / 1 hour |

c2-standard-60 | $3.60042 / 1 hour |

C2D Series

C2D Series

- Taiwan (asia-east1)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Oregon (us-west1)

- Las Vegas (us-west4)

Machine type | Price (USD) |

|---|---|

c2d-standard-2 | $0.1044172 / 1 hour |

c2d-standard-4 | $0.2088344 / 1 hour |

c2d-standard-8 | $0.4176688 / 1 hour |

c2d-standard-16 | $0.8353376 / 1 hour |

c2d-standard-32 | $1.6706752 / 1 hour |

c2d-standard-56 | $2.9236816 / 1 hour |

c2d-standard-112 | $5.8473632 / 1 hour |

c2d-highmem-2 | $0.1408396 / 1 hour |

c2d-highmem-4 | $0.2816792 / 1 hour |

c2d-highmem-8 | $0.5633584 / 1 hour |

c2d-highmem-16 | $1.1267168 / 1 hour |

c2d-highmem-32 | $2.2534336 / 1 hour |

c2d-highmem-56 | $3.9435088 / 1 hour |

c2d-highmem-112 | $7.8870176 / 1 hour |

c2d-highcpu-2 | $0.086206 / 1 hour |

c2d-highcpu-4 | $0.172412 / 1 hour |

c2d-highcpu-8 | $0.344824 / 1 hour |

c2d-highcpu-16 | $0.689648 / 1 hour |

c2d-highcpu-32 | $1.379296 / 1 hour |

c2d-highcpu-56 | $2.413768 / 1 hour |

c2d-highcpu-112 | $4.827536 / 1 hour |

C3 Series

C3 Series

- Singapore (asia-southeast1)

- Belgium (europe-west1)

- Netherlands (europe-west4)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Dallas (us-south1)

Machine type | Price (USD) |

|---|---|

c3-highcpu-4 | $0.19824 / 1 hour |

c3-highcpu-8 | $0.39648 / 1 hour |

c3-highcpu-22 | $1.09032 / 1 hour |

c3-highcpu-44 | $2.18064 / 1 hour |

c3-highcpu-88 | $4.36128 / 1 hour |

c3-highcpu-176 | $8.72256 / 1 hour |

A2 Series

A2 Series

- Tokyo (asia-northeast1)

- Seoul (asia-northeast3)

- Singapore (asia-southeast1)

- Netherlands (europe-west4)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Oregon (us-west1)

- Salt Lake City (us-west3)

Machine type | Price (USD) |

|---|---|

a2-highgpu-1g | $4.2244949 / 1 hour |

a2-highgpu-2g | $8.4489898 / 1 hour |

a2-highgpu-4g | $16.8979796 / 1 hour |

a2-highgpu-8g | $33.7959592 / 1 hour |

a2-megagpu-16g | $64.1020592 / 1 hour |

a2-ultragpu-1g | $5.7818474 / 1 hour |

a2-ultragpu-2g | $11.5636948 / 1 hour |

a2-ultragpu-4g | $23.1273896 / 1 hour |

a2-ultragpu-8g | $46.2547792 / 1 hour |

When consuming from a reservation or spot capacity, billing is spread across two SKUs: the GCE SKU with the label 'vertex-ai-online-prediction' and the Vertex AI Management Fee SKU. This enables you to use your Committed Use Discounts (CUDs) in Vertex AI.

A3 Series

A3 Series

- Tokyo (asia-northeast1)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Delhi (asia-south2)

- Singapore (asia-southeast1)

- Sydney (australia-southeast1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Toronto (northamerica-northeast2)

- Iowa (us-central1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Dallas (us-south1)

- Oregon (us-west1)

- Las Vegas (us-west4)

Machine type | Price (USD) |

|---|---|

a3-ultragpu-8g | $96.015616 / 1 hour |

a3-megagpu-8g | $106.65474 / 1 hour |

When consuming from a reservation or spot capacity, billing is spread across two SKUs: the GCE SKU with the label 'vertex-ai-online-prediction' and the Vertex AI Management Fee SKU. This enables you to use your Committed Use Discounts (CUDs) in Vertex AI.

A4 Series

A4 Series

- Singapore (asia-southeast1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Los Angeles (us-west2)

Machine type | Price (USD) |

|---|---|

a4-highgpu-8g | $148.212 / 1 hour |

When consuming from a reservation or spot capacity, billing is spread across two SKUs: the GCE SKU with the label 'vertex-ai-online-prediction' and the Vertex AI Management Fee SKU. This enables you to use your Committed Use Discounts (CUDs) in Vertex AI.

A4X Series

A4X Series

- Netherlands (europe-west4)

- Iowa (us-central1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

Machine type | Price (USD) |

|---|---|

a4x-highgpu-4g | $74.75 / 1 hour |

When consuming from a reservation or spot capacity, billing is spread across two SKUs: the GCE SKU with the label 'vertex-ai-online-prediction' and the Vertex AI Management Fee SKU. This enables you to use your Committed Use Discounts (CUDs) in Vertex AI.

a4x-highgpu-4g requires at least 18 VMs.

G2 Series

G2 Series

- Taiwan (asia-east1)

- Tokyo (asia-northeast1)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Dammam (me-central2)

- Toronto (northamerica-northeast2)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Oregon (us-west1)

- Las Vegas (us-west4)

Machine type | Price (USD) |

|---|---|

g2-standard-4 | $0.81293 / 1 hour |

g2-standard-8 | $0.98181 / 1 hour |

g2-standard-12 | $1.15069 / 1 hour |

g2-standard-16 | $1.31957 / 1 hour |

g2-standard-24 | $2.30138 / 1 hour |

g2-standard-32 | $1.99509 / 1 hour |

g2-standard-48 | $4.60276 / 1 hour |

g2-standard-96 | $9.20552 / 1 hour |

When consuming from a reservation or spot capacity, billing is spread across two SKUs: the GCE SKU with the label 'vertex-ai-online-prediction' and the Vertex AI Management Fee SKU. This enables you to use your Committed Use Discounts (CUDs) in Vertex AI.

G4 Series

G4 Series

- Taiwan (asia-east1)

- Delhi (asia-south2)

- Singapore (asia-southeast1)

- Finland (europe-north1)

- Belgium (europe-west1)

- Netherlands (europe-west4)

- Milan (europe-west8)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Alabama (us-east7)

- Oregon (us-west1)

- Salt Lake City (us-west3)

Machine type | Price (USD) |

|---|---|

g4-standard-48 | $5.1750851 / 1 hour |

g4-standard-96 | $10.3501702 / 1 hour |

g4-standard-192 | $20.7003404 / 1 hour |

g4-standard-384 | $41.4006808 / 1 hour |

TPU v5e pricing

TPU v5e pricing

- Singapore (asia-southeast1)

- Netherlands (europe-west4)

- Iowa (us-central1)

- South Carolina (us-east1)

- Oregon (us-west1)

- Las Vegas (us-west4)

Machine type | Price (USD) |

|---|---|

ct5lp-hightpu-1t | $1.38 / 1 hour |

ct5lp-hightpu-4t | $5.52 / 1 hour |

ct5lp-hightpu-8t | $5.52 / 1 hour |

TPU v6e pricing

TPU v6e pricing

- Tokyo (asia-northeast1)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- London (europe-west2)

- Netherlands (europe-west4)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Dallas (us-south1)

Machine type | Price (USD) |

|---|---|

ct6e-standard-1t | $3.105 / 1 hour |

ct6e-standard-4t | $12.42 / 1 hour |

ct6e-standard-8t | $24.84 / 1 hour |

Each machine type is charged as the following SKUs on your Google Cloud bill:

- vCPU cost: measured in vCPU hours

- RAM cost: measured in GB hours

- GPU cost: if either built into the machine or optionally configured, measured in GPU hours

The prices for machine types are used to approximate the total hourly cost for each prediction node of a model version using that machine type.

For example, a machine type of n1-highcpu-32 includes 32 vCPUs and 32 GB of RAM. Therefore, the hourly pricing equals 32 vCPU hours + 32 GB hours.

E2 Series

E2 Series

- Johannesburg (africa-south1)

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Melbourne (australia-southeast2)

- Warsaw (europe-central2)

- Finland (europe-north1)

- Madrid (europe-southwest1)

- Belgium (europe-west1)

- Turin (europe-west12)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Milan (europe-west8)

- Paris (europe-west9)

- Doha (me-central1)

- Dammam (me-central2)

- Tel Aviv (me-west1)

- Montreal (northamerica-northeast1)

- Toronto (northamerica-northeast2)

- Sao Paulo (southamerica-east1)

- Santiago (southamerica-west1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Dallas (us-south1)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

Item | Price (USD) |

|---|---|

vCPU | $0.0250826 / 1 hour |

RAM | $0.0033614 / 1 gibibyte hour |

N1 Series

N1 Series

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Melbourne (australia-southeast2)

- Warsaw (europe-central2)

- Finland (europe-north1)

- Madrid (europe-southwest1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Milan (europe-west8)

- Paris (europe-west9)

- Tel Aviv (me-west1)

- Montreal (northamerica-northeast1)

- Toronto (northamerica-northeast2)

- Sao Paulo (southamerica-east1)

- Santiago (southamerica-west1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

Item | Price (USD) |

|---|---|

vCPU | $0.036 / 1 hour |

RAM | $0.005 / 1 gibibyte hour |

N2 Series

N2 Series

- Johannesburg (africa-south1)

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Melbourne (australia-southeast2)

- Warsaw (europe-central2)

- Finland (europe-north1)

- Madrid (europe-southwest1)

- Belgium (europe-west1)

- Turin (europe-west12)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Milan (europe-west8)

- Paris (europe-west9)

- Doha (me-central1)

- Dammam (me-central2)

- Tel Aviv (me-west1)

- Montreal (northamerica-northeast1)

- Toronto (northamerica-northeast2)

- Sao Paulo (southamerica-east1)

- Santiago (southamerica-west1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Dallas (us-south1)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

Item | Price (USD) |

|---|---|

vCPU | $0.0363527 / 1 hour |

RAM | $0.0048725 / 1 gibibyte hour |

N2D Series

N2D Series

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Melbourne (australia-southeast2)

- Warsaw (europe-central2)

- Finland (europe-north1)

- Madrid (europe-southwest1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Milan (europe-west8)

- Paris (europe-west9)

- Tel Aviv (me-west1)

- Montreal (northamerica-northeast1)

- Sao Paulo (southamerica-east1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

Item | Price (USD) |

|---|---|

vCPU | $0.0316273 / 1 hour |

RAM | $0.0042389 / 1 gibibyte hour |

C2 Series

C2 Series

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Sydney (australia-southeast1)

- Finland (europe-north1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Montreal (northamerica-northeast1)

- Sao Paulo (southamerica-east1)

- Santiago (southamerica-west1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

Item | Price (USD) |

|---|---|

vCPU | $0.039077 / 1 hour |

RAM | $0.0052325 / 1 gibibyte hour |

C2D Series

C2D Series

- Taiwan (asia-east1)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Oregon (us-west1)

- Las Vegas (us-west4)

Item | Price (USD) |

|---|---|

vCPU | $0.0339974 / 1 hour |

RAM | $0.0045528 / 1 gibibyte hour |

C3 Series

C3 Series

- Singapore (asia-southeast1)

- Belgium (europe-west1)

- Netherlands (europe-west4)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Dallas (us-south1)

Item | Price (USD) |

|---|---|

vCPU | $0.03908 / 1 hour |

RAM | $0.00524 / 1 gibibyte hour |

A2 Series

A2 Series

- Tokyo (asia-northeast1)

- Seoul (asia-northeast3)

- Singapore (asia-southeast1)

- Netherlands (europe-west4)

- Tel Aviv (me-west1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Oregon (us-west1)

- Salt Lake City (us-west3)

Item | Price (USD) |

|---|---|

vCPU | $0.0363527 / 1 hour |

RAM | $0.0048725 / 1 gibibyte hour |

GPU (A100 40 GB) | $3.3741 / 1 hour |

GPU (A100 80 GB) | $4.51729 / 1 hour |

A3 Series

A3 Series

- Tokyo (asia-northeast1)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Delhi (asia-south2)

- Singapore (asia-southeast1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Toronto (northamerica-northeast2)

- Iowa (us-central1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Dallas (us-south1)

- Oregon (us-west1)

- Las Vegas (us-west4)

Item | Price (USD) |

|---|---|

vCPU | $0.0293227 / 1 hour |

RAM | $0.0025534 / 1 gibibyte hour |

GPU (H100 80 GB) | $11.2660332 / 1 hour |

GPU (H200) | $10.708501 / 1 hour |

G2 Series

G2 Series

- Taiwan (asia-east1)

- Tokyo (asia-northeast1)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Dammam (me-central2)

- Toronto (northamerica-northeast2)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Oregon (us-west1)

- Las Vegas (us-west4)

Item | Price (USD) |

|---|---|

vCPU | $0.02874 / 1 hour |

RAM | $0.00337 / 1 gibibyte hour |

GPU (L4) | $0.64405 / 1 hour |

Some machine types allow you to add optional GPU accelerators for prediction. Optional GPUs incur an additional charge, separate from those described in the previous table. View each pricing table, which describes the pricing for each type of optional GPU.

Accelerators - price per hour

Accelerators - price per hour

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Warsaw (europe-central2)

- Finland (europe-north1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Tel Aviv (me-west1)

- Montreal (northamerica-northeast1)

- Sao Paulo (southamerica-east1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

GPU type | Price (USD) |

|---|---|

NVIDIA_TESLA_P4 | $0.69 / 1 hour |

NVIDIA_TESLA_P100 | $1.679 / 1 hour |

NVIDIA_TESLA_T4 | $0.402 / 1 hour |

NVIDIA_TESLA_V100 | $2.852 / 1 hour |

Pricing is per GPU. If you use multiple GPUs per prediction node (or if your version scales to use multiple nodes),the costs scale accordingly.

AI Platform Prediction serves predictions from your model by running a number of virtual machines ("nodes"). By default, Vertex AI automatically scales the number of nodes running at any time. For online prediction, the number of nodes scales to meet demand. Each node can respond to multiple prediction requests. For batch prediction, the number of nodes scales to reduce the total time it takes to run a job. You can customize how prediction nodes scale.

You are charged for the time that each node runs for your model, including:

- When the node is processing a batch prediction job.

- When the node is processing an online prediction request.

- When the node is in a ready state for serving online predictions.

The cost of one node running for one hour is a node hour. The table of prediction prices describes the price of a node hour, which varies across regions and between online prediction and batch prediction.

You can consume node hours in fractional increments. For example, one node running for 30 minutes costs 0.5 node hours.

Cost calculations for Compute Engine (N1) machine types

- The running time of a node is billed in 30-second increments. This means that every 30 seconds, your project is billed for 30 seconds worth of whatever vCPU, RAM, and GPU resources that your node is using at that moment.

More about automatic scaling of prediction nodes

Online prediction | Batch prediction |

|---|---|

The priority of the scaling is to reduce the latency of individual requests. The service keeps your model in a ready state for a few idle minutes after servicing a request. | The priority of the scaling is to reduce the total elapsed time of the job. |

Scaling affects your total charges each month: the more numerous and frequent your requests, the more nodes will be used. | Scaling should have little effect on the price of your job, though there is some overhead involved in bringing up a new node. |

You can choose to let the service scale in response to traffic (automatic scaling) or you can specify a number of nodes to run constantly to avoid latency (manual scaling).

| You can affect scaling by setting a maximum number of nodes to use for a batch prediction job, and by setting the number of nodes to keep running for a model when you deploy it. |

Batch prediction jobs are charged after job completion

Batch prediction jobs are charged after job completion, not incrementally during the job. Any Cloud Billing budget alerts that you have configured aren't triggered while a job is running. Before starting a large job, consider running some cost benchmark jobs with small input data first.

Example of a prediction calculation

A real-estate company in an Americas region runs a weekly prediction of housing values in areas it serves. In one month, it runs predictions for four weeks in batches of 3920, 4277, 3849, and 3961. Jobs are limited to one node and each instance takes an average of 0.72 seconds of processing.

First calculate the length of time that each job ran:

- Calculations

Each job ran for more than ten minutes, so it is charged for each minute of processing:

- Calculations

The total charge for the month is $0.26.

This example assumed jobs ran on a single node and took a consistent amount of time per input instance. In real usage, make sure to account for multiple nodes and use the actual amount of time each node spends running for your calculations.

Charges for Vertex Explainable AI

Charges for Vertex Explainable AI

Feature-based explanations

Feature-based explanations come at no extra charge to prediction prices. However, explanations take longer to process than normal predictions, so heavy usage of Vertex Explainable AI along with auto-scaling could result in more nodes being started, which would increase prediction charges.

Example-based explanations

Pricing for example-based explanations consists of the following:

- When you upload a model or update a model's dataset, you are billed:

- per node hour for the batch prediction job that is used to generate the latent space representations of examples. This is billed at the same rate as prediction.

- a cost for building or updating indexes. This cost is the same as the indexing costs for Vector Search, which is number of examples * number of dimensions * 4 bytes per float * $3.00 per GB. For example, if you have 1 million examples and 1,000 dimension latent space, the cost is $12 (1,000,000 * 1,000 * 4 * 3.00 / 1,000,000,000).

- When you deploy to an endpoint, you are billed per node hour for each node in your endpoint. All compute associated with the endpoint is charged at same rate as prediction. However, because Example-based explanations require additional compute resources to serve the Vector Search index, this results in more nodes being started which increases prediction charges.

Vertex AI Neural Architecture Search

The following tables summarize the pricing in each region where Neural Architecture Search is available.

Prices

Prices

The following tables provide the price per hour of various configurations.

You can choose a predefined scale tier or a custom configuration of selected machine types. If you choose a custom configuration, sum the costs of the virtual machines you use.

Accelerator-enabled legacy machine types include the cost of the accelerators in their pricing. If you use Compute Engine machine types and attach accelerators, the cost of the accelerators is separate. To calculate this cost, multiply the prices in the following table of accelerators by the number of each type of accelerator you use.

Machine types

Machine types

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Finland (europe-north1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Montreal (northamerica-northeast1)

- Sao Paulo (southamerica-east1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

Machine type | Price (USD) |

|---|---|

n1-standard-4 | $0.2849985 / 1 hour |

n1-standard-8 | $0.569997 / 1 hour |

n1-standard-16 | $1.139994 / 1 hour |

n1-standard-32 | $2.279988 / 1 hour |

n1-standard-64 | $4.559976 / 1 hour |

n1-standard-96 | $6.839964 / 1 hour |

n1-highmem-2 | $0.1774545 / 1 hour |

n1-highmem-4 | $0.1774545 / 1 hour |

n1-highmem-8 | $0.709818 / 1 hour |

n1-highmem-16 | $1.419636 / 1 hour |

n1-highmem-32 | $2.839272 / 1 hour |

n1-highmem-64 | $5.678544 / 1 hour |

n1-highmem-96 | $8.517816 / 1 hour |

n1-highcpu-16 | $0.8501832 / 1 hour |

n1-highcpu-32 | $1.7003664 / 1 hour |

n1-highcpu-64 | $3.4007328 / 1 hour |

n1-highcpu-96 | $5.1010992 / 1 hour |

a2-highgpu-1g | $5.641070651 / 1 hour |

a2-highgpu-2g | $11.282141301 / 1 hour |

a2-highgpu-4g | $22.564282603 / 1 hour |

a2-highgpu-8g | $45.128565205 / 1 hour |

a2-highgpu-16g | $90.257130411 / 1 hour |

e2-standard-4 | $0.20103426 / 1 hour |

e2-standard-8 | $0.40206852 / 1 hour |

e2-standard-16 | $0.80413704 / 1 hour |

e2-standard-32 | $1.60827408 / 1 hour |

e2-highmem-2 | $0.13559949 / 1 hour |

e2-highmem-4 | $0.27119898 / 1 hour |

e2-highmem-8 | $0.54239796 / 1 hour |

e2-highmem-16 | $1.08479592 / 1 hour |

e2-highcpu-16 | $0.59364288 / 1 hour |

e2-highcpu-32 | $1.18728576 / 1 hour |

n2-standard-4 | $0.291354 / 1 hour |

n2-standard-8 | $0.582708 / 1 hour |

n2-standard-16 | $1.165416 / 1 hour |

n2-standard-32 | $2.330832 / 1 hour |

n2-standard-48 | $3.496248 / 1 hour |

n2-standard-64 | $4.661664 / 1 hour |

n2-standard-80 | $5.82708 / 1 hour |

n2-highmem-2 | $0.196521 / 1 hour |

n2-highmem-4 | $0.393042 / 1 hour |

n2-highmem-8 | $0.786084 / 1 hour |

n2-highmem-16 | $1.572168 / 1 hour |

n2-highmem-32 | $3.144336 / 1 hour |

n2-highmem-48 | $4.716504 / 1 hour |

n2-highmem-64 | $6.288672 / 1 hour |

n2-highmem-80 | $7.86084 / 1 hour |

n2-highcpu-16 | $0.860352 / 1 hour |

n2-highcpu-32 | $1.720704 / 1 hour |

n2-highcpu-64 | $3.441408 / 1 hour |

n2-highcpu-80 | $4.30176 / 1 hour |

c2-standard-4 | $0.313212 / 1 hour |

c2-standard-8 | $0.626424 / 1 hour |

c2-standard-16 | $1.252848 / 1 hour |

c2-standard-30 | $2.34909 / 1 hour |

c2-standard-60 | $4.69818 / 1 hour |

If you pay in a currency other than USD, the prices listed in your currency on Cloud Platform SKUs apply.

Prices for a2-highgpu instances include the charges for the attached NVIDIA_TESLA_A100 Accelerators.

Accelerators

Accelerators

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Finland (europe-north1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Montreal (northamerica-northeast1)

- Sao Paulo (southamerica-east1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

GPU type | Price (USD) |

|---|---|

NVIDIA_TESLA_A100 | $4.400862 / 1 hour |

NVIDIA_TESLA_P4 | $0.90 / 1 hour |

NVIDIA_TESLA_P100 | $2.19 / 1 hour |

NVIDIA_TESLA_T4 | $0.525 / 1 hour |

NVIDIA_TESLA_V100 | $3.72 / 1 hour |

If you pay in a currency other than USD, the prices listed in your currency on Cloud Platform SKUs apply.

Disks

Disks

- Taiwan (asia-east1)

- Hong Kong (asia-east2)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Jakarta (asia-southeast2)

- Sydney (australia-southeast1)

- Finland (europe-north1)

- Belgium (europe-west1)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Zurich (europe-west6)

- Montreal (northamerica-northeast1)

- Sao Paulo (southamerica-east1)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

- Las Vegas (us-west4)

Disk type | Price (USD) |

|---|---|

pd-standard | $0.000082192 / 1 gibibyte hour |

pd-ssd | $0.000349315 / 1 gibibyte hour |

If you pay in a currency other than USD, the prices listed in your currency on Cloud Platform SKUs apply.

Notes:

- All use is subject to the Neural Architecture Search quota policy.

- You are required to store your data and program files in Cloud Storage buckets during the Neural Architecture Search lifecycle. See more about Cloud Storage usage.

- For volume-based discounts, contact the Sales team.

- The disk price is only charged when you configure the disk size of each VM to be larger than 100 GB. There is no charge for the first 100 GB (the default disk size) of disk for each VM. For example, if you configure each VM to have 105 GB of disk, then you are charged for 5 GB of disk for each VM.

Required use of Cloud Storage

Required use of Cloud Storage

In addition to the costs described in this document, you are required to store data and program files in Cloud Storage buckets during the Neural Architecture Search lifecycle. This storage is subject to the Cloud Storage pricing policy.

Required use of Cloud Storage includes:

- Staging your training application package.

- Storing your training input data.

Note: You can use another Google Cloud service to store your input data, such as BigQuery, which has its own associated pricing.

- Storing the output of your jobs. Neural Architecture Search doesn't require long-term storage of these items. You can remove the files as soon as the operation is complete.

Free operations for managing your resources

Free operations for managing your resources

The resource management operations provided by Neural Architecture Search are available free of charge. The Neural Architecture Search quota policy does limit some of these operations.

Resource | Free operations |

|---|---|

jobs | get, list, cancel |

operations | get, list, cancel, delete |

Vertex AI Pipelines

Vertex AI Pipelines charges a run execution fee of $0.03 per Pipeline Run. You are not charged the execution fee during the Preview release. You also pay for Google Cloud resources you use with Vertex AI Pipelines, such as Compute Engine resources consumed by pipeline components (charged at the same rate as for Vertex AI training). Finally, you are responsible for the cost of any services (such as Dataflow) called by your pipeline.

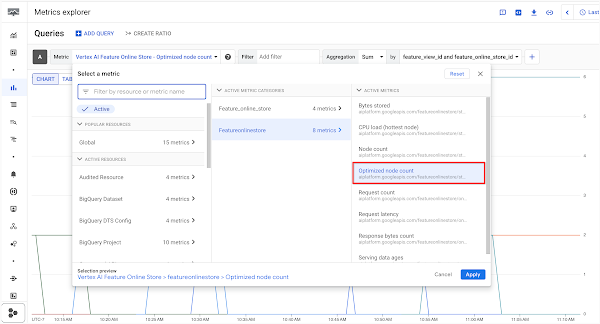

Vertex AI Feature Store

Vertex AI Feature Store is Generally Available (GA) since November 2023. For information on the previous version of the product go to Vertex AI Feature Store (Legacy).

New Vertex AI Feature Store

New Vertex AI Feature Store

The new Vertex AI Feature Store supports functionality across 2 types of operations:

- Offline operations are operations to transfer, store, retrieve and transform data in the offline store (BigQuery)

- Online operations are operations to transfer data into the online store(s) and operations on data while it is in the online store(s).

Offline Operations Pricing

Since BigQuery is used for offline operations, please refer to BigQuery pricing for functionality such as ingestion to the offline store, querying the offline store, and offline storage.

Online Operations Pricing

For online operations, Vertex AI Feature Store charges for any GA features to transfer data into the online store, serve data or store data. A node-hour represents the time a virtual machine spends to complete an operation, charged to the minute.

- Johannesburg (africa-south1)

- Tokyo (asia-northeast1)

- Osaka (asia-northeast2)

- Seoul (asia-northeast3)

- Mumbai (asia-south1)

- Singapore (asia-southeast1)

- Warsaw (europe-central2)

- Madrid (europe-southwest1)

- Belgium (europe-west1)

- Turin (europe-west12)

- London (europe-west2)

- Frankfurt (europe-west3)

- Netherlands (europe-west4)

- Milan (europe-west8)

- Doha (me-central1)

- Dammam (me-central2)

- Iowa (us-central1)

- South Carolina (us-east1)

- Northern Virginia (us-east4)

- Columbus (us-east5)

- Dallas (us-south1)

- Oregon (us-west1)

- Los Angeles (us-west2)

- Salt Lake City (us-west3)

Operation | Price (USD) |

|---|---|

Data processing node Data processing (e.g. ingesting into any online store, monitoring, etc.) | $0.08 / 1 hour |

Optimized online serving node Low latency serving and embeddings serving Each node includes 200GB of storage | $0.30 / 1 hour |

Bigtable online serving node Serving with Cloud Bigtable | $0.94 / 1 hour |

Bigtable online serving storage Storage for serving with Cloud Bigtable | $0.000342466 / 1 gibibyte hour |

Optimized online serving and Bigtable online serving use different architectures, so their nodes are not comparable.

If you pay in a currency other than USD, the prices listed in your currency on Cloud Platform SKUs apply.

Online Operations Workload Estimates

Consider the following guidelines when estimating your workloads. The number of nodes required for a given workload may differ across each serving approach.

- Data processing: