This document describes options for connecting to and from the internet using Compute Engine resources that have private IP addresses. This is helpful for developers who create Google Cloud services and for network administrators of Google Cloud environments.

This tutorial assumes you are familiar with deploying VPCs, with Compute Engine, and with basic TCP/IP networking.

Objectives

- Learn about the options available for connecting private VMs to hosts outside their VPC network.

- Create an instance of Identity-Aware Proxy (IAP) for TCP tunnels that's appropriate for interactive services such as SSH.

- Create a Cloud NAT instance to enable VMs to make outbound connections to the internet.

- Configure an HTTP load balancer to support inbound connections from the internet to your VMs.

Costs

This tutorial uses billable components of Google Cloud, including Compute Engine, Cloud NAT, and Cloud Load Balancing.

Use the pricing calculator to generate a cost estimate based on your projected usage. We calculate that the total to run this tutorial is less than US$5 per day.

Before you begin

- Sign in to your Google Cloud account. If you're new to Google Cloud, create an account to evaluate how our products perform in real-world scenarios. New customers also get $300 in free credits to run, test, and deploy workloads.

- In the Google Cloud console, on the project selector page, select or create a Google Cloud project.

- Make sure that billing is enabled for your Google Cloud project.


Introduction

Private IP addresses provide a number of advantages over public (external) IP addresses, including:

- Reduced attack surface. Removing external IP addresses from VMs makes it more difficult for attackers to reach the VMs and exploit potential vulnerabilities.

- Increased flexibility. Introducing a layer of abstraction, such as a load balancer or a NAT service, allows more reliable and flexible service delivery when compared with static, external IP addresses.

This solution discusses three scenarios, as described in the following table:

| Interactive | Fetching | Serving |
|---|---|---|
| An SSH connection is initiated from a remote host directly to a VM using IAP for TCP. | A connection is initiated by a VM to an external host on the internet using Cloud NAT. | A connection is initiated by a remote host to a VM through a global Google Cloud load balancer. |
| Example: Remote administration using SSH or RDP | Example: OS updates, external APIs | Example: Application frontends, WordPress |

Some environments might involve only one of these scenarios. However, many environments require all of these scenarios, and this is fully supported in Google Cloud.

The following sections describe a multi-region environment with an HTTP load-balanced service backed by four VMs, two in each of two regions. These VMs use Cloud NAT for outgoing communications. For administration, the VMs are accessible through SSH tunneled over IAP.

The following diagram provides an overview of all three use cases and the relevant components.

Creating VM instances

To begin the tutorial, you create a total of four virtual machine (VM) instances—two instances per region in two different regions. You give all of the instances the same tag, which is used later by a firewall rule to allow incoming traffic to reach your instances.

The following diagram shows the VM instances and instance groups you create, distributed in two zones.

The startup script that you add to each instance installs Apache and creates a unique home page for each instance.
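Because the four startup scripts differ only in the page heading, one way to avoid copy-and-paste mistakes is to generate them from a single template. The following is a minimal sketch and not part of the original procedure: the function names `render_startup_script` and `write_startup_scripts` and the output file names are hypothetical, and the script body mirrors the gcloud startup script used in this tutorial.

```shell
#!/bin/sh
# Print a startup script whose home page names the instance given as $1.
# The function name is illustrative; it is not a gcloud feature.
render_startup_script() {
  cat <<EOF
#! /bin/bash
sudo apt-get update
sudo apt-get install apache2 -y
sudo service apache2 restart
echo '<!doctype html><html><body><h1>$1</h1></body></html>' | tee /var/www/html/index.html
EOF
}

# Write one script file per tutorial instance into the directory given as $1.
write_startup_scripts() {
  for name in www-1 www-2 www-3 www-4; do
    render_startup_script "$name" > "$1/$name-startup.sh"
  done
}
```

If you take this approach, you could pass a generated file to `gcloud compute instances create` with `--metadata-from-file startup-script=PATH` instead of supplying the script inline with `--metadata`.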

The procedure includes instructions for using both the Google Cloud console and gcloud commands. The easiest way to use gcloud commands is to use Cloud Shell.

Console

In the Google Cloud console, go to the VM instances page:

Click Create instance.

Set Name to www-1.

Set the Zone to us-central1-b.

Click Management, Security, Disks, Networking, Sole Tenancy.

Click Networking and make the following settings:

- For HTTP traffic, in the Network tags box, enter http-tag.

- Under Network Interfaces, click edit.

- Under External IP, select None.

Click Management and set Startup script to the following:

    sudo apt-get update
    sudo apt-get install apache2 -y
    sudo a2ensite default-ssl
    sudo a2enmod ssl
    sudo service apache2 restart
    echo '<!doctype html><html><body><h1>server 1</h1></body></html>' | sudo tee /var/www/html/index.html

Click Create.

Create www-2 with the same settings, except set Startup script to the following:

    sudo apt-get update
    sudo apt-get install apache2 -y
    sudo a2ensite default-ssl
    sudo a2enmod ssl
    sudo service apache2 restart
    echo '<!doctype html><html><body><h1>server 2</h1></body></html>' | sudo tee /var/www/html/index.html

Create www-3 with the same settings, except set Zone to europe-west1-b and set Startup script to the following:

    sudo apt-get update
    sudo apt-get install apache2 -y
    sudo a2ensite default-ssl
    sudo a2enmod ssl
    sudo service apache2 restart
    echo '<!doctype html><html><body><h1>server 3</h1></body></html>' | sudo tee /var/www/html/index.html

Create www-4 with the same settings, except set Zone to europe-west1-b and set Startup script to the following:

    sudo apt-get update
    sudo apt-get install apache2 -y
    sudo a2ensite default-ssl
    sudo a2enmod ssl
    sudo service apache2 restart
    echo '<!doctype html><html><body><h1>server 4</h1></body></html>' | sudo tee /var/www/html/index.html

gcloud

Open Cloud Shell:

Create an instance named www-1 in us-central1-b with a basic startup script:

    gcloud compute instances create www-1 \
        --image-family debian-12 \
        --image-project debian-cloud \
        --zone us-central1-b \
        --tags http-tag \
        --network-interface=no-address \
        --metadata startup-script="#! /bin/bash
    sudo apt-get update
    sudo apt-get install apache2 -y
    sudo service apache2 restart
    echo '<!doctype html><html><body><h1>www-1</h1></body></html>' | tee /var/www/html/index.html"

Create an instance named www-2 in us-central1-b:

    gcloud compute instances create www-2 \
        --image-family debian-12 \
        --image-project debian-cloud \
        --zone us-central1-b \
        --tags http-tag \
        --network-interface=no-address \
        --metadata startup-script="#! /bin/bash
    sudo apt-get update
    sudo apt-get install apache2 -y
    sudo service apache2 restart
    echo '<!doctype html><html><body><h1>www-2</h1></body></html>' | tee /var/www/html/index.html"

Create an instance named www-3, this time in europe-west1-b:

    gcloud compute instances create www-3 \
        --image-family debian-12 \
        --image-project debian-cloud \
        --zone europe-west1-b \
        --tags http-tag \
        --network-interface=no-address \
        --metadata startup-script="#! /bin/bash
    sudo apt-get update
    sudo apt-get install apache2 -y
    sudo service apache2 restart
    echo '<!doctype html><html><body><h1>www-3</h1></body></html>' | tee /var/www/html/index.html"

Create an instance named www-4, this one also in europe-west1-b:

    gcloud compute instances create www-4 \
        --image-family debian-12 \
        --image-project debian-cloud \
        --zone europe-west1-b \
        --tags http-tag \
        --network-interface=no-address \
        --metadata startup-script="#! /bin/bash
    sudo apt-get update
    sudo apt-get install apache2 -y
    sudo service apache2 restart
    echo '<!doctype html><html><body><h1>www-4</h1></body></html>' | tee /var/www/html/index.html"

Terraform

Open Cloud Shell:

Clone the repository from GitHub:

    git clone https://github.com/GoogleCloudPlatform/gce-public-connectivity-terraform

Change the working directory to the repository directory:

    cd gce-public-connectivity-terraform

Install Terraform.

Replace [YOUR-ORGANIZATION-NAME] in the scripts/set_env_vars.sh file with your Google Cloud organization name.

Set environment variables:

    source scripts/set_env_vars.sh

Apply the Terraform configuration:

    terraform apply

Configuring IAP tunnels for interacting with instances

To log in to VM instances, you connect to the instances using tools like SSH or RDP. In the configuration you're creating in this tutorial, you can't directly connect to instances. However, you can use TCP forwarding in IAP, which enables remote access for these interactive patterns.

For this tutorial, you use SSH.

In this section you do the following:

- Connect to a Compute Engine instance using the IAP tunnel.

- Add a second user with IAP tunneling permission in IAM.

The following diagram illustrates the architecture that you build in this section. The grey areas are discussed in other parts of this tutorial.

Limitations of IAP

- Bandwidth: The IAP TCP forwarding feature isn't intended for bulk transfer of data. IAP reserves the right to rate-limit users who are deemed to be abusing this service.

- Connection length: IAP won't disconnect active sessions unless required for maintenance.

- Protocol: IAP for TCP doesn't support UDP.

Create firewall rules to allow tunneling

In order to connect to your instances using SSH, you need to open an appropriate port on the firewall. IAP connections come from a specific set of IP addresses (35.235.240.0/20). Therefore, you can limit the rule to this CIDR range.
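To make the scope of that rule concrete: a /20 block contains 4,096 addresses, so 35.235.240.0/20 spans 35.235.240.0 through 35.235.255.255. The following sketch checks whether an IPv4 address falls inside a CIDR block using shell arithmetic. The helper names `ip_to_int` and `in_cidr` are illustrative and are not part of gcloud or IAP; the sketch assumes well-formed dotted-quad input without leading zeros.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Return 0 if address $1 is inside CIDR block $2 (for example 35.235.240.0/20).
in_cidr() {
  addr=$(ip_to_int "$1")
  base=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  # Build a netmask with the top $prefix bits set.
  mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
  [ $(( addr & mask )) -eq $(( base & mask )) ]
}
```

For example, `in_cidr 35.235.241.17 35.235.240.0/20 && echo "inside the IAP range"` succeeds, while an address such as 35.236.0.1 is rejected.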

Console

In the Google Cloud console, go to the Firewall policies page:

Click Create firewall rule.

Set Name to allow-ssh-from-iap.

Leave VPC network as default.

Under Targets, select Specified target tags.

Set Target tags to http-tag.

Leave Source filter set to IP ranges.

Set Source IP Ranges to 35.235.240.0/20.

Set Allowed protocols and ports to tcp:22.

Click Create.

It might take a moment for the new firewall rule to be displayed in the console.

gcloud

Create a firewall rule named allow-ssh-from-iap:

    gcloud compute firewall-rules create allow-ssh-from-iap \
        --source-ranges 35.235.240.0/20 \
        --target-tags http-tag \
        --allow tcp:22

Terraform

Copy the firewall rules Terraform file to the current directory:

    cp iap/vpc_firewall_rules.tf .

Apply the Terraform configuration:

    terraform apply

Test tunneling

In Cloud Shell, connect to instance www-1 using IAP:

    gcloud compute ssh www-1 \
        --zone us-central1-b \
        --tunnel-through-iap

If the connection succeeds, you have an SSH session that is tunneled through IAP directly to your private VM.

Grant access to additional users

IAP uses your existing project roles and permissions when you connect to VM instances. By default, instance owners are the only users that have the IAP-secured Tunnel User role. If you want to allow other users to access your VMs using IAP tunneling, you need to grant this role to those users.

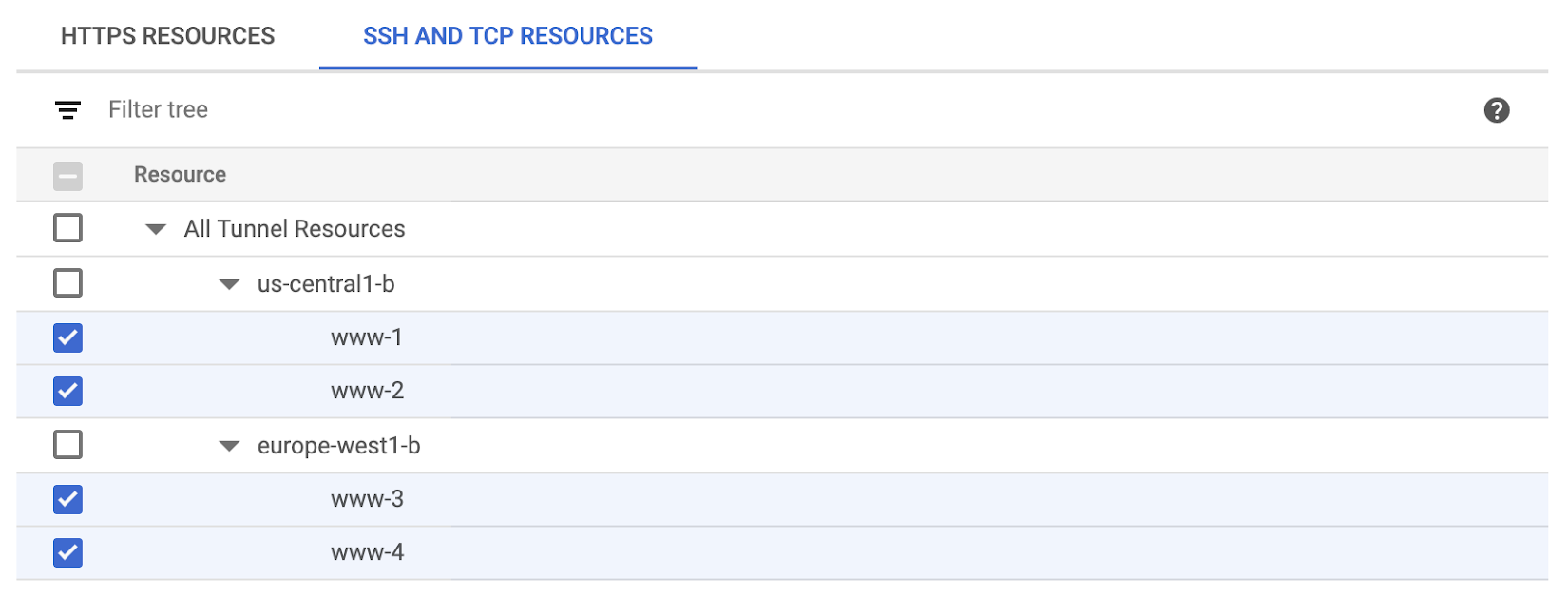

In the Google Cloud console, go to Security > Identity-Aware Proxy:

If you see a message that tells you that you need to configure the OAuth consent screen, disregard the message; it's not relevant to IAP for TCP.

Select the SSH and TCP Resources tab.

Select the VMs you've created:

On the right-hand side, click Add Principal.

Add the users you want to grant permissions to, select the IAP-secured Tunnel User role, and then click Save.
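The same role can also be granted from the command line. The following is a hedged sketch rather than part of the original procedure: it wraps `gcloud projects add-iam-policy-binding` in a small helper whose name, `grant_iap_tunnel_access`, is hypothetical, and it binds the role `roles/iap.tunnelResourceAccessor` (IAP-secured Tunnel User) at the project level. That is broader than the console steps above, which grant access only on the selected VMs, so treat it as an assumption about what you want.

```shell
#!/bin/sh
# Grant the IAP-secured Tunnel User role to one user at the project level.
# Arguments: PROJECT_ID USER_EMAIL (both are placeholders that you supply).
# Note: a project-level binding applies to every VM in the project.
grant_iap_tunnel_access() {
  gcloud projects add-iam-policy-binding "$1" \
    --member "user:$2" \
    --role "roles/iap.tunnelResourceAccessor"
}
```

A call might look like `grant_iap_tunnel_access my-project alice@example.com`, where both values are placeholders.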

Summary

You can now connect to your instances using SSH to administer the instances or troubleshoot them.

Many applications need to make outgoing connections in order to download patches, connect with partners, or download resources. In the next section, you configure Cloud NAT to allow your VMs to reach these resources.

Deploying Cloud NAT for fetching

The Cloud NAT service allows Google Cloud VM instances that don't have external IP addresses to connect to the internet. Cloud NAT implements outbound NAT in conjunction with a default route to allow your instances to reach the internet. It doesn't implement inbound NAT. Hosts outside of your VPC network can respond only to established connections initiated by your instances; they cannot initiate their own connections to your instances using Cloud NAT. NAT is not used for traffic within Google Cloud.

Cloud NAT is a regional resource. You can configure it to allow traffic from all primary and secondary IP address ranges of subnets in a region, or you can configure it to apply to only some of those ranges.

In this section, you configure a Cloud NAT gateway in each region that you used earlier. The following diagram illustrates the architecture that you build in this section. The grey areas are discussed in other parts of this tutorial.

Create a NAT configuration using Cloud Router

You must create the Cloud Router instance in the same region as the instances that need to use Cloud NAT. The Cloud Router is only used to place NAT information onto the VMs; it's not used as part of the actual Cloud NAT gateway.

This configuration allows all instances in the region to use Cloud NAT for all primary and alias IP ranges. It also automatically allocates the external IP addresses for the NAT gateway. For more options, see the gcloud compute routers documentation.

Console

Go to the Cloud NAT page:

Click Get started or Create NAT gateway.

Set Gateway name to nat-config.

Set VPC network to default.

Set Region to us-central1.

Under Cloud Router, select Create new router, and then do the following:

- Set Name to nat-router-us-central1.

- Click Create.

Click Create.

Repeat the procedure, but substitute these values:

- Name: nat-router-europe-west1

- Region: europe-west1

gcloud

Create Cloud Router instances in each region:

    gcloud compute routers create nat-router-us-central1 \
        --network default \
        --region us-central1

    gcloud compute routers create nat-router-europe-west1 \
        --network default \
        --region europe-west1

Configure the routers for Cloud NAT:

    gcloud compute routers nats create nat-config \
        --router-region us-central1 \
        --router nat-router-us-central1 \
        --nat-all-subnet-ip-ranges \
        --auto-allocate-nat-external-ips

    gcloud compute routers nats create nat-config \
        --router-region europe-west1 \
        --router nat-router-europe-west1 \
        --nat-all-subnet-ip-ranges \
        --auto-allocate-nat-external-ips

Terraform

Copy the Terraform NAT configuration file to the current directory:

    cp nat/vpc_nat_gateways.tf .

Apply the Terraform configuration:

    terraform apply

Test Cloud NAT configuration

You can now test that you're able to make outbound requests from your VM instances to the internet.

Wait up to 3 minutes for the NAT configuration to propagate to the VM.

In Cloud Shell, connect to your instance using the tunnel you created:

    gcloud compute ssh www-1 --tunnel-through-iap

When you're logged in to the instance, use the curl command to make an outbound request:

    curl example.com

You see the following output:

    <html>
    <head>
    <title>Example Domain</title>
    ...
    ...
    ...
    </head>
    <body>
    <div>
    <h1>Example Domain</h1>
    <p>This domain is established to be used for illustrative examples in documents. You may use this
    domain in examples without prior coordination or asking for permission.
    </p>
    <p><a href="http://www.iana.org/domains/example">More information...</a></p>
    </div>
    </body>
    </html>

If the command is successful, you've validated that your VMs can connect to the internet using Cloud NAT.

Summary

Your instances can now make outgoing connections in order to download patches, connect with partners, or download resources.

In the next section, you add load balancing to your deployment and configure it to allow remote clients to initiate requests to your servers.

Creating an HTTP load-balanced service for serving

Using Cloud Load Balancing for your application has many advantages. It can provide seamless, scalable load balancing for over a million queries per second. It can also offload SSL overhead from your VMs, route queries to the best region for your users based on both location and availability, and support modern protocols such as HTTP/2 and QUIC.

For this tutorial, you take advantage of another key feature: global anycast IP connection proxying. This feature provides a single public IP address that's terminated on Google's globally distributed edge. Clients can then connect to resources hosted on private IP addresses anywhere in Google Cloud. This configuration helps protect instances from DDoS attacks and direct attacks. It also enables features such as Google Cloud Armor for even more security.

In this section of the tutorial, you do the following:

- Reset the VM instances to install the Apache web server.

- Create a firewall rule to allow access from load balancers.

- Allocate static, global IPv4 and IPv6 addresses for the load balancer.

- Create an instance group for your instances.

- Start sending traffic to your instances.

The following diagram illustrates the architecture that you build in this section. The grey areas are discussed in other parts of this tutorial.

Reset VM instances

When you created the VM instances earlier in this tutorial, they didn't have access to the internet, because no external IP address was assigned and Cloud NAT was not configured. Therefore, the startup script that installs Apache could not complete successfully.

The easiest way to re-run the startup scripts is to reset those instances so that the Apache webserver can be installed and used in the next section.

Console

In the Google Cloud console, go to the VM instances page:

Select www-1, www-2, www-3, and www-4.

Click the Reset button at the top of the page.

If you don't see a Reset button, click More actions and choose Reset.

Confirm the reset of the four instances by clicking Reset in the dialog.

gcloud

Reset the four instances:

    gcloud compute instances reset www-1 \
        --zone us-central1-b

    gcloud compute instances reset www-2 \
        --zone us-central1-b

    gcloud compute instances reset www-3 \
        --zone europe-west1-b

    gcloud compute instances reset www-4 \
        --zone europe-west1-b

Open the firewall

The next task is to create a firewall rule to allow traffic from the load balancers to your VM instances. This rule allows traffic from the Google Cloud address range that's used both by load balancers and health checks. The firewall rule uses the http-tag tag that you created earlier; the firewall rule allows traffic to the designated port to reach instances that have the tag.

Console

In the Google Cloud console, go to the Firewall policies page:

Click Create firewall rule.

Set Name to allow-lb-and-healthcheck.

Leave the VPC network as default.

Under Targets, select Specified target tags.

Set Target tags to http-tag.

Leave Source filter set to IP ranges.

Set Source IP Ranges to 130.211.0.0/22 and 35.191.0.0/16.

Set Allowed protocols and ports to tcp:80.

Click Create.

It might take a moment for the new firewall rule to be displayed in the console.

gcloud

Create a firewall rule named allow-lb-and-healthcheck:

    gcloud compute firewall-rules create allow-lb-and-healthcheck \
        --source-ranges 130.211.0.0/22,35.191.0.0/16 \
        --target-tags http-tag \
        --allow tcp:80

Terraform

Copy the Terraform load-balancing configuration files to the current directory:

    cp lb/* .

Apply the Terraform configuration:

    terraform apply

Allocate an external IP address for load balancers

If you're serving traffic to the internet, you need to allocate an external address for the load balancer. You can allocate an IPv4 address, an IPv6 address, or both. In this section, you reserve static IPv4 and IPv6 addresses suitable for adding to DNS.

There is no additional charge for public IP addresses, because they are used with a load balancer.

Console

In the Google Cloud console, go to the External IP addresses page:

Click Reserve static address to reserve an IPv4 address.

Set Name to lb-ip-cr.

Leave Type set to Global.

Click Reserve.

Click Reserve static address again to reserve an IPv6 address.

Set Name to lb-ipv6-cr.

Set IP version to IPv6.

Leave Type set to Global.

Click Reserve.

gcloud

Create a static IP address named lb-ip-cr for IPv4:

    gcloud compute addresses create lb-ip-cr \
        --ip-version=IPV4 \
        --global

Create a static IP address named lb-ipv6-cr for IPv6:

    gcloud compute addresses create lb-ipv6-cr \
        --ip-version=IPV6 \
        --global

Create instance groups and add instances

Google Cloud load balancers require instance groups to act as backends for traffic. In this tutorial, you use unmanaged instance groups for simplicity. However, you could also use managed instance groups to take advantage of features such as autoscaling, autohealing, regional (multi-zone) deployment, and auto-updating.

In this section, you create an instance group for each of the zones that you're using.

Console

In the Google Cloud console, go to the Instance groups page:

Click Create instance group.

On the left-hand side, click New unmanaged instance group.

Set Name to us-resources-w.

Set Region to us-central1.

Set Zone to us-central1-b.

Select Network (default) and Subnetwork (default).

Under VM instances, do the following:

- Click Add an instance, and then select www-1.

- Click Add an instance again, and then select www-2.

- Click Create.

Repeat this procedure to create a second instance group, but use the following values:

- Name: europe-resources-w

- Zone: europe-west1-b

- Instances: www-3 and www-4

In the Instance groups page, confirm that you have two instance groups, each with two instances.

gcloud

Create the us-resources-w instance group:

    gcloud compute instance-groups unmanaged create us-resources-w \
        --zone us-central1-b

Add the www-1 and www-2 instances:

    gcloud compute instance-groups unmanaged add-instances us-resources-w \
        --instances www-1,www-2 \
        --zone us-central1-b

Create the europe-resources-w instance group:

    gcloud compute instance-groups unmanaged create europe-resources-w \
        --zone europe-west1-b

Add the www-3 and www-4 instances:

    gcloud compute instance-groups unmanaged add-instances europe-resources-w \
        --instances www-3,www-4 \
        --zone europe-west1-b

Configure the load balancing service

Load balancer functionality involves several connected services. In this section, you set up and connect the services. The services you will create are as follows:

- Named ports, which the load balancer uses to direct traffic to your instance groups.

- A health check, which polls your instances to see if they are healthy. The load balancer sends traffic only to healthy instances.

- Backend services, which monitor instance usage and health. Backend services know whether the instances in the instance group can receive traffic. If the instances can't receive traffic, the load balancer redirects traffic, provided that instances elsewhere have sufficient capacity. A backend defines the capacity of the instance groups that it contains (maximum CPU utilization or maximum queries per second).

- A URL map, which parses the URL of the request and can forward requests to specific backend services based on the host and path of the request URL. In this tutorial, because you aren't using content-based forwarding, the URL map contains only the default mapping.

- A target proxy, which receives the request from the user and forwards it to the URL map.

- Two global forwarding rules, one each for IPv4 and IPv6, that hold the global external IP address resources. Global forwarding rules forward the incoming request to the target proxy.

Create the load balancer

In this section you create the load balancer and configure a default backend service to handle your traffic. You also create a health check.

Console

Start your configuration

In the Google Cloud console, go to the Load balancing page.

- Click Create load balancer.

- For Type of load balancer, select Application Load Balancer (HTTP/HTTPS) and click Next.

- For Public facing or internal, select Public facing (external) and click Next.

- For Global or single region deployment, select Best for global workloads and click Next.

- For Load balancer generation, select Global external Application Load Balancer and click Next.

- Click Configure.

Basic configuration

- Set Load balancer name to web-map.

Configure the load balancer

- In the left panel of the Create global external Application Load Balancer page, click Backend configuration.

- In the Create or select backend services & backend buckets list, select Backend services, and then Create a backend service. You see the Create Backend Service dialog box.

- Set Name to web-map-backend-service.

- Set the Protocol. For HTTP protocol, leave the values set to the defaults.

- For Backend type, select Instance Groups.

- Under Backends, set Instance group to us-resources-w.

- Click Add backend.

- Select the europe-resources-w instance group and then do the following:

  - For HTTP traffic between the load balancer and the instances, make sure that Port numbers is set to 80.

  - Leave the default values for the rest of the fields.

- Click Done.

- Under Health check, select Create a health check or Create another health check.

- Set the following health check parameters:

  - Name: http-basic-check

  - Protocol: HTTP

  - Port: 80

- Click Create.

gcloud

For each instance group, define an HTTP service and map a port name to the relevant port:

    gcloud compute instance-groups unmanaged set-named-ports us-resources-w \
        --named-ports http:80 \
        --zone us-central1-b

    gcloud compute instance-groups unmanaged set-named-ports europe-resources-w \
        --named-ports http:80 \
        --zone europe-west1-b

Create a health check:

    gcloud compute health-checks create http http-basic-check \
        --port 80

Create a backend service:

    gcloud compute backend-services create web-map-backend-service \
        --protocol HTTP \
        --health-checks http-basic-check \
        --global

You set the --protocol flag to HTTP because you're using HTTP to go to the instances. For the health check, you use the http-basic-check health check that you created earlier.

Add your instance groups as backends to the backend services:

    gcloud compute backend-services add-backend web-map-backend-service \
        --balancing-mode UTILIZATION \
        --max-utilization 0.8 \
        --capacity-scaler 1 \
        --instance-group us-resources-w \
        --instance-group-zone us-central1-b \
        --global

    gcloud compute backend-services add-backend web-map-backend-service \
        --balancing-mode UTILIZATION \
        --max-utilization 0.8 \
        --capacity-scaler 1 \
        --instance-group europe-resources-w \
        --instance-group-zone europe-west1-b \
        --global

Set host and path rules

Console

In the left panel of the Create global external Application Load Balancer page, click Host and path rules.

For this tutorial, you don't need to configure any host or path rules, because all traffic will go to the default rule. Therefore, you can accept the pre-populated default values.

gcloud

Create a default URL map that directs all incoming requests to all of your instances:

    gcloud compute url-maps create web-map \
        --default-service web-map-backend-service

Create a target HTTP proxy to route requests to the URL map:

    gcloud compute target-http-proxies create http-lb-proxy \
        --url-map web-map

Configure the frontend and finalize your setup

Console

- In the left panel of the Create global external Application Load Balancer page, click Frontend configuration.

- Set Name to http-cr-rule.

- Set Protocol to HTTP.

- Set IP version to IPv4.

- In the IP address list, select lb-ip-cr, the address that you created earlier.

- Confirm that Port is set to 80.

- Click Done.

- Click Add frontend IP and port.

- Set Name to http-cr-ipv6-rule.

- For Protocol, select HTTP.

- Set IP version to IPv6.

- In the IP address list, select lb-ipv6-cr, the other address that you created earlier.

- Confirm that Port is set to 80.

- Click Create.

- Click Done.

- In the left panel of the Create global external Application Load Balancer page, click Review and finalize.

- Compare your settings to what you intended to create.

If the settings are correct, click Create.

You are returned to the Load balancing page. After the load balancer is created, a green check mark next to it indicates that it's running.

gcloud

Get the static IP addresses that you created for your load balancer. Make a note of them, because you use them in the next step.

    gcloud compute addresses list

Create two global forwarding rules to route incoming requests to the proxy, one for IPv4 and one for IPv6. Replace lb_ip_address in the command with the static IPv4 address you created, and replace lb_ipv6_address with the IPv6 address you created.

    gcloud compute forwarding-rules create http-cr-rule \
        --address lb_ip_address \
        --global \
        --target-http-proxy http-lb-proxy \
        --ports 80

    gcloud compute forwarding-rules create http-cr-ipv6-rule \
        --address lb_ipv6_address \
        --global \
        --target-http-proxy http-lb-proxy \
        --ports 80

After you create the global forwarding rules, it can take several minutes for your configuration to propagate.

Test the configuration

In this section, you send an HTTP request to your instance to verify that the load-balancing configuration is working.

Console

In the Google Cloud console, go to the Load balancing page:

Select the load balancer named web-map to see details about the load balancer that you just created.

In the Backend section of the page, confirm that instances are healthy by viewing the Healthy column.

It can take a few moments for the display to indicate that the instances are healthy.

When the display shows that the instances are healthy, copy the IP:Port value from the Frontend section and paste it into your browser.

In your browser, you see your default content page.

gcloud

Get the IP addresses of your global forwarding rules, and make a note of them for the next step:

    gcloud compute forwarding-rules list

Use the curl command to test the response for various URLs for your services. Try both IPv4 and IPv6. For IPv6, you must put [] around the address, such as http://[2001:DB8::]/.

    curl http://ipv4-address

    curl -g -6 "http://[ipv6-address]/"
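Because the forwarding rules can take several minutes to propagate, a single curl request might fail even when the configuration is correct. The following sketch polls a URL until it returns HTTP 200. The function name `wait_for_http_ok` and its default of 30 attempts spaced 10 seconds apart are assumptions made for illustration, not values from this tutorial.

```shell
#!/bin/sh
# Poll a URL until it returns HTTP 200 or the attempts run out.
# Arguments: URL [MAX_ATTEMPTS] [DELAY_SECONDS]
wait_for_http_ok() {
  url=$1
  attempts=${2:-30}
  delay=${3:-10}
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -g allows bracketed IPv6 literals, -s hides progress output,
    # -o discards the body, and -w prints only the HTTP status code.
    code=$(curl -g -s -o /dev/null -w '%{http_code}' "$url")
    if [ "$code" = "200" ]; then
      echo "ready after $i attempt(s)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "no HTTP 200 from $url after $attempts attempts" >&2
  return 1
}
```

For example, `wait_for_http_ok http://ipv4-address/` waits for the IPv4 frontend, where ipv4-address is the address that you noted earlier.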

Summary

Your VMs can now serve traffic to the internet and can fetch data from the internet. You can also access them using SSH in order to perform administration tasks. All of this functionality is achieved using only private IP addresses, which helps protect them from direct attacks by not exposing IP addresses that are reachable from the internet.

Clean up

To avoid incurring charges to your Google Cloud account for the resources used in this tutorial, either delete the project that contains the resources, or keep the project and delete the individual resources.

Delete the project

- In the Google Cloud console, go to the Manage resources page.

- In the project list, select the project that you want to delete, and then click Delete.

- In the dialog, type the project ID, and then click Shut down to delete the project.
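If you prefer to keep the project and delete the individual resources, the following sketch prints one possible set of delete commands for review. It assumes the resource names from the gcloud steps in this tutorial (resources created through the console or Terraform might be named differently), and it assumes that deleting each Cloud Router also removes the NAT configuration attached to it. The function name `print_cleanup_commands` is hypothetical. The order runs from the load balancer frontend back to the VMs so that each resource is deleted before the resources it depends on.

```shell
#!/bin/sh
# Print (do not run) delete commands for the tutorial's resources.
# Names match the gcloud steps in this tutorial; adjust them if yours differ.
print_cleanup_commands() {
  cat <<'EOF'
gcloud compute forwarding-rules delete http-cr-rule http-cr-ipv6-rule --global --quiet
gcloud compute target-http-proxies delete http-lb-proxy --quiet
gcloud compute url-maps delete web-map --quiet
gcloud compute backend-services delete web-map-backend-service --global --quiet
gcloud compute health-checks delete http-basic-check --quiet
gcloud compute instance-groups unmanaged delete us-resources-w --zone us-central1-b --quiet
gcloud compute instance-groups unmanaged delete europe-resources-w --zone europe-west1-b --quiet
gcloud compute addresses delete lb-ip-cr lb-ipv6-cr --global --quiet
gcloud compute firewall-rules delete allow-ssh-from-iap allow-lb-and-healthcheck --quiet
gcloud compute routers delete nat-router-us-central1 --region us-central1 --quiet
gcloud compute routers delete nat-router-europe-west1 --region europe-west1 --quiet
gcloud compute instances delete www-1 www-2 --zone us-central1-b --quiet
gcloud compute instances delete www-3 www-4 --zone europe-west1-b --quiet
EOF
}
```

After you review the printed list, you could run it with `print_cleanup_commands | sh`. The `--quiet` flag suppresses confirmation prompts, so check the names first.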

What's next

- Creating Cloud Load Balancing shows you how to create HTTPS and HTTP2 load balancers.

- Setting up a private cluster shows you how to set up a private Google Kubernetes Engine cluster.

- Using IAP for TCP forwarding describes other uses for IAP for TCP, such as RDP or remote command execution.

- Using Cloud NAT provides examples for Google Kubernetes Engine and describes how to modify parameter details.

- Explore reference architectures, diagrams, and best practices about Google Cloud. Take a look at our Cloud Architecture Center.