Large Language Models (LLMs)

Large Language Models powered by world-class Google AI

Google Cloud brings innovations developed and tested by Google DeepMind to our enterprise-ready AI platform, so customers can start using them to build and deliver generative AI capabilities today, not tomorrow.


Overview

What is a large language model (LLM)?

A large language model (LLM) is a statistical language model, trained on a massive amount of data, that can be used to generate and translate text and other content, and perform other natural language processing (NLP) tasks.
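
To make "statistical language model" concrete, here is a toy sketch, not how production LLMs are built: a bigram model that estimates the probability of each next word from counts of adjacent word pairs in a tiny made-up corpus. LLMs learn far richer distributions with neural networks, but the underlying goal of predicting likely next tokens is the same. The function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram_model(corpus: List[str]) -> Dict[str, Dict[str, float]]:
    """Estimate P(next word | current word) from counts of adjacent word pairs."""
    pair_counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            pair_counts[current][nxt] += 1

    # Normalize each word's counts into a probability distribution.
    model: Dict[str, Dict[str, float]] = {}
    for word, counts in pair_counts.items():
        total = sum(counts.values())
        model[word] = {nxt: count / total for nxt, count in counts.items()}
    return model


def most_likely_next(model: Dict[str, Dict[str, float]], word: str) -> Optional[str]:
    """Return the most probable next word, or None if the word never preceded another."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return max(candidates, key=candidates.get)


corpus = [
    "the model generates text",
    "the model translates text",
    "the model generates code",
]
model = train_bigram_model(corpus)
print(model["model"])                    # {'generates': 0.666..., 'translates': 0.333...}
print(most_likely_next(model, "model"))  # generates
```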

LLMs are typically based on deep learning architectures, such as the Transformer, which Google researchers introduced in 2017, and can be trained on billions of words of text and other content.
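
The Transformer's central operation is scaled dot-product attention, softmax(QKᵀ / √d_k)·V: each query scores every key, the scores become weights, and the output is a weighted average of the values. The pure-Python sketch below shows only that single step on small list-based matrices; real Transformers add learned projections, multiple attention heads, and many stacked layers, and run on optimized tensor libraries. The function names are illustrative.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Compute softmax(Q K^T / sqrt(d_k)) V for small list-of-lists matrices."""
    d_k = len(k[0])
    outputs: Matrix = []
    for query in q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(query, key)) / math.sqrt(d_k) for key in k]
        weights = softmax(scores)
        # Output row = attention-weighted average of the value vectors.
        outputs.append([sum(w * row[j] for w, row in zip(weights, v)) for j in range(len(v[0]))])
    return outputs


out = scaled_dot_product_attention(
    q=[[1.0, 0.0]],
    k=[[1.0, 0.0], [0.0, 1.0]],
    v=[[10.0, 0.0], [0.0, 10.0]],
)
print(out)  # first component is larger: the query matches the first key more closely
```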

What are some examples of popular foundation models?

Vertex AI offers access to Gemini, a multimodal model from Google DeepMind. Gemini can understand virtually any input, combine different types of information, and generate almost any output. In Vertex AI, you can prompt and test Gemini with text, images, video, or code. Using Gemini’s advanced reasoning and state-of-the-art generation capabilities, developers can try sample prompts that extract text from images, convert image text to JSON, and generate answers about uploaded images to build next-generation AI applications.

What are the use cases for large language models?

Text-driven LLMs are used for a variety of natural language processing tasks, including text generation, machine translation, text summarization, question answering, and creating chatbots that can hold conversations with humans.

LLMs can also be trained on other types of data, including code, images, audio, and video. Google’s Codey (code), Imagen (images), and Chirp (speech) are examples of such models, which can enable new applications and help address some of the world’s most challenging problems.

What are the benefits of large language models?

LLMs are pre-trained on a massive amount of data, which makes them extremely flexible: a single model can be adapted to a variety of tasks, such as text generation, summarization, and translation. They also scale to new uses because they can be fine-tuned for specific tasks, which can improve their performance on those tasks.

What large language model services does Google Cloud offer?

Generative AI on Vertex AI: Gives you access to Google's large generative AI models so you can test, tune, and deploy them for use in your AI-powered applications.

Vertex AI Agent Builder: Enterprise search and chatbot applications with pre-built workflows for common tasks like onboarding, data ingestion, and customization.

Contact Center AI (CCAI): An intelligent contact center solution that includes Dialogflow, our conversational AI platform with both intent-based and LLM capabilities.

How It Works

LLMs work by using a massive amount of text data to train a neural network. The trained network is then used to generate text, translate text, or perform other tasks. In general, training on more high-quality data tends to make the network more accurate at its tasks.
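
Generation with a trained model is autoregressive: the model predicts a probability distribution over the next token, one token is chosen and appended, and the process repeats until a stop token or a length limit. In this minimal sketch, `toy_next_token_distribution` is a hypothetical hard-coded table standing in for the trained neural network, and decoding is greedy (always pick the most probable token).

```python
from typing import Callable, Dict, List

# Maps the tokens generated so far to a distribution over the next token.
NextTokenFn = Callable[[List[str]], Dict[str, float]]


def toy_next_token_distribution(tokens: List[str]) -> Dict[str, float]:
    """Hypothetical stand-in for a trained network; it only looks at the last token."""
    table = {
        "<start>": {"large": 0.9, "small": 0.1},
        "large": {"language": 0.8, "models": 0.2},
        "language": {"models": 0.95, "<end>": 0.05},
        "models": {"<end>": 0.7, "generate": 0.3},
    }
    return table.get(tokens[-1], {"<end>": 1.0})


def generate_greedy(next_token: NextTokenFn, max_new_tokens: int = 10) -> List[str]:
    """Append the most probable token until <end> is chosen or the limit is reached."""
    tokens = ["<start>"]
    for _ in range(max_new_tokens):
        dist = next_token(tokens)
        best = max(dist, key=dist.get)
        if best == "<end>":
            break
        tokens.append(best)
    return tokens[1:]  # drop the <start> marker


print(generate_greedy(toy_next_token_distribution))  # ['large', 'language', 'models']
```

Production systems often sample from the distribution (for example, with a temperature setting) rather than decoding greedily, which yields more varied output.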

Google Cloud has built products on its LLM technologies for a wide variety of use cases, which you can explore in the Common Uses section below.

Common Uses

Build a chatbot

Build an LLM-powered chatbot

Vertex AI Agents facilitates the creation of natural-sounding, human-like chatbots. Generative AI Agent is a feature within Vertex AI Agents that is built on top of functionality in Dialogflow CX.

With this feature, you can provide a website URL and/or any number of documents, and then Generative AI Agent parses your content and creates a virtual agent that is powered by data stores and LLMs.
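
One common way to ground a chatbot in your own content is retrieval-augmented generation: find the passages most relevant to the user's question and pass them to the LLM along with the question. The sketch below illustrates that general pattern with naive word-overlap ranking. It is an assumption-laden illustration, not how Vertex AI Agents or Dialogflow CX implement retrieval, and `retrieve` and `build_grounded_prompt` are hypothetical helpers.

```python
import re
from typing import List, Set, Tuple


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, chunks: List[str], top_k: int = 2) -> List[str]:
    """Rank chunks by word overlap with the question (a crude stand-in for a search index)."""
    q_words = tokenize(question)
    scored: List[Tuple[int, int, str]] = []
    for i, chunk in enumerate(chunks):
        scored.append((len(q_words & tokenize(chunk)), -i, chunk))
    scored.sort(reverse=True)  # highest overlap first; ties keep document order
    return [chunk for overlap, _, chunk in scored[:top_k] if overlap > 0]


def build_grounded_prompt(question: str, chunks: List[str]) -> str:
    """Assemble a prompt that asks the model to answer only from retrieved context."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks))
    return (
        "Answer the question using only the context below. "
        "If the answer is not in the context, say you don't know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


chunks = [
    "Our store opens at 9am on weekdays.",
    "Returns are accepted within 30 days.",
    "We ship to Canada and Mexico.",
]
print(build_grounded_prompt("When does the store open?", chunks))
```

The resulting prompt would then be sent to an LLM; a real data store would replace word overlap with semantic search over indexed documents.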

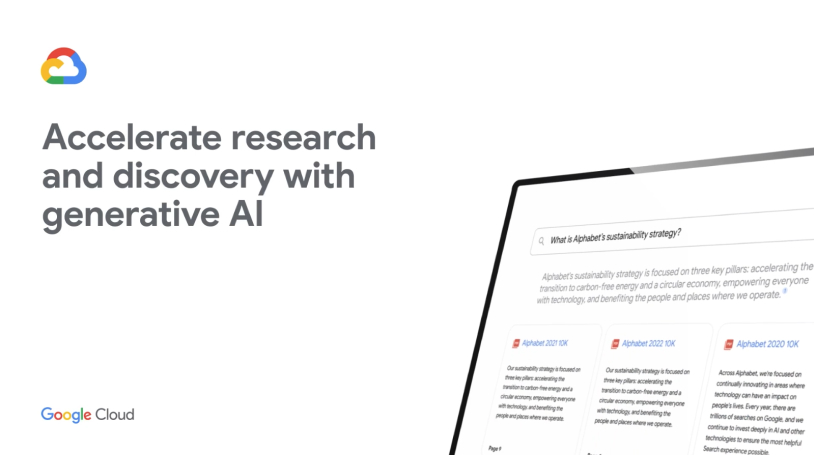

Research and information discovery

Find and summarize complex information in moments

Extract and summarize valuable information from complex documents, such as 10-K forms, research papers, third-party news services, and financial reports, with a click of a button. The demo shows how Enterprise Search uses natural language to understand semantic queries, offer summarized responses, and suggest follow-up questions.

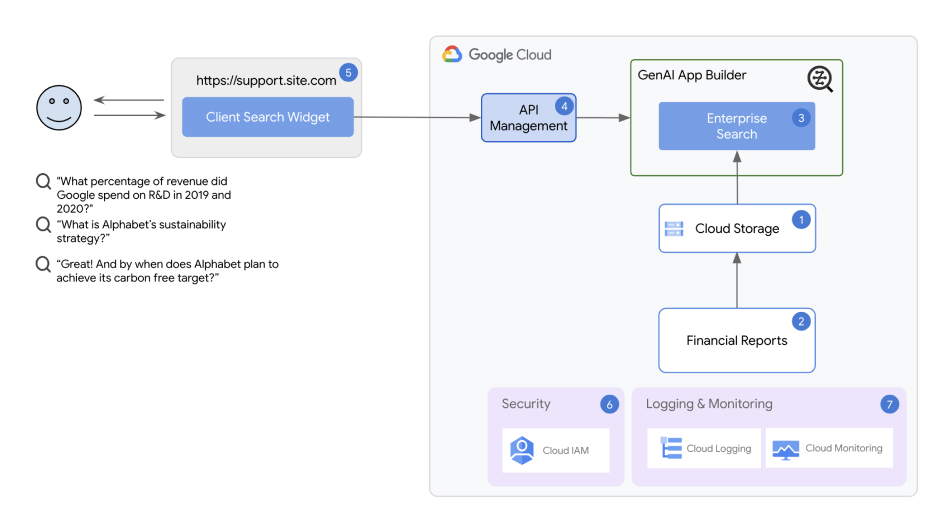

Research and information discovery solution architecture

The solution uses Vertex AI Agent Builder as its core component. With Vertex AI Agent Builder, even early-career developers can build and deploy chatbots and search applications in minutes.

Document summarization

Process and summarize large documents using Vertex AI LLMs

With Generative AI Document Summarization, deploy a one-click solution that helps detect text in raw files and automate document summaries. The solution establishes a pipeline that uses Cloud Vision Optical Character Recognition (OCR) to extract text from uploaded PDF documents in Cloud Storage, creates a summary from the extracted text with Vertex AI, and stores the searchable summary in a BigQuery database.
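
The pipeline's three stages (text extraction, summarization, storage) can be sketched as pluggable functions. In this hypothetical sketch, the `extract_text` argument stands in for Cloud Vision OCR, `naive_summarize` is a placeholder first-sentences summarizer where a Vertex AI model call would go, and `SummaryStore` is an in-memory stand-in for the BigQuery table. None of these are Google Cloud APIs.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class SummaryStore:
    """Hypothetical in-memory stand-in for the BigQuery table of summaries."""
    rows: List[Dict[str, str]] = field(default_factory=list)

    def insert(self, doc_id: str, summary: str) -> None:
        self.rows.append({"doc_id": doc_id, "summary": summary})

    def search(self, term: str) -> List[str]:
        """Return IDs of documents whose summary contains the term (case-insensitive)."""
        return [r["doc_id"] for r in self.rows if term.lower() in r["summary"].lower()]


def naive_summarize(text: str, max_sentences: int = 2) -> str:
    """Placeholder summarizer that keeps the first sentences; an LLM call would go here."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return " ".join(sentences[:max_sentences])


def run_pipeline(
    documents: Dict[str, bytes],
    extract_text: Callable[[bytes], str],
    summarize: Callable[[str], str],
    store: SummaryStore,
) -> None:
    """Extract text from each document, summarize it, and store the summary."""
    for doc_id, raw in documents.items():
        text = extract_text(raw)  # OCR step in the real pipeline
        store.insert(doc_id, summarize(text))


docs = {"sample-1.pdf": b"This is a sample document. It has three sentences. The last one is dropped."}
store = SummaryStore()
# Decoding bytes stands in for OCR here, since the sample "PDF" is already plain text.
run_pipeline(docs, extract_text=lambda raw: raw.decode("utf-8"), summarize=naive_summarize, store=store)
print(store.search("sample"))  # ['sample-1.pdf']
```

Passing each stage in as a function keeps the orchestration independent of the services behind it, so the stubs could be swapped for real OCR, model, and storage clients.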

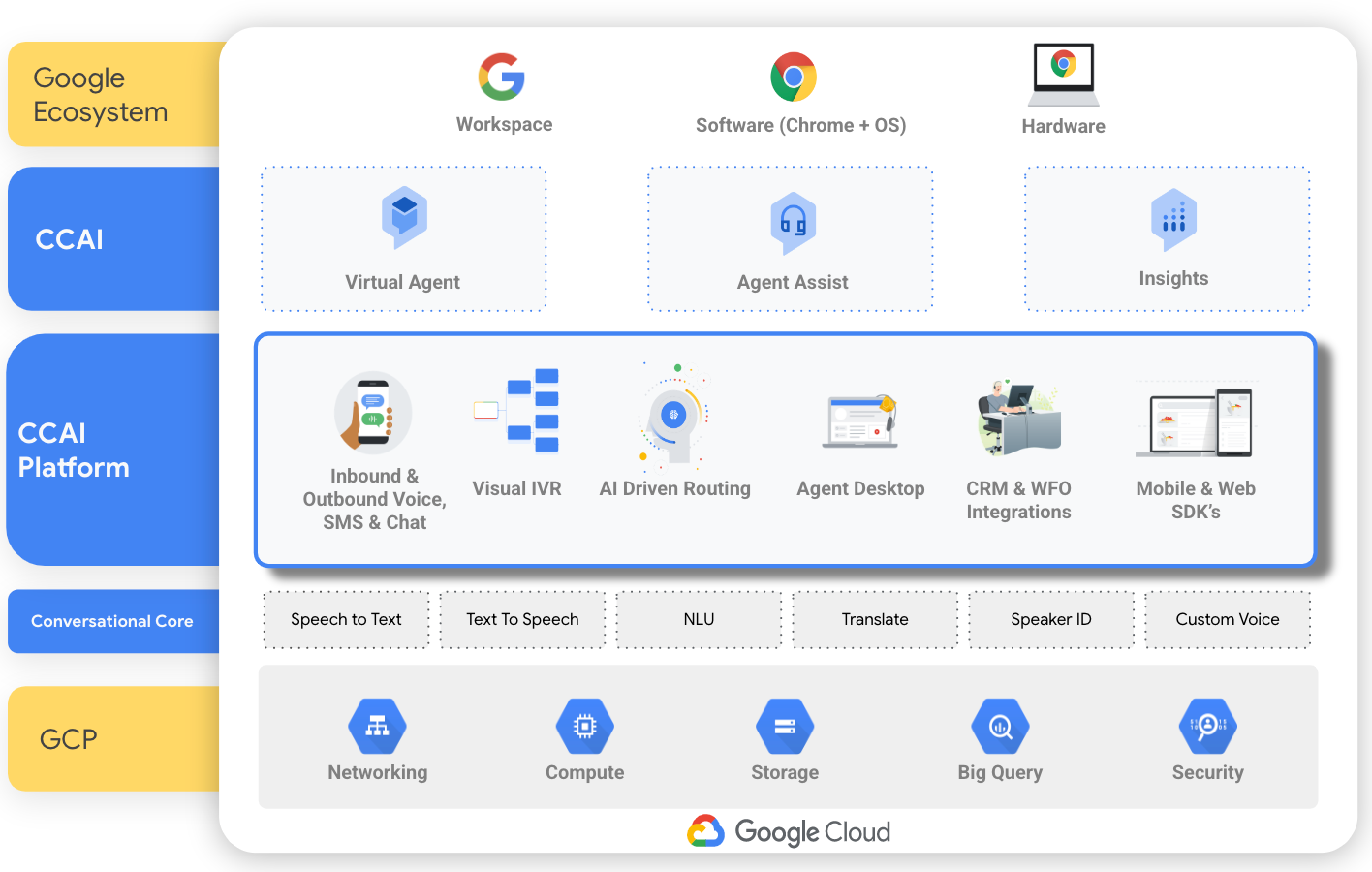

Build an AI-powered contact center

Build an AI-powered contact center with CCAI

Powered by AI technologies such as natural language processing, generative AI, and text and speech recognition, Contact Center AI (CCAI) offers a Contact Center as a Service (CCaaS) solution that helps you build a contact center from the ground up. It also includes individual tools that target specific aspects of a contact center, such as Dialogflow CX for building an LLM-powered chatbot, Agent Assist for real-time assistance to human agents, and CCAI Insights for identifying call drivers and sentiment.

Train custom LLMs

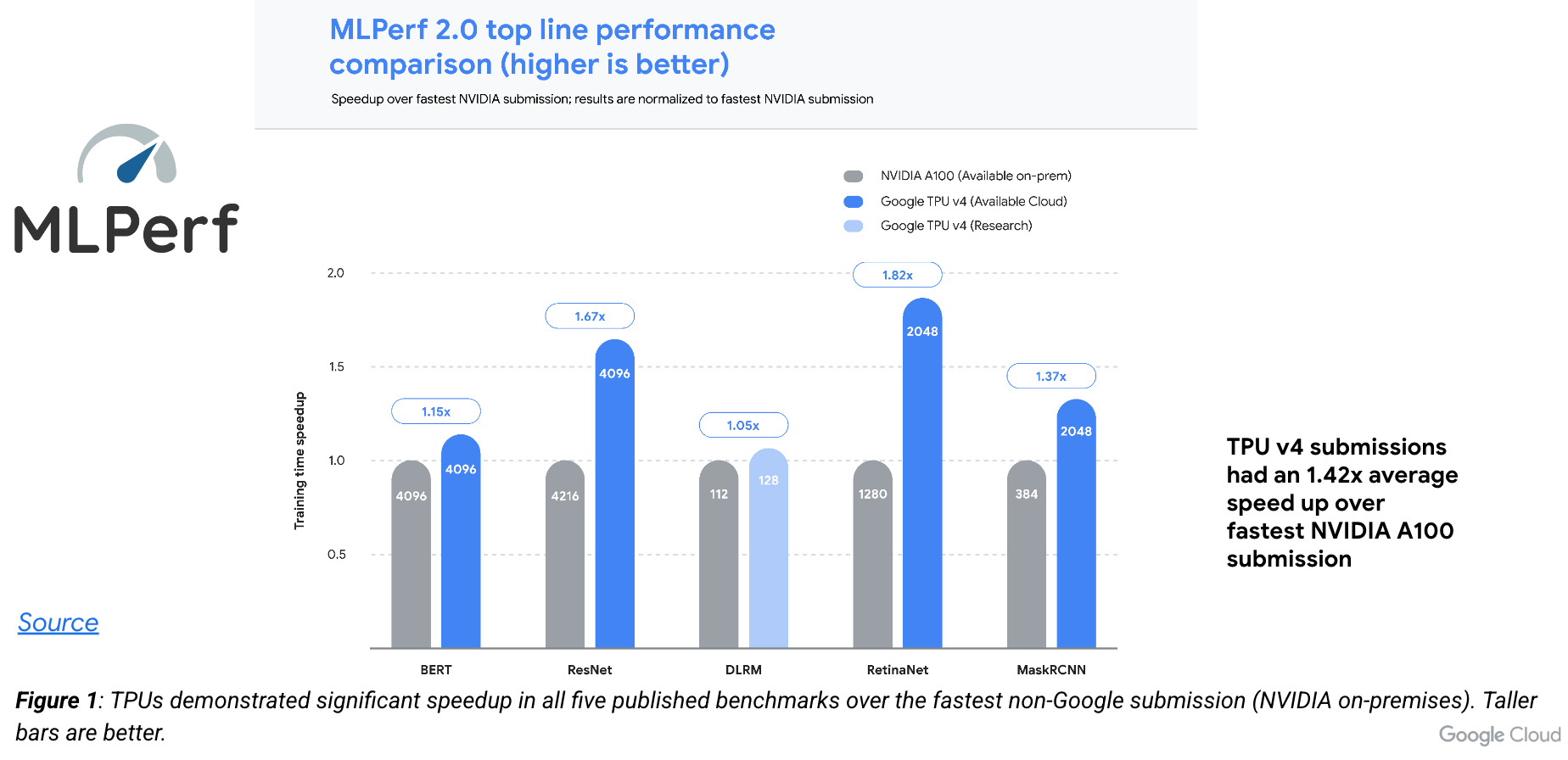

Use TPUs (Tensor Processing Units) to train LLMs at scale

Cloud TPUs are Google’s warehouse-scale supercomputers for machine learning. They are optimized for performance and scalability while minimizing total cost of ownership, and are well suited to training LLMs and generative AI models.

Cloud TPU v4 pods achieved the fastest training times on five MLPerf 2.0 benchmarks and form the world's largest publicly available ML hub, with up to 9 exaflops of peak aggregate performance.
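
As a rough sanity check on the 9-exaflop figure, the calculation below assumes publicly listed TPU v4 specifications of about 275 peak bf16 TFLOPS per chip and 4,096 chips per pod. Under those assumptions, one pod delivers roughly 1.13 exaflops, so 9 exaflops corresponds to about eight pods.

```python
# Assumed public TPU v4 figures: ~275 peak bf16 TFLOPS per chip, 4,096 chips per pod.
PEAK_TFLOPS_PER_CHIP = 275
CHIPS_PER_POD = 4096

# 1 exaflop = 1,000,000 teraflops.
pod_exaflops = PEAK_TFLOPS_PER_CHIP * CHIPS_PER_POD / 1e6
pods_for_9_exaflops = 9 / pod_exaflops

print(f"One v4 pod: {pod_exaflops:.2f} exaflops peak")  # One v4 pod: 1.13 exaflops peak
print(f"9 exaflops ≈ {pods_for_9_exaflops:.1f} pods")    # 9 exaflops ≈ 8.0 pods
```

Peak figures describe theoretical throughput; sustained training throughput on real workloads is lower.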
