BigQuery

From data warehouse to autonomous data and AI platform

BigQuery is the autonomous data to AI platform, automating the entire data life cycle, from ingestion to AI-driven insights, so you can go from data to AI to action faster.

Gemini in BigQuery features are now included in BigQuery pricing models.

Store 10 GiB of data and run up to 1 TiB of queries for free per month. New customers also get $300 in free credits to try BigQuery and other Google Cloud products.

Features

Unlock powerful AI with familiar SQL

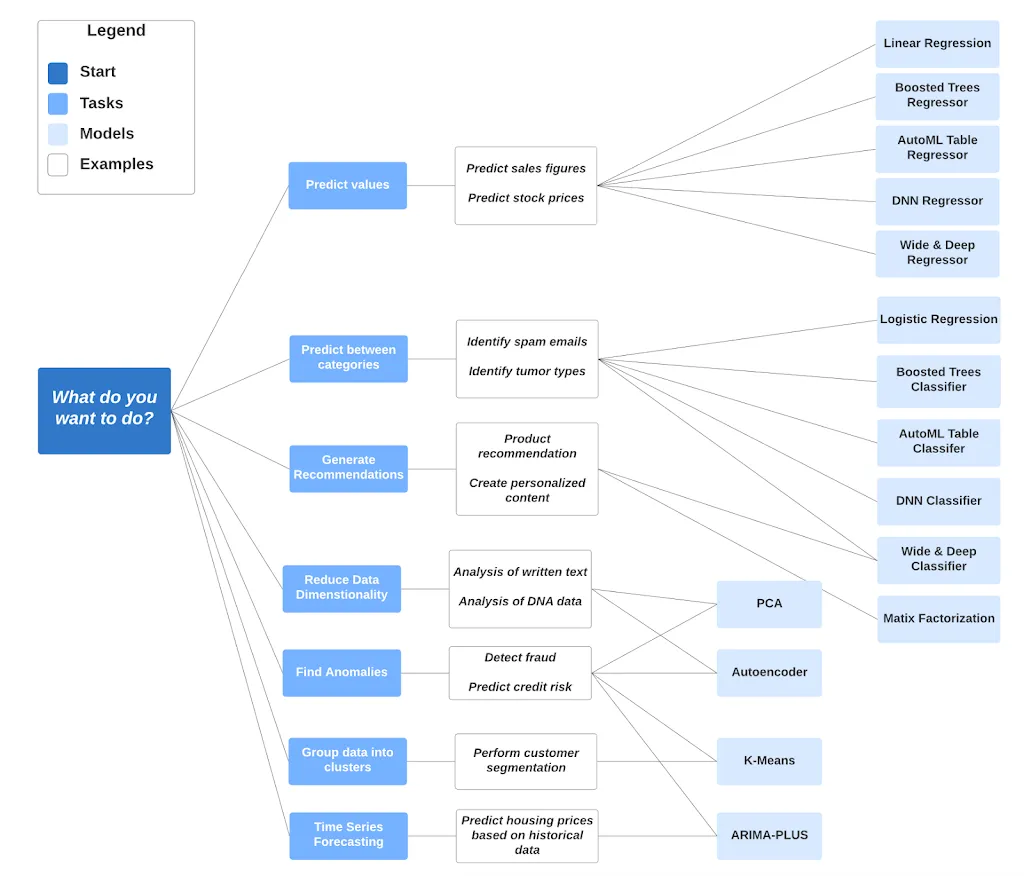

Connect your data to AI with BigQuery AI. Train, evaluate, and run ML models like linear regression, k-means clustering, or time series forecasts directly within BigQuery using familiar SQL commands, and easily integrate your models with Vertex AI Model Registry for advanced MLOps. Native AI functions make generative AI an integral part of SQL for tasks like text summarization and sentiment analysis, without requiring specialized tools or data movement. Embedding generation and vector, text, or hybrid search let you perform sophisticated context retrieval and build advanced search applications.
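As a rough illustration of training models with SQL, the Python sketch below builds BigQuery ML statements as plain strings for the three model types named above (`LINEAR_REG`, `KMEANS`, and `ARIMA_PLUS`), plus `ML.EVALUATE` and `ML.PREDICT` queries. The helper functions and every dataset, table, model, and column name are hypothetical placeholders. In practice you might submit the resulting SQL with the google-cloud-bigquery client's `Client.query` method or paste it into the BigQuery console.

```python
# Hypothetical helpers that build BigQuery ML statements as plain SQL strings.
# All dataset, table, model, and column names used below are placeholders.


def _format_option(key: str, value: object) -> str:
    """Render one OPTIONS entry; supports str, list of str, and numbers."""
    if isinstance(value, str):
        return f"{key} = '{value}'"
    if isinstance(value, list):
        items = ", ".join(f"'{item}'" for item in value)
        return f"{key} = [{items}]"
    return f"{key} = {value}"


def create_model_sql(model_id: str, source_table: str, model_type: str, **options: object) -> str:
    """Build a CREATE OR REPLACE MODEL statement that trains on a whole table."""
    entries = [_format_option("model_type", model_type)]
    entries += [_format_option(key, value) for key, value in options.items()]
    body = ",\n  ".join(entries)
    return (
        f"CREATE OR REPLACE MODEL `{model_id}`\n"
        f"OPTIONS (\n  {body}\n)\n"
        f"AS SELECT * FROM `{source_table}`"
    )


def evaluate_sql(model_id: str) -> str:
    """Build a query that returns evaluation metrics for a trained model."""
    return f"SELECT * FROM ML.EVALUATE(MODEL `{model_id}`)"


def predict_sql(model_id: str, input_table: str) -> str:
    """Build a query that runs batch predictions over a table."""
    return f"SELECT * FROM ML.PREDICT(MODEL `{model_id}`, TABLE `{input_table}`)"


if __name__ == "__main__":
    # Linear regression predicting a numeric label column.
    print(create_model_sql("my_dataset.revenue_model", "my_dataset.sales",
                           "LINEAR_REG", input_label_cols=["revenue"]))
    # k-means clustering into four segments.
    print(create_model_sql("my_dataset.segments", "my_dataset.customers",
                           "KMEANS", num_clusters=4))
    # Time series forecasting over a date column and a value column.
    print(create_model_sql("my_dataset.forecast", "my_dataset.daily_sales",
                           "ARIMA_PLUS", time_series_timestamp_col="day",
                           time_series_data_col="revenue"))
    print(evaluate_sql("my_dataset.revenue_model"))
    print(predict_sql("my_dataset.revenue_model", "my_dataset.new_sales"))
```

Generating the SQL as text keeps the sketch free of credentials and network calls; the point is that training, evaluation, and prediction are each a single SQL statement.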

Simplify workflows with agents

Get AI-powered assistance and automation for all data users across all analytical workflows. Automate data preparation, error detection, transformations, and pipeline building with Data Engineering Agent. Streamline the entire ML lifecycle, from exploratory data analysis to running predictions, with Data Science Agent. Make BigQuery insights accessible to all users with Conversational Analytics Agent, which lets them ask questions in plain language and receive answers. For custom agent development, use foundational APIs, ADK integrations, and the BigQuery MCP server.

Your choice of open source and open formats

Run serverless Spark alongside SQL workloads in BigQuery, with unified security, runtime metadata, and governance. BigQuery's fully managed capabilities combined with managed Apache Iceberg tables powered by BigLake enable streaming, advanced analytics, and AI use cases, making it easy to work with open formats.

Built-in data to AI governance

BigQuery provides contextual governance powered by Dataplex Universal Catalog. Key capabilities such as automatic metadata harvesting, data profiling, data quality, and lineage are integrated and available directly in the BigQuery experience. You can use gen AI-powered capabilities such as semantic search, metadata augmentation, and data insights to discover and document all your BigQuery assets and get insights from them faster.

Built for enterprise scale and efficiency

BigQuery’s unique architecture decouples storage and compute for petabyte-scale analysis while optimizing costs with compressed storage, compute autoscaling, flexible pricing, and more. Under the hood, BigQuery employs a vast set of Google infrastructure technologies like Borg, Colossus, Jupiter, and Dremel. For mission-critical workloads, BigQuery also provides managed disaster recovery in the event of a total regional outage, relying on cross-region dataset replication.

Real-time analytics with streaming data pipelines

Use Managed Service for Apache Kafka to build and run real-time streaming applications. Whether you choose easy, SQL-based streaming with BigQuery continuous queries, popular open source Kafka platforms, or advanced multimodal data streaming and ML with Dataflow (including support for Iceberg), you can make real-time data and AI a reality.

Enterprise capabilities

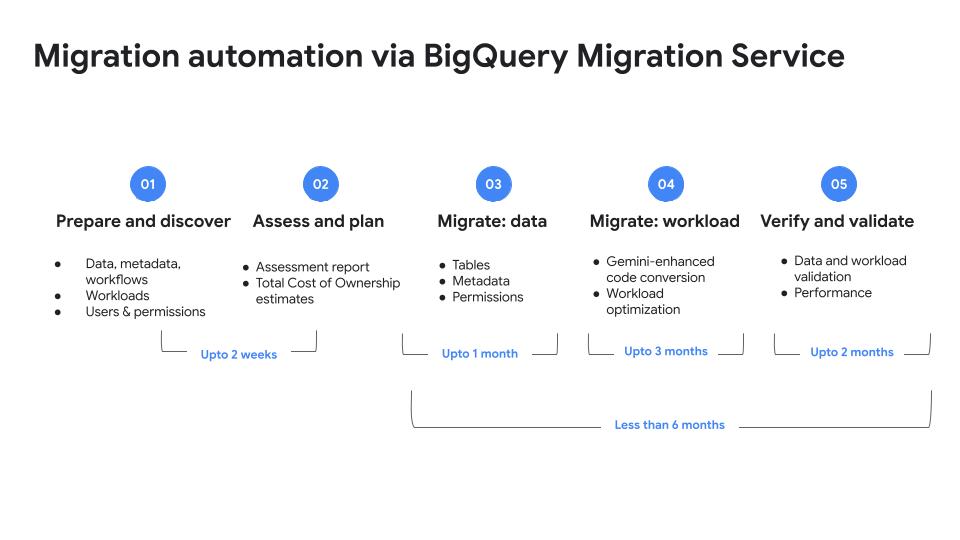

BigQuery continues to build new enterprise capabilities. Cross-region disaster recovery provides managed failover in the unlikely event of a regional disaster, as well as data backup and recovery features to help you recover from user errors. BigQuery operational health monitoring provides organization-wide views of your BigQuery operational environment. BigQuery Migration Service provides a comprehensive collection of tools for migrating to BigQuery from legacy or cloud data warehouses.

How It Works

See how BigQuery can help you unify your data and connect it with groundbreaking AI. Learn how to access unstructured data like images, PDFs, and text to populate an ecommerce website's metadata. A task that would otherwise take hours becomes easy with BigQuery.

Common Uses

Data science

Simplify data to AI workflows

Streamline end-to-end data science workflows in Colab Enterprise notebooks with built-in agents, or use open source Python libraries through BigQuery DataFrames. Bring your preferred processing engine: SQL or open source frameworks like Apache Spark. Train, evaluate, and deploy ML models directly within BigQuery, or use pre-trained models like TimesFM through SQL. Conveniently store features for models built and used in BigQuery. You can version, evaluate, and deploy models for online prediction by registering them in Vertex AI, all through a single interface.

Data warehouse migration

Migrate data warehouses to BigQuery

Solve for today’s analytics demands and tomorrow's AI use cases by migrating your data warehouse to BigQuery. Streamline your migration path from Netezza, Oracle, Redshift, Teradata, Snowflake, or Databricks to BigQuery using the free and fully managed BigQuery Migration Service.

Data integration and ELT

Bring any data into BigQuery

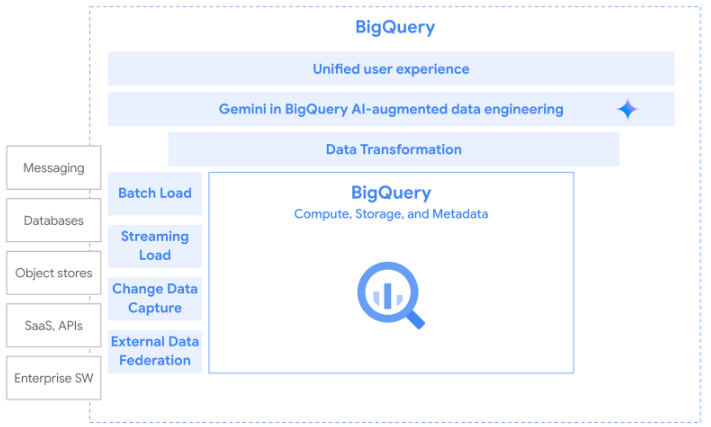

ELT is the recommended pattern for bringing data into BigQuery, and many tools offer flexibility for data integration. For batch loads, use BigQuery Data Transfer Service (DTS) to automate bulk loading of data from supported data sources into BigQuery. For streaming loads, Pub/Sub BigQuery subscriptions write Pub/Sub messages to an existing BigQuery table as they are received. For change data capture (CDC), Datastream provides non-intrusive CDC from databases into BigQuery. Finally, you can use federation to query a number of external data sources without moving the data.

Real-time analytics

Event-driven analysis

Gain a competitive advantage by responding to business events in real time with event-driven analysis. Built-in streaming capabilities automatically ingest streaming data and make it immediately available to query. This allows you to stay agile and make business decisions based on the freshest data. Or use Dataflow to enable fast, simplified streaming data pipelines for a comprehensive solution.

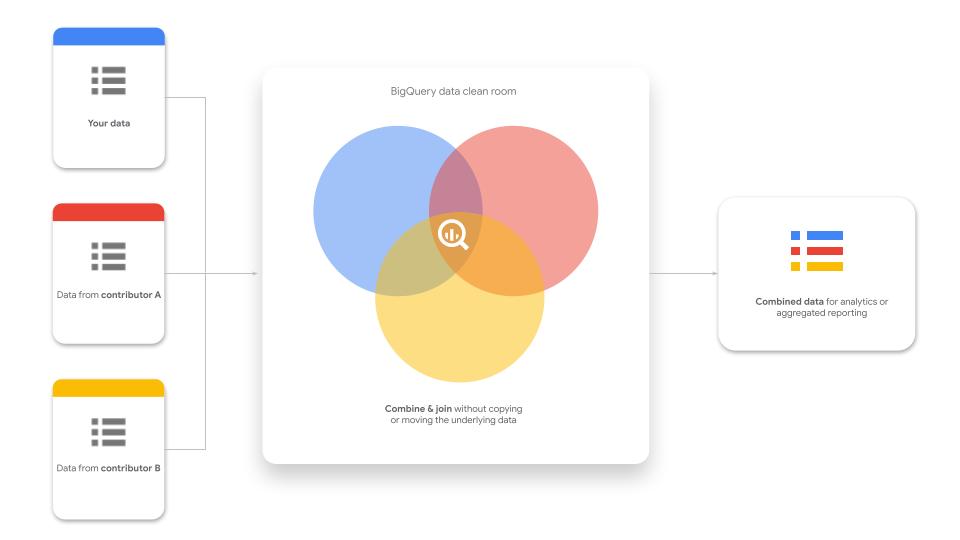

Data clean rooms

BigQuery data clean rooms for privacy-centric data sharing

Create a low-trust environment right within BigQuery where you and your partners can collaborate without copying or moving the underlying data. You can perform privacy-enhancing transformations in BigQuery SQL interfaces and monitor usage to detect privacy threats on shared data. Benefit from BigQuery's scale and built-in BI and AI/ML without needing to manage any infrastructure.
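One example of a privacy-enhancing transformation is an aggregation threshold: aggregated results are released only for groups that contain at least a minimum number of distinct entities, such as users. The pure-Python sketch below illustrates that idea on in-memory rows. It is not how BigQuery implements clean room analysis rules, and the function name, field names, and threshold value are assumptions chosen for illustration.

```python
from collections import defaultdict
from collections.abc import Hashable, Iterable, Mapping

# Illustrative only: a pure-Python aggregation threshold, not BigQuery's
# implementation. Field names and the threshold value are hypothetical.


def thresholded_distinct_counts(
    rows: Iterable[Mapping[str, Hashable]],
    group_key: str,
    privacy_unit_key: str,
    threshold: int,
) -> dict:
    """Count distinct privacy units (for example, users) per group and
    suppress any group with fewer than `threshold` distinct units."""
    units_per_group: dict = defaultdict(set)
    for row in rows:
        units_per_group[row[group_key]].add(row[privacy_unit_key])
    return {
        group: len(units)
        for group, units in units_per_group.items()
        if len(units) >= threshold
    }


if __name__ == "__main__":
    shared_rows = [
        {"campaign": "spring", "user_id": "u1"},
        {"campaign": "spring", "user_id": "u2"},
        {"campaign": "spring", "user_id": "u3"},
        {"campaign": "spring", "user_id": "u3"},  # same user, counted once
        {"campaign": "summer", "user_id": "u4"},  # too few users: suppressed
    ]
    # prints {'spring': 3}
    print(thresholded_distinct_counts(shared_rows, "campaign", "user_id", threshold=3))
```

Counting distinct privacy units rather than rows matters: a single user who appears many times should not be enough to push a small group over the threshold.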

Geospatial analytics

Unlock planetary-scale insights with rich, easy-to-use geospatial datasets

Access a portfolio of rich geospatial data, powerful cloud computing, and built-in AI tools that make it easier for you to unlock insights that lead to more informed and faster business and sustainability decisions, without needing remote sensing or GIS expertise. Seamlessly integrate analysis-ready imagery and datasets from Earth Engine, and Places, Routes, Street View, and satellite data from Google Maps Platform into your existing BigQuery workflows, using data clean rooms.

Pricing

How BigQuery pricing works

BigQuery pricing is based on compute (analysis), storage, additional services, and data ingestion and extraction. Batch loading and exporting data are free.

| Services and usage | Subscription type | Price (USD) |
|---|---|---|
| Free tier | The BigQuery free tier gives customers 10 GiB of storage, up to 1 TiB of on-demand query processing per month, and other resources. | Free |
| Compute (analysis) | On-demand: generally gives you access to up to 2,000 concurrent slots, shared among all queries in a single project. | Starting at $6.25 per TiB scanned. The first 1 TiB per month is free. |
| Compute (analysis) | Editions (Standard, Enterprise, and Enterprise Plus): include Gemini in BigQuery AI-assistance features. | Starting at $0.04 per slot hour |
| Storage | Logical storage: based on the uncompressed bytes used in tables or table partitions modified in the last 90 days. | Starting at $0.01 per GiB. The first 10 GiB is free each month. |
| Storage | Physical storage: based on the compressed bytes used in tables or table partitions not modified for 90 consecutive days. | Starting at $0.02 per GiB. The first 10 GiB is free each month. |
| Data ingestion | Batch loading: import table data from Cloud Storage. | Free when using the shared slot pool |
| Data ingestion | Streaming inserts: you are charged for rows that are successfully inserted, and each row is billed at a minimum size of 1 KB. | $0.01 per 200 MiB |
| Data ingestion | BigQuery Storage Write API: data loaded into BigQuery is subject to BigQuery storage pricing or Cloud Storage pricing. | $0.025 per GiB. The first 2 TiB per month are free. |
| Data extraction | Batch export: export table data to Cloud Storage. | Free when using the shared slot pool |
| Data extraction | Streaming reads: use the BigQuery Storage Read API to perform streaming reads of table data. | Starting at $1.10 per TiB read |
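To make the per-unit prices in the pricing table concrete, the Python sketch below estimates monthly costs for on-demand queries, editions slot hours, streaming inserts, and the Storage Write and Read APIs. It assumes the "starting at" list prices from the table, treats 1 KB as 1,024 bytes, bills fractional units proportionally, and assumes no free allowance has already been consumed elsewhere. Actual prices vary by region and edition, so treat the function names and results as hypothetical estimates, not a billing calculator.

```python
# Rough monthly cost estimator using the "starting at" list prices from the
# pricing table. Assumptions: USD list prices, no regional or edition-specific
# differences, fractional units billed proportionally, and 1 KB = 1,024 bytes.

MIB = 2**20  # bytes in one MiB

ON_DEMAND_PER_TIB = 6.25         # on-demand compute, per TiB scanned
ON_DEMAND_FREE_TIB = 1.0         # first 1 TiB scanned per month is free
EDITIONS_PER_SLOT_HOUR = 0.04    # lowest editions rate shown in the table
STREAMING_PER_200_MIB = 0.01     # streaming inserts, per 200 MiB
STREAMING_MIN_ROW_BYTES = 1024   # each inserted row is billed at >= 1 KB
WRITE_API_PER_GIB = 0.025        # Storage Write API, per GiB
WRITE_API_FREE_GIB = 2 * 1024    # first 2 TiB per month are free
READ_API_PER_TIB = 1.10          # Storage Read API, per TiB read


def on_demand_query_cost(tib_scanned: float) -> float:
    """On-demand compute: charge per TiB scanned beyond the monthly free TiB."""
    billable_tib = max(0.0, tib_scanned - ON_DEMAND_FREE_TIB)
    return billable_tib * ON_DEMAND_PER_TIB


def editions_cost(slots: int, hours: float) -> float:
    """Editions compute: charge per slot hour (slots multiplied by hours)."""
    return slots * hours * EDITIONS_PER_SLOT_HOUR


def streaming_insert_cost(row_sizes_bytes: list[int]) -> float:
    """Streaming inserts: bill each successfully inserted row at >= 1 KB."""
    billable_bytes = sum(max(size, STREAMING_MIN_ROW_BYTES) for size in row_sizes_bytes)
    return billable_bytes / (200 * MIB) * STREAMING_PER_200_MIB


def write_api_cost(gib_written: float) -> float:
    """Storage Write API: charge per GiB beyond the monthly free 2 TiB."""
    billable_gib = max(0.0, gib_written - WRITE_API_FREE_GIB)
    return billable_gib * WRITE_API_PER_GIB


def read_api_cost(tib_read: float) -> float:
    """Storage Read API: charge per TiB read."""
    return tib_read * READ_API_PER_TIB


if __name__ == "__main__":
    print(f"On-demand, 5 TiB scanned:        ${on_demand_query_cost(5):.2f}")
    print(f"Editions, 100 slots for 30 days: ${editions_cost(100, 24 * 30):.2f}")
    print(f"Streaming, 1M rows of 200 bytes: ${streaming_insert_cost([200] * 1_000_000):.2f}")
    print(f"Write API, 3 TiB written:        ${write_api_cost(3 * 1024):.2f}")
    print(f"Read API, 2 TiB read:            ${read_api_cost(2):.2f}")
```

For example, scanning 5 TiB on demand leaves 4 billable TiB after the free tier, or $25.00. The streaming case shows the effect of the 1 KB minimum: 200-byte rows are billed as if they were 1,024 bytes each.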

Learn more about BigQuery pricing.

Business Case

Tens of thousands of customers choose BigQuery to build their data to AI platforms

Mattel saves time and money by connecting its data to AI in BigQuery.

TJ Allard, Lead Data Scientist, Mattel

"BigQuery and Vertex AI bring all our data and AI together into a single platform. This has transformed how we take action on customer feedback from a lengthy manual process, to a simple natural language query in seconds, allowing us to get to customer insights in minutes instead of months.”

Read more customer stories

Deutsche Telekom designs the telco of tomorrow with BigQuery

10 months to innovation: Definity's leap to data agility with BigQuery and Vertex AI

Yassir migrated from Databricks to BigQuery and improved the performance and efficiency of its machine learning processes

See the BigQuery difference

AI-powered innovation with conversational, intelligent search, and all-new agentic experiences, enriched with a semantic layer for accuracy.

Unified data to AI platform for seamless analytics, AI co-processing, and real-time insights on multimodal data, with unified governance, runtime metadata, and security.

Flexible and future-proof with low-cost AI and seamless interoperability with third-party and open source tools.

Partners & Integration

Work with a partner with BigQuery expertise

ETL and data integration

Reverse ETL and MDM

BI and data visualization

Data governance and security

Connectors and developer tools

Machine learning and advanced analytics

Data quality and observability

Consulting partners

From data ingestion to visualization, many partners have integrated their data solutions with BigQuery. Listed above are partner integrations through Google Cloud Ready - BigQuery.

Visit our partner directory to learn about these BigQuery partners.

FAQ

What makes BigQuery different from other enterprise data warehouse alternatives?

BigQuery is Google Cloud’s fully managed, completely serverless enterprise data warehouse. BigQuery supports all data types, works across clouds, and has built-in machine learning and business intelligence, all within a unified platform. With native Vertex AI integration, you can easily connect your data to Google's industry-leading AI without leaving BigQuery.

What is an enterprise data warehouse?

An enterprise data warehouse is a system used for the analysis and reporting of structured and semi-structured data from multiple sources. Many organizations are moving from traditional data warehouses that are on-premises to cloud data warehouses, which provide more cost savings, scalability, and flexibility.

How secure is BigQuery?

BigQuery offers robust security, governance, and reliability controls, with high availability and a 99.99% uptime SLA. Your data is encrypted by default, and you can also use customer-managed encryption keys.

How can I get started with BigQuery?

There are a few ways to get started with BigQuery. New customers get $300 in free credits to spend on BigQuery. All customers get 10 GiB of storage and up to 1 TiB of queries free per month, not charged against their credits. You can get these credits by signing up for the BigQuery free trial. Not ready yet? You can use the BigQuery sandbox without a credit card to see how it works.

What is the BigQuery sandbox?

The BigQuery sandbox lets you try out BigQuery without a credit card. You stay within BigQuery’s free tier automatically, and you can use the sandbox to run queries and analysis on public datasets to see how it works. You can also bring your own data into the BigQuery sandbox for analysis. There is an option to upgrade to the free trial, where new customers get a $300 credit to try BigQuery.

What are the most common ways companies use BigQuery?

Companies of all sizes use BigQuery to consolidate siloed data into one location so they can analyze it and get insights from all of their business data. This allows companies to make decisions in real time, streamline business reporting, and incorporate machine learning into data analysis to predict future business opportunities.