Running computer-aided engineering workloads on Google Cloud

Computer-aided engineering (CAE) encompasses a wide range of applications, including structural analysis, fluid dynamics, crash safety, and thermal analysis, to name a few. All of those use cases require significant computational resources to handle the complex simulations of the involved physics and potentially large input and output data. In this technical reference guide we describe how to leverage Google Cloud to accelerate CAE workflows by providing high performance computing (HPC) resources.

CAE Solution - In a Nutshell

Google Cloud’s HPC offering provides a powerful and scalable platform for demanding CAE workflows. It combines the performance of traditional HPC systems with the advantages of a global-scale, elastic, and flexible cloud:

- Scalability: Google Cloud's elastic infrastructure allows users to scale their CAE workloads up or down on-demand, providing the flexibility to handle fluctuating computational needs.

- Performance: Google Cloud's latest-generation CPUs and GPUs, combined with high-performance networking, ensure that CAE simulations run efficiently, minimizing turnaround times and accelerating design iterations.

- Flexibility: Google Cloud offers a wide range of VM instances optimized for different CAE applications and workloads, allowing users to select the optimal compute resources for their specific needs.

- Ease-of-use: Google's CAE solution makes it easy for you to get started and experience the transformative power of accelerated simulations and analyses.

A Reference Architecture for CAE Workloads

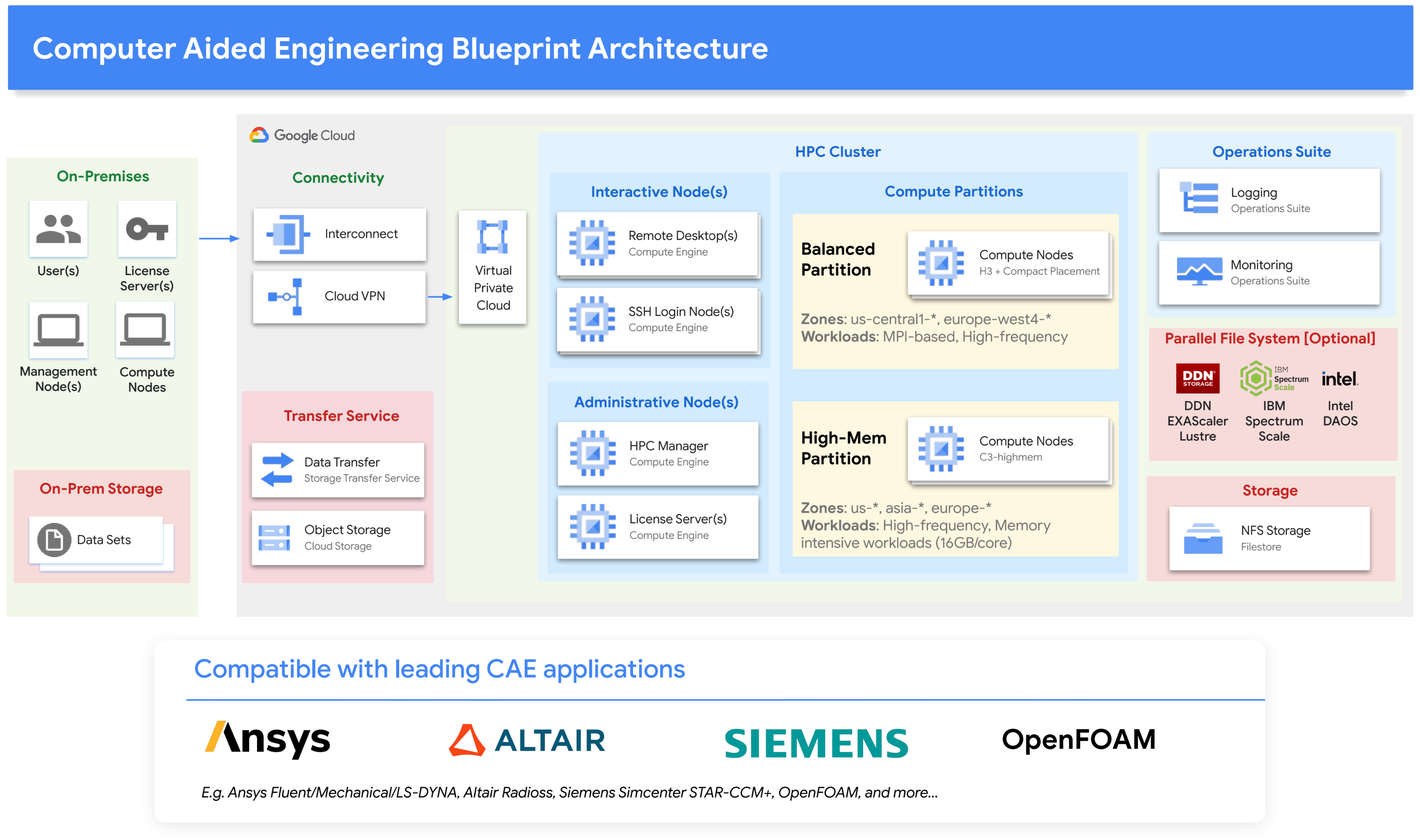

To simplify using Google Cloud for CAE workflows, we have assembled the right cloud components to meet the requirements of computationally-intensive CAE workloads. Specifically, our CAE solution is architected around Google Cloud’s H3 and C3 VM families, based on the latest Intel Xeon platform. These VM families provide high memory bandwidth for a balanced memory/flop ratio, which is ideally suited for CAE. The solution caters to tightly-coupled MPI applications as well as memory-demanding workloads with up to 16GB/core. It includes various storage options addressing a broad range of IO requirements. For resource management, it supports schedulers such as SchedMD’s Slurm and Altair's PBS Professional.

The following architecture diagram illustrates the solution:

Solution Components

The CAE solution's architecture is composed of several key components, including compute, networking, storage, and Google’s open source Cloud HPC Toolkit.

Compute

The CAE solution is built on Google Compute Engine. Compute Engine offers a variety of machine types, including machine types with GPUs. Compute Engine is a good choice for CAE workloads that require high performance and flexibility, due to its specialized VM types and high-performance networking:

H3 VMs: A balanced partition well suited for CAE workloads is built from Google’s HPC-optimized H3 VM, which uses Intel’s latest Sapphire Rapids generation with 4 GB/core and scales to thousands of cores (via MPI).

C3 VMs: A high-memory partition well suited for memory-intensive CAE workloads is built from Google’s C3 VM using Intel’s latest Sapphire Rapids generation with 16 GB/core.

Placement Policies: Placement policies ensure that VMs are created in close proximity to each other, reducing inter-VM communication latency and improving overall performance.

HPC VM Image: We provide HPC VM images that configure standard Linux operating system distributions for optimal performance on Google Cloud.

General purpose VMs: Used for login nodes, license servers, and miscellaneous tasks.

Remote desktop VMs: Used for remote desktop sessions and remote visualization.

Networking

Google Virtual Private Cloud: Google VPC is a virtual version of a physical network for your project.

Google Cloud Connectivity: Google Cloud Connectivity allows you to connect and extend on-premises networks to Google Cloud with high availability and low latency.

Google Cloud VPN: Google Cloud VPN securely connects your peer network to a Virtual Private Cloud (VPC) network.

gVNIC: Google Virtual NIC is a virtual network interface card (NIC) that offers high performance and low latency among compute VMs. gVNIC is a good choice for CAE workloads that require high network performance.

Storage

Google Filestore: Filestore is a fully managed NFS service that offers high performance and low latency. Filestore is a good choice for data that is shared or needs to be visible across the network such as applications or home directories.

Parallel File Systems: Google Cloud partners with a number of storage vendors to offer a variety of parallel file systems for HPC workloads. These partners include NetApp, DDN EXAScaler, Sycomp Spectrum Scale, and Weka.

Google Storage Transfer Service: The managed Storage Transfer Service can transfer data quickly and securely between object and file storage across Google Cloud, Amazon, Azure, on-premises, and more.

Google Cloud Storage: Cloud Storage is a scalable and durable object storage service. Cloud Storage is a good choice for storing large amounts of data and can also be used as a staging area for data transfer.

Tools

Google Cloud HPC Toolkit: Google’s open source Cloud HPC Toolkit makes it easy for customers and partners to deploy repeatable turnkey HPC environments following Google Cloud’s HPC best practices. Google’s CAE solution comes with an HPC Toolkit blueprint that lets you instantiate an HPC environment in Google Cloud, ready to run CAE workloads.

Getting Started with CAE on Google Cloud

Considerations for running CAE workloads in the cloud

When running CAE workloads in the cloud, there are a number of factors to consider, including:

- Compatibility: ensure that CAE applications have been tested for compatibility with the cloud-based HPC environment.

- VM Selection: choose the right VM type that runs ISV software optimally—many CAE workloads lend themselves to compute architectures with high amounts of memory bandwidth and an overall balanced flops:memory bandwidth ratio.

- Scalability: consider that larger CAE workloads may need to run across multiple VMs, requiring support for MPI and dense deployments of the VMs.

- Throughput: assess how many workloads need to run concurrently and consider auto-scaling scheduling models that can grow and shrink the cloud resources according to demand.

- Cost: commercial ISV software packages for CAE often are licensed “by core”. This is an important factor in the total cost and can influence the choice of a VM type.

- Usage/Consumption: the cloud offers elasticity that on-premises installations cannot. Explore the right balance between on-demand and committed use, or even Spot VMs.

- Ease of use: make sure that the cloud HPC solution you choose is easy to use and manage.
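The cost consideration above can be made concrete with a small back-of-the-envelope model. The following Python sketch adds a per-core license fee to the infrastructure cost of a job. The VM names, prices, core counts, and runtimes are hypothetical placeholders chosen for illustration, not Google Cloud or ISV pricing.

```python
from dataclasses import dataclass


@dataclass
class VmOption:
    """A candidate VM shape. All values used below are hypothetical."""
    name: str
    cores: int                 # physical cores used (and licensed) by the solver
    vm_price_per_hour: float   # infrastructure price of the whole VM
    runtime_hours: float       # measured wall-clock time of one job


def job_cost(vm: VmOption, license_price_per_core_hour: float) -> float:
    """Total cost of one job: infrastructure plus per-core license usage."""
    infra = vm.vm_price_per_hour * vm.runtime_hours
    licenses = license_price_per_core_hour * vm.cores * vm.runtime_hours
    return infra + licenses


# Hypothetical comparison: a cheaper but slower VM versus a faster one.
slow = VmOption("vm-a", cores=60, vm_price_per_hour=3.0, runtime_hours=10.0)
fast = VmOption("vm-b", cores=88, vm_price_per_hour=5.0, runtime_hours=4.0)

for vm in (slow, fast):
    print(f"{vm.name}: {job_cost(vm, license_price_per_core_hour=0.5):.2f}")
```

With per-core licensing, the license term often dominates the total, so a VM that finishes the job sooner can be cheaper overall even when its hourly price is higher.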

General Purpose CAE Reference Blueprint

As part of the Google Cloud CAE solution, we developed a general purpose CAE reference architecture and blueprint, which can readily be used with Google’s Cloud HPC Toolkit to provision the CAE architecture in Google Cloud. We have verified the compatibility and performance of several leading ISV applications, including:

- Ansys Fluent

- Ansys Mechanical

- Ansys LS-DYNA

- Siemens Simcenter STAR-CCM+

- Altair Radioss

- OpenFOAM

See the benchmarks section below for the performance of those software packages.

The general purpose CAE Reference Architecture blueprint enables users to instantly bring up a cloud environment that is compatible with a wide range of CAE applications and workflows. It is a good option for users who want to have flexibility in choosing their CAE software and who want to manage their own HPC environment. It also serves as a starting point for system integrators, leveraging Google’s best-practices for running CAE simulations on Google Cloud.

Application-specific Blueprints

Google Cloud also offers a number of application-specific blueprints for popular CAE software. These blueprints are pre-configured to provide optimal performance for the specific CAE software. Software with specific blueprints include:

The application-specific blueprints are a good option for users who want to get started with CAE quickly and easily. The blueprints provide a pre-configured environment that is optimized for the specific CAE software, so users do not need to worry about configuring the environment themselves.

Partner Offerings

Google Cloud partners with a number of HPC-as-a-Service providers, such as TotalCAE, Rescale, Parallel Works, Eviden Nimbix, Penguin Computing, and NAG, as well as with CAE ISVs such as Altair. These providers offer a variety of managed HPC solutions for CAE, including pre-configured CAE software environments, support for specific CAE applications, and expert consulting services. These offerings are a good option for users who want a managed HPC solution for CAE.

Architectural Alternatives and Best Practices

Google Compute Engine, Google Kubernetes Engine, Google Batch.

While the CAE solution is built on Google Compute Engine, it is similarly possible to build it on top of other compute frameworks, such as Google Kubernetes Engine or Google Batch. Kubernetes Engine is a managed Kubernetes service that can be used to run CAE workloads in a containerized environment. Kubernetes Engine is a good choice for CAE workloads that require scalability and portability. Google Batch is a managed service for running batch jobs. Batch is a good choice for CAE workloads that are not containerized, and do not require significant customization or tuning.

You can read more about architecting HPC environments in our technical guide to the Cloud HPC Toolkit (now called Cluster Toolkit), which covers the breadth of options in infrastructure (compute, network, storage), system software (schedulers, storage), and architectural considerations.

Best Practices

There are a number of best practices you can follow to optimize the performance of your CAE workloads on Google Cloud. For example, you can use placement policies to ensure that your workloads are placed on compute resources that are close to each other, which can reduce latency and improve performance. You can also use the Cloud HPC Toolkit to optimize your workloads.

Our “Best practices for running HPC workloads” guide documents how to improve MPI performance. Both Open MPI and Intel MPI have been tuned and optimized for Google Cloud performance out of the box, in partnership with Google Cloud’s HPC networking engineers.

Benchmarks

The general purpose CAE reference blueprint, with its H3 VMs, has been benchmarked with major CAE ISV applications on their standard benchmark models.

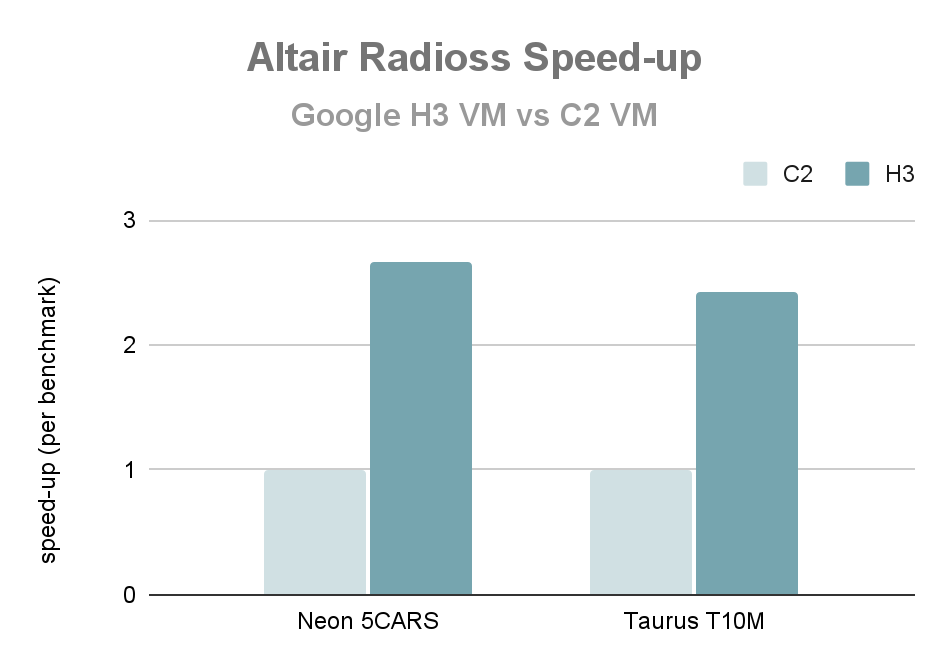

Single node performance: Altair Radioss

The following chart shows the single node performance of running Altair Radioss 2022.3 on the H3 VM relative to the C2 VM (whole VM in each case). The speed-up over two commonly used benchmarks (Neon 5CARS and T10M) for Altair Radioss is 2.6x.

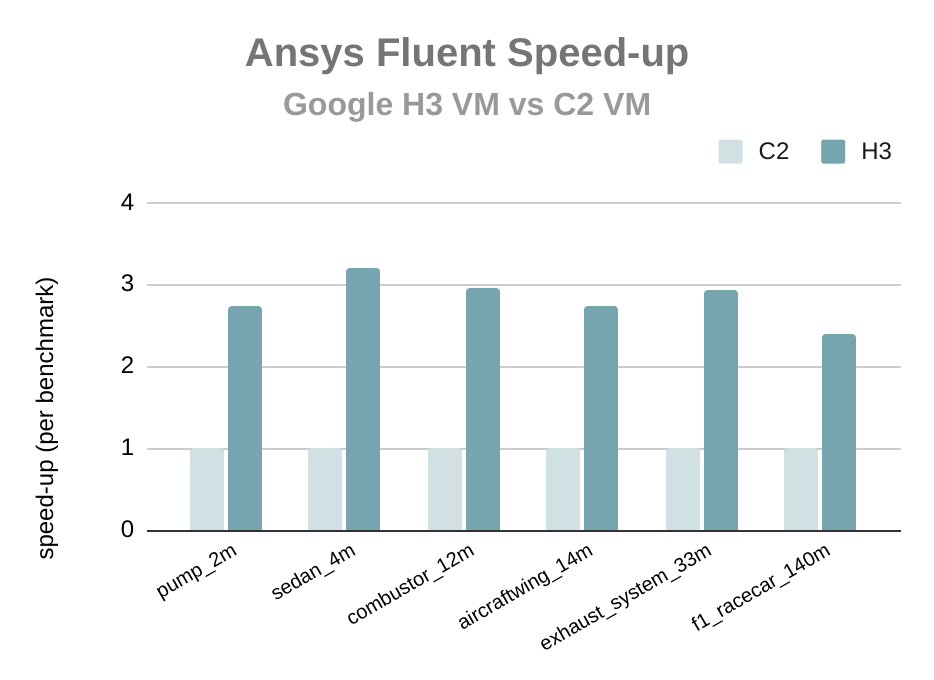

Single node performance: Ansys Fluent

The following chart shows the performance when running Ansys Fluent 2022 R2 on the H3 VM relative to the C2 VM using the CAE Solution blueprint (whole VM in each case). The speed-up over commonly used benchmarks for Ansys Fluent is 2.8x and demonstrates that the Google H3 platform is very well-suited for computational fluid dynamics workloads.

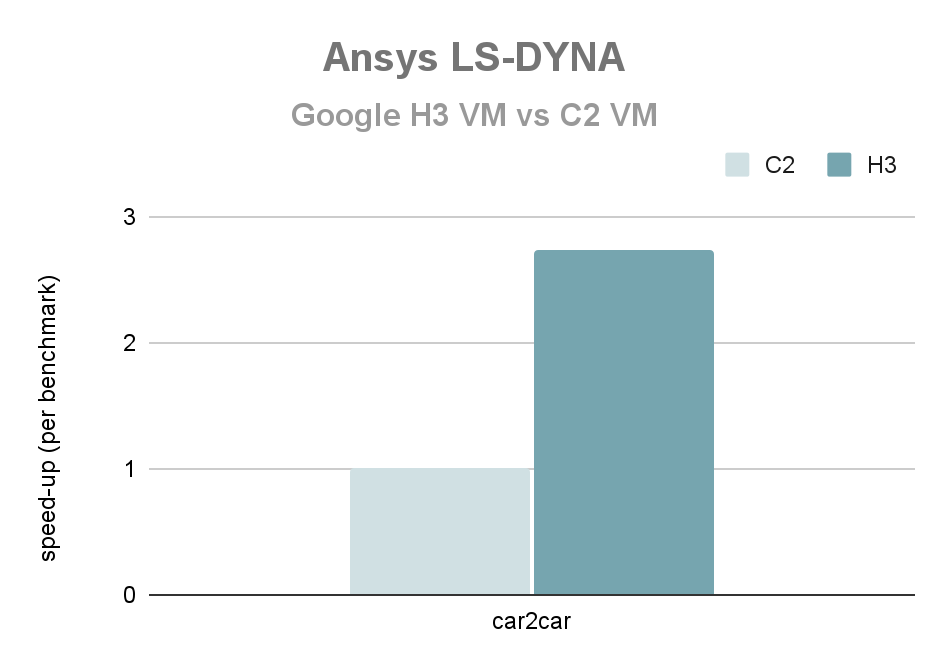

Single node performance: Ansys LS-DYNA

The following chart shows the single node performance of running Ansys LS-DYNA R9.3.1 on the H3 VM relative to the C2 VM (whole VM in each case). The speed-up for the car2car crash benchmark for Ansys LS-DYNA is 2.7x.

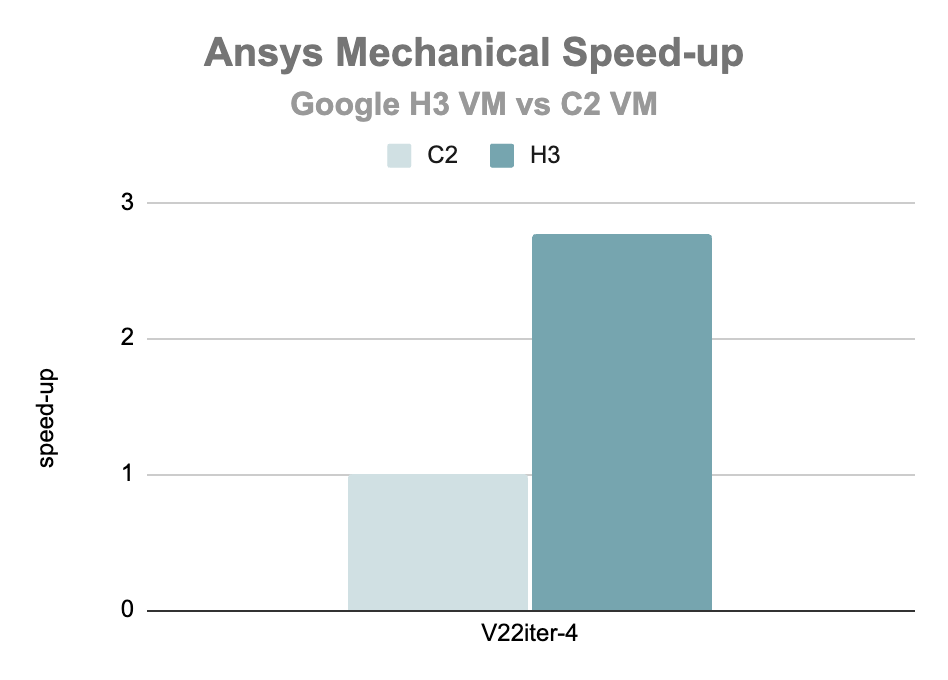

Single node performance: Ansys Mechanical

The following chart shows the single node performance of running Ansys Mechanical 2022 R1 on the H3 VM relative to the C2 VM (whole VM in each case). The speed-up for the V2iter-4 benchmark for Ansys Mechanical is 2.8x.

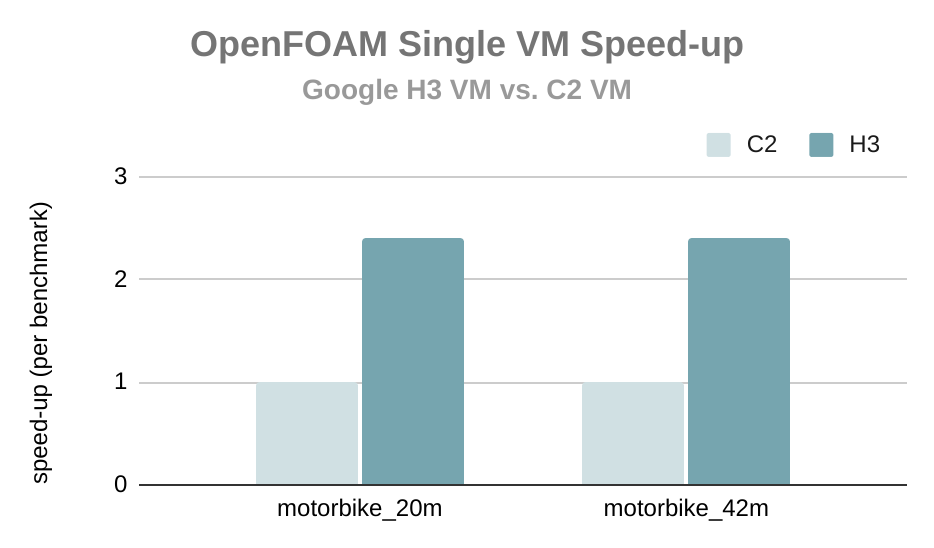

Single node performance: OpenFOAM

The following chart shows the single node performance of running The OpenFOAM Foundation’s OpenFOAM v7 on the H3 VM relative to the C2 VM (whole VM in each case). The speed-up over commonly used benchmarks for OpenFOAM is 2.4x.

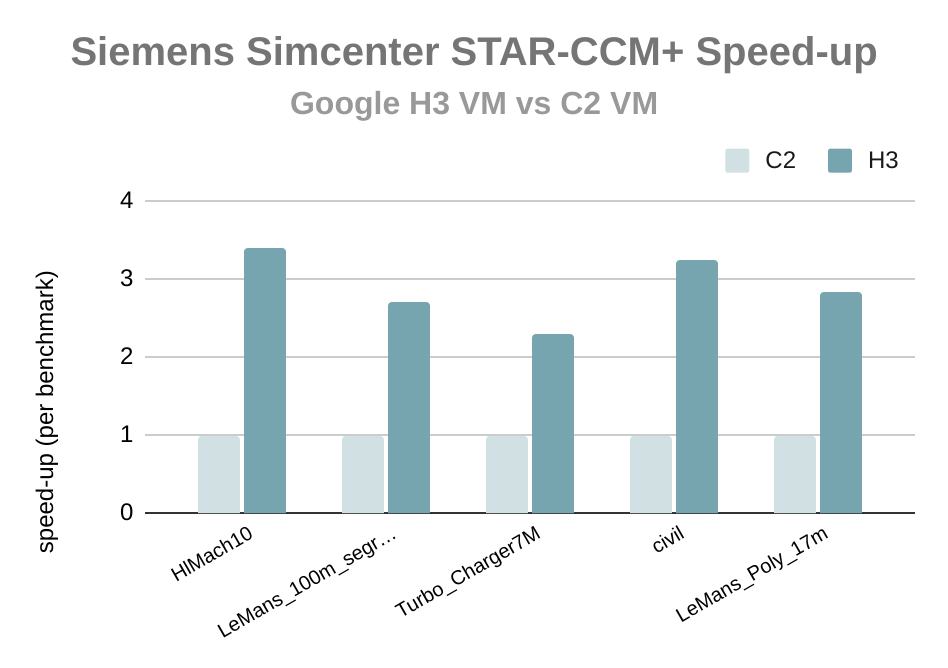

Single node performance: Siemens Simcenter STAR-CCM+

The following chart shows the single node performance of running Siemens Simcenter STAR-CCM+ 18.02.008 on the H3 VM relative to the C2 VM (whole VM in each case). The speed-up over commonly used benchmarks for Siemens Simcenter STAR-CCM+ is 2.9x.

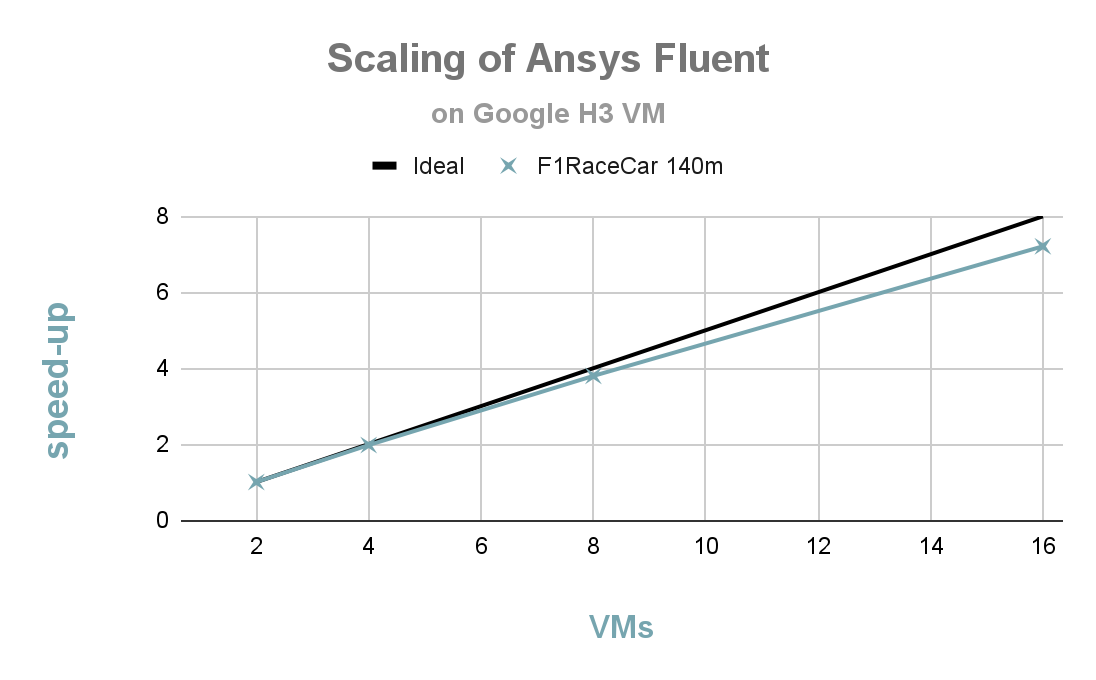

Strong scaling: Ansys Fluent

The following chart shows the strong scaling behavior of Ansys Fluent 2022 R2 on the F1 RaceCar (140m) benchmark using H3 VMs. You can see good scaling from 2 to 16 nodes, with a parallel efficiency of >90% at 16 nodes (1408 cores).
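For readers who want to reproduce this kind of figure from their own runs, the sketch below shows one common way to compute strong-scaling parallel efficiency relative to the smallest run. The timings are hypothetical values for illustration and are not the measurements behind the chart. The cores-per-node constant follows from the 1408 cores on 16 nodes quoted above.

```python
H3_CORES_PER_NODE = 88  # 1408 cores / 16 nodes, as quoted above


def parallel_efficiency(base_nodes, base_time, nodes, time):
    """Strong-scaling efficiency relative to a baseline run.

    Ideal scaling keeps nodes * time constant, so the efficiency is the
    baseline node-time divided by the node-time of the larger run.
    """
    return (base_nodes * base_time) / (nodes * time)


# Hypothetical timings (seconds per solver iteration), for illustration only.
runs = {2: 80.0, 4: 41.0, 8: 21.0, 16: 11.0}
base_nodes = min(runs)

for nodes, seconds in sorted(runs.items()):
    eff = parallel_efficiency(base_nodes, runs[base_nodes], nodes, seconds)
    print(f"{nodes:>2} nodes ({nodes * H3_CORES_PER_NODE:>4} cores): {eff:.0%}")
```

An efficiency of 1.0 means the run time halves every time the node count doubles. Values below 1.0 indicate communication or load-imbalance overhead.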

Benefits of Google Cloud for CAE Workloads

Google Cloud offers a number of benefits for running CAE workloads, including:

Performance

Google Cloud's HPC infrastructure is designed to provide high performance for CAE workloads. The latest CPUs and GPUs are available, and the networking infrastructure is designed to provide low latency.

Scalability

Google Cloud's HPC infrastructure is designed to be scalable. CAE workloads can be scaled up or down as needed, and resources can be added or removed quickly and easily.

Flexibility

Google Cloud's HPC infrastructure is designed to be flexible. CAE workloads can be run on a variety of compute options, and a variety of storage options are available.

Ease of use

Google Cloud's HPC infrastructure is designed to be easy to use. The Cloud HPC Toolkit provides a set of tools and libraries that can be used to optimize CAE workloads on Google Cloud.

Cost-effectiveness

Google Cloud's HPC infrastructure is designed to be cost-effective. A variety of pricing options are available, and Spot VMs can be used to reduce costs.

Further Optimizations of Performance and Costs

Choosing the right machine type

Google Cloud offers a wide range of machine types, each with different CPU, GPU, and memory configurations. Choosing the right machine type for your workload can have a significant impact on performance and cost. For example, the H3 VM is a good choice for per-core licensed CAE applications because of its high ratio of memory bandwidth to cores. With 4 GB/core, it also provides sufficient memory for diverse workloads. For particularly memory-demanding workloads, such as structural mechanics, the C3 VM in its high-memory configuration provides 16 GB/core.
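As a rough illustration of this sizing rule, the following Python sketch picks the partition with the smallest memory per core that still fits a job. The partition labels are hypothetical. The GB/core figures are the ones quoted in this guide. The function is a simplified heuristic, not an official sizing tool.

```python
# Memory per core as quoted in this guide; the labels are hypothetical.
PARTITIONS = {
    "h3 (balanced)": 4,       # GB per core
    "c3 (high-memory)": 16,   # GB per core
}


def pick_partition(job_memory_gb: float, cores: int) -> str:
    """Return the partition with the least memory per core that still fits."""
    needed = job_memory_gb / cores
    for name, gb_per_core in sorted(PARTITIONS.items(), key=lambda kv: kv[1]):
        if needed <= gb_per_core:
            return name
    raise ValueError(f"job needs {needed:.1f} GB/core; spread it over more cores")


print(pick_partition(job_memory_gb=300, cores=88))
print(pick_partition(job_memory_gb=600, cores=44))
```

Preferring the smaller memory-per-core option first reflects the licensing argument above: for per-core licensed software, memory that a job does not need should not drive the choice of VM.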

Choosing the right storage

Google Cloud offers a variety of storage options, each with different performance and cost characteristics. Choosing the right storage option for your workload can have a significant impact on performance and cost. There are also several types of storage in an HPC environment to consider.

Typical HPC environments host at least two types of storage with different requirements: home storage and scratch storage. The choice of storage type for each depends on the specific needs of the HPC workload. For example, a workload that generates a large amount of scratch data may require a high-performance scratch storage solution, while a workload that accesses common data across multiple compute nodes simultaneously may require a parallel file system.

In addition to home storage and scratch storage, HPC environments may also use other types of storage, such as archive storage, which is used for storing data that is not frequently accessed. Archive storage can be provided most cost-effectively by Cloud Storage.

Home Storage

Home storage is typically used for storing shared user files, primarily in the “/home” directory, such as configurations, scripts, and post-processing data. This storage is mounted in the same place across the cluster to allow common access to this namespace. Home storage is typically persistent and built on the NFS protocol.

In a Google Cloud HPC environment, home storage can be provided by Google services like Filestore or partner offerings like NetApp.

- Google Cloud Filestore is a fully managed file storage service that provides high performance and low latency access to data. Filestore is a good choice for storing home storage data that is frequently accessed.

- NetApp Cloud Volumes Service is a fully managed file storage service that provides high performance and low latency access to data. NetApp Cloud Volumes Service is a good choice for storing home storage data that is frequently accessed.

Scratch Storage

Scratch storage is typically used for storing temporary files, such as intermediate results and simulation output data. These can be shared or not shared across different nodes in the HPC environment. Scratch storage is typically not persistent. Scratch storage is typically built on higher performance storage systems than home storage, such as local flash storage or Parallel File Systems.

In a Google Cloud HPC environment, scratch storage can be provided by Google services such as Persistent Disk, Local SSD, Cloud Filestore, or Parallelstore or by partner offerings like NetApp, DDN EXAScaler, Sycomp, and Weka.

- Google Cloud Persistent Disk is a block storage service that provides high performance and low latency access to data. Persistent Disk can be used to create a scratch directory for each user on an HPC cluster.

- Google Cloud Local SSD is a local SSD storage option that is available on some Compute Engine machine types. Local SSD can be used to create a scratch directory for each user on an HPC cluster.

- Google Cloud Filestore is a fully managed NFS file storage service that provides high performance and low latency access to data. Filestore can be used to create a scratch directory for each user on an HPC cluster.

- Google Cloud Parallelstore is a high-performance parallel file system that is optimized for HPC workloads. Parallelstore can be used to create a scratch directory for each user on an HPC cluster.

- NetApp Cloud Volumes Service is a fully managed file storage service that provides high performance and low latency access to data. NetApp Cloud Volumes Service can be used to create a scratch directory for each user on an HPC cluster.

- DDN EXAScaler Cloud is a high-performance parallel file system that is optimized for HPC workloads. DDN EXAScaler Cloud can be used to create a single or multiple scratch directories on an HPC cluster.

- WekaIO Matrix is a high-performance parallel file system that is optimized for HPC workloads. WekaIO Matrix can be used to create a scratch directory for each user on an HPC cluster.
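The storage roles described in this section can be summarized as a small lookup. The Python sketch below maps a role to the options named in this guide. Treating Local SSD and Persistent Disk as the non-shared scratch options is a simplifying assumption made for this example, and the function name is hypothetical.

```python
def suggest_storage(role: str, shared: bool = True) -> list[str]:
    """Map a storage role to the options named in this guide.

    role:   "home", "scratch", or "archive"
    shared: for scratch only, whether all nodes must see the same files
    """
    if role == "home":
        return ["Filestore", "NetApp Cloud Volumes Service"]
    if role == "archive":
        return ["Cloud Storage"]
    if role == "scratch":
        if shared:
            return [
                "Filestore",
                "Parallelstore",
                "NetApp Cloud Volumes Service",
                "DDN EXAScaler Cloud",
                "WekaIO Matrix",
            ]
        # Assumption: node-local scratch maps to per-VM block storage.
        return ["Local SSD", "Persistent Disk"]
    raise ValueError(f"unknown storage role: {role!r}")


print(suggest_storage("scratch", shared=False))
```

The lookup only narrows the candidates. The final choice still depends on the capacity, throughput, and cost requirements of the workload.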

Spot VMs

Spot VMs can be a cost-effective way to run CAE workloads. Spot VMs are available at a discounted price but can be preempted at any time with only a short notice period. Spot VMs are up to 91% off the cost of a standard instance and support the kind of features HPC users expect, including GPUs and Local SSDs.

If your workflow can tolerate the chance of interruption (preemption) and the application runs in a relatively short time frame (< 4 hours), the Spot model is worth testing. Customers have found that savings of up to 91% versus on-demand pricing are enough to justify occasional interruptions.

Note that certain VM types, such as H3, do not support Spot.
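Whether Spot pays off depends on how much work has to be repeated after a preemption. The sketch below is a deliberately simple cost model, not a description of how Spot pricing or preemption actually behaves. The discount and rework values are hypothetical inputs, and real discounts vary by machine type and region.

```python
def spot_job_cost(on_demand_cost: float, discount: float,
                  rework_fraction: float) -> float:
    """Expected cost of a job on Spot VMs under a simple rework model.

    on_demand_cost:  cost of one uninterrupted run at on-demand prices
    discount:        Spot discount as a fraction (0.6 means 60% off)
    rework_fraction: compute repeated after preemptions, as a fraction
                     of the uninterrupted runtime (an assumption)
    """
    return on_demand_cost * (1.0 - discount) * (1.0 + rework_fraction)


def break_even_rework(discount: float) -> float:
    """Rework fraction at which Spot costs the same as on-demand."""
    return discount / (1.0 - discount)


# Hypothetical example: 60% discount, 25% of the work repeated.
print(f"Spot cost:  {spot_job_cost(100.0, 0.6, 0.25):.2f}")
print(f"Break-even: {break_even_rework(0.6):.2f}x extra work")
```

Checkpointing the solver at regular intervals lowers the rework fraction, which is why short or restartable jobs are the natural candidates for Spot.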

Case studies

AirShaper

AirShaper is an online aerodynamics platform that allows designers and engineers to run air flow simulations in a completely automated way, allowing users without expertise in aerodynamics to obtain reliable results and improve their design.

AirShaper migrated their HPC Computational Fluid Dynamics (CFD) workloads to the new C2D VM family from an older VM platform, saving them simulation time and cost per workload run compared to their previous cloud environment, and dramatically improving their time to results compared to their on-premises environment.

“At AirShaper, we offer CFD simulations at a fixed cost. More and faster cores usually means a higher overall cost, in part due to scaling issues. But with H3 we can cut simulation times in half while even reducing the overall cost.”

- Wouter Remmerie, CEO, AirShaper

- Reduced costs by almost 50%

- Reduced simulation times by more than a factor of three compared to on-premises instances

- Reduced simulation times by 30% compared to previous-generation high performance computing instances

Altair

Altair is a global technology company that provides software and cloud solutions in the areas of product development, high performance computing (HPC), and data analytics. Altair's software is used by engineers, scientists, and data analysts to solve complex problems in a wide range of industries, including automotive, aerospace, manufacturing, and energy.

Altair is a Google Cloud partner, and its software is available on Google Cloud. Altair's software is optimized for Google Cloud, and it can be used to take advantage of the performance, scalability, and flexibility of Google Cloud. Altair is committed to helping customers achieve their HPC goals, and offers a wide range of software solutions for HPC. One of these is Radioss, a finite element analysis tool. Altair has been able to demonstrate significant improvements in cloud-based runtime of Radioss with the new H3 VM.

“At Altair, we're excited that initial testing indicates up to 3x reduction in simulation runtime for Radioss workloads running on H3 versus C2. These significantly faster runtimes on Google Cloud will help to increase engineering productivity for our joint customers.”

- Eric Lequiniou, Senior Vice President, Radioss Development and Altair Solver

TotalCAE

TotalCAE is a leading provider of managed HPC solutions for engineering and scientific applications. TotalCAE's solutions are designed to be easy to use, and to help customers accelerate their time to results, reduce costs, and improve productivity. TotalCAE's solutions are used by customers around the world to solve complex engineering and scientific problems. For example, TotalCAE's solutions are used to design and simulate aircraft, cars, and other vehicles; to analyze the performance of buildings and bridges; and to develop new drugs and therapies.

TotalCAE is a Google Cloud partner, and its solutions support operating on Google Cloud. TotalCAE's solutions are optimized for Google Cloud, and they can be used to take advantage of the performance, scalability, and flexibility of Google Cloud. By using Google Cloud’s HPC infrastructure, TotalCAE has been able to offer their customers better performance at a lower cost.

“With Google Cloud H3 instances, we have seen up to a 25% performance boost per core for CAE workloads at a 50% lower job cost than C2, enabling TotalCAE to offer customers up to 2.5x higher price performance, and scalability for CAE workloads on Google Cloud.”

- Rodney Mach, CEO, TotalCAE

Learning more about CAE workloads on Google Cloud

There is a lot more to learn about HPC and CAE workloads on Google Cloud. Contact us if you are interested in speaking to a Google HPC team member, or want to start using Google Cloud. Until then, follow up with all of our resources to continue learning!
