Dataproc

Managed Apache Spark and Hadoop with Google Dataproc

Run your most demanding Spark and open source workloads more easily with a managed service, more intelligently with Gemini, and faster with Lightning Engine.

Apache Spark is a trademark of the Apache Software Foundation.

Features

Industry-leading performance

Accelerate your most demanding Spark jobs with Lightning Engine. Our next-generation engine delivers over 4.3x faster performance through managed optimizations, reducing both TCO and the need for manual tuning. Lightning Engine is available now in preview for Dataproc.

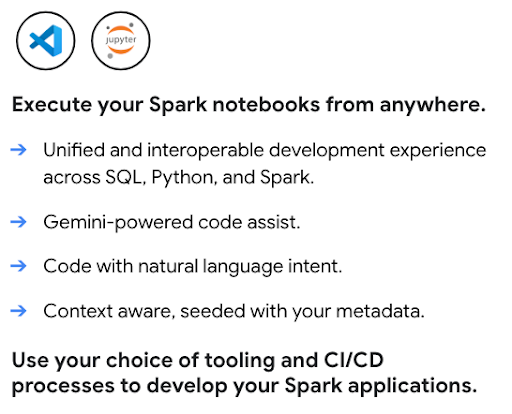

AI-powered development and operations

Accelerate your entire workflow with Gemini. Get AI-powered assistance to write and debug PySpark code, and use Gemini Cloud Assist to get automated root-cause analysis for failed or slow-running jobs, dramatically reducing troubleshooting time.

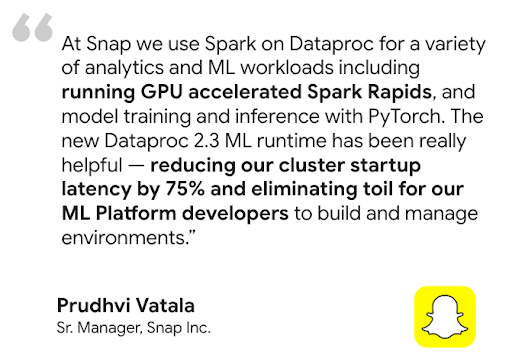

Ready for enterprise AI/ML

Build and operationalize your entire machine learning lifecycle. Accelerate model training and inference with GPU support, powered by NVIDIA RAPIDS™, and pre-configured ML Runtimes. Then, integrate with the broader Google Cloud AI ecosystem to orchestrate end-to-end MLOps with Vertex AI Pipelines.
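As a minimal sketch of GPU support, the function below builds a cluster-creation request as a plain dict in the shape accepted by the `google-cloud-dataproc` Python client (`ClusterControllerClient.create_cluster`). The project, region, cluster name, machine types, and GPU type are hypothetical placeholders. The two Spark properties enable the RAPIDS Accelerator plugin, assuming its jar is already installed on the cluster (for example, by an initialization action), which this sketch does not do.

```python
from typing import Any


def gpu_cluster_request(
    project_id: str,
    region: str,
    cluster_name: str,
    num_workers: int = 2,
    gpus_per_worker: int = 1,
    gpu_type: str = "nvidia-tesla-t4",  # assumed GPU type; check availability in your zone
) -> dict[str, Any]:
    """Build a create_cluster request with GPUs attached to every primary worker."""
    worker_config = {
        "num_instances": num_workers,
        "machine_type_uri": "n1-standard-8",  # hypothetical; T4 GPUs attach to N1 machine types
        "accelerators": [
            {"accelerator_type_uri": gpu_type, "accelerator_count": gpus_per_worker}
        ],
    }
    # Cluster properties use "<file-prefix>:<property>" keys; "spark:" targets spark-defaults.conf.
    # These settings enable the RAPIDS Accelerator plugin, assuming its jar is already installed.
    spark_properties = {
        "spark:spark.plugins": "com.nvidia.spark.SQLPlugin",
        "spark:spark.rapids.sql.enabled": "true",
    }
    return {
        "project_id": project_id,
        "region": region,
        "cluster": {
            "project_id": project_id,
            "cluster_name": cluster_name,
            "config": {
                "master_config": {"num_instances": 1, "machine_type_uri": "n1-standard-4"},
                "worker_config": worker_config,
                "software_config": {"properties": spark_properties},
            },
        },
    }
```

With the `google-cloud-dataproc` package installed, a request like this could be passed to `dataproc_v1.ClusterControllerClient(client_options={"api_endpoint": f"{region}-dataproc.googleapis.com:443"}).create_cluster(request=...)`, which returns a long-running operation.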

Powerful lakehouse integrations

Connect natively to an open lakehouse architecture. Process data directly from BigQuery, orchestrate MLOps with Vertex AI Pipelines, and unify governance over your open data with BigLake and Dataplex Universal Catalog.

Unmatched control and customization

Tailor each Dataproc cluster to your exact needs. Develop in Python, Scala, or Java, choose from a wide range of machine types, use initialization actions to install custom software, and bring your own container images for maximum portability.
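To make the customization options concrete, here is a hedged sketch of a `ClusterConfig` dict using the Dataproc API field names for machine types (`machine_type_uri`), initialization actions (`initialization_actions[].executable_file`), and the image version (`software_config.image_version`). The machine types, script URI, and image version in the usage are hypothetical; container images are not covered here.

```python
from typing import Any, Optional


def customized_cluster_config(
    num_workers: int,
    worker_machine_type: str,
    init_action_uris: list[str],
    master_machine_type: str = "n2-standard-4",  # hypothetical default
    image_version: Optional[str] = None,
) -> dict[str, Any]:
    """Build a ClusterConfig dict with chosen machine types and initialization actions."""
    config: dict[str, Any] = {
        "master_config": {"num_instances": 1, "machine_type_uri": master_machine_type},
        "worker_config": {"num_instances": num_workers, "machine_type_uri": worker_machine_type},
        # Each initialization action is an executable (e.g., a shell script) in Cloud Storage
        # that Dataproc runs on the cluster's nodes during cluster creation.
        "initialization_actions": [{"executable_file": uri} for uri in init_action_uris],
    }
    if image_version is not None:
        # Pin the Dataproc image version, which determines the bundled Spark and Hadoop versions.
        config["software_config"] = {"image_version": image_version}
    return config


# Example: four n2-standard-8 workers plus a hypothetical dependency-install script.
cfg = customized_cluster_config(
    4, "n2-standard-8", ["gs://my-bucket/install-deps.sh"], image_version="2.2-debian12"
)
```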

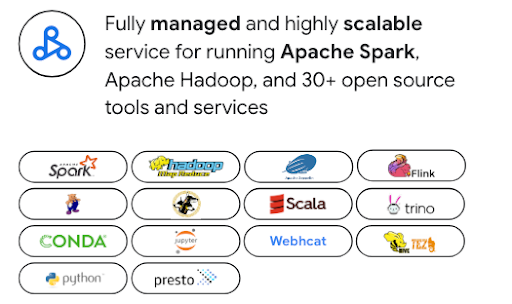

Built for the modern open source data stack

Avoid vendor lock-in. While Dataproc is optimized for Apache Spark, it supports 30+ open source tools like Apache Hadoop, Flink, Trino, and Presto. It integrates seamlessly with popular orchestrators like Airflow and can be extended with Kubernetes and Docker for maximum flexibility.

Enterprise-grade security

Integrate seamlessly with your security posture. Leverage IAM for granular permissions, VPC Service Controls for network security, and Kerberos for strong authentication on your Spark cluster.
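The IAM and Kerberos settings map onto cluster configuration roughly as in this sketch, which uses the Dataproc API fields `gce_cluster_config.service_account` and `security_config.kerberos_config`. The service account, Cloud Storage URI, and Cloud KMS key are hypothetical placeholders, and the password file is assumed to be encrypted with that key. VPC Service Controls are defined as a perimeter around projects, not in the cluster config, so they do not appear here.

```python
from typing import Any


def secured_cluster_config(
    service_account: str,
    root_password_uri: str,
    kms_key_uri: str,
) -> dict[str, Any]:
    """ClusterConfig fragment: dedicated service account, internal IPs only, and Kerberos."""
    return {
        "gce_cluster_config": {
            # Cluster VMs run as this service account, so its IAM roles bound what jobs can access.
            "service_account": service_account,
            # Keep cluster VMs off public IP addresses.
            "internal_ip_only": True,
        },
        "security_config": {
            "kerberos_config": {
                "enable_kerberos": True,
                # Cloud Storage URI of a KMS-encrypted file holding the root principal password.
                "root_principal_password_uri": root_password_uri,
                # Cloud KMS key used to decrypt the password file above.
                "kms_key_uri": kms_key_uri,
            }
        },
    }
```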

Common Uses

Cloud migration

Seamlessly lift and shift on-prem Apache Hadoop and Spark workloads. Dataproc is also the ideal path for moving from self-managed 'DIY Spark' to a fully managed service. Its support for a wide range of Spark versions, including legacy 2.x, simplifies migration by reducing the need for immediate code refactoring. This allows you to leverage your team's existing open source skills for a faster path to the cloud.

Lakehouse modernization

Use Dataproc as the powerful, open source processing engine for your modern data lakehouse. Process data in open formats like Apache Iceberg directly from your data lake, eliminating data silos and costly data movement. Integrate seamlessly with BigQuery and Dataplex Universal Catalog for a truly unified, multi-engine analytics and governance platform.
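As an illustration of processing Iceberg tables from Spark, the sketch below produces the standard Apache Iceberg Spark catalog properties. The catalog name and warehouse path are hypothetical, and the `hadoop` (file-system) catalog type is chosen only to keep the example self-contained; shared lakehouses usually use a metastore-backed catalog instead. It also assumes the Iceberg Spark runtime jar is on the classpath.

```python
def iceberg_spark_properties(catalog: str, warehouse_uri: str) -> dict[str, str]:
    """Spark properties that register an Iceberg catalog named `catalog`."""
    prefix = f"spark.sql.catalog.{catalog}"
    return {
        # Adds Iceberg's SQL extensions (e.g., MERGE INTO and CALL procedures).
        "spark.sql.extensions": "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        # File-system catalog for simplicity; not a recommendation for production.
        f"{prefix}.type": "hadoop",
        f"{prefix}.warehouse": warehouse_uri,
    }


def as_cluster_properties(spark_props: dict[str, str]) -> dict[str, str]:
    """Prefix keys with "spark:" so they can be set as Dataproc cluster properties."""
    return {f"spark:{key}": value for key, value in spark_props.items()}
```

In a PySpark job, the same key/value pairs could instead be applied one by one with `SparkSession.builder.config(key, value)`, after which tables are addressed as `lake.db.table` when the catalog is named `lake`.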

Data engineering

Build and orchestrate complex, long-running Spark ETL pipelines with enterprise-grade reliability and scale. Leverage powerful features like autoscaling to optimize for cost and performance, and use workflow templates to automate and manage your most critical, production-level jobs from end to end.
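Workflow templates describe a pipeline as a DAG: each job has a `step_id` and lists the steps it depends on in `prerequisite_step_ids`. The sketch below builds a hypothetical four-step template dict in the shape used by the Dataproc API (`placement.managed_cluster`, `jobs[].pyspark_job`) and then uses Python's standard `graphlib` to show which steps become runnable together. Dataproc performs the actual scheduling; `execution_waves` only illustrates the ordering and is not part of any Dataproc API. The bucket, script names, and cluster size are assumptions.

```python
from graphlib import TopologicalSorter
from typing import Any


def workflow_template(cluster_name: str, bucket: str) -> dict[str, Any]:
    """Hypothetical ETL template: ingest, then clean and enrich, then publish."""
    def pyspark_step(step_id: str, script: str, after: list[str]) -> dict[str, Any]:
        return {
            "step_id": step_id,
            "pyspark_job": {"main_python_file_uri": f"gs://{bucket}/jobs/{script}"},
            "prerequisite_step_ids": after,
        }

    return {
        # A managed cluster is created for the workflow and deleted when it finishes.
        "placement": {
            "managed_cluster": {
                "cluster_name": cluster_name,
                "config": {"worker_config": {"num_instances": 2}},
            }
        },
        "jobs": [
            pyspark_step("ingest", "ingest.py", []),
            pyspark_step("clean", "clean.py", ["ingest"]),
            pyspark_step("enrich", "enrich.py", ["ingest"]),
            pyspark_step("publish", "publish.py", ["clean", "enrich"]),
        ],
    }


def execution_waves(template: dict[str, Any]) -> list[list[str]]:
    """Group step IDs into waves whose prerequisites are all complete."""
    sorter = TopologicalSorter(
        {job["step_id"]: job["prerequisite_step_ids"] for job in template["jobs"]}
    )
    sorter.prepare()  # raises graphlib.CycleError if the prerequisites form a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


print(execution_waves(workflow_template("etl-cluster", "my-bucket")))
# [['ingest'], ['clean', 'enrich'], ['publish']]
```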

Data science at scale

Provide data science teams with powerful, customizable Spark cluster environments for large-scale model training and batch inference. With pre-configured ML Runtimes and GPU support, you can accelerate the entire ML lifecycle and integrate with Vertex AI to build and operationalize end-to-end MLOps pipelines.

Flexible OSS analytics engines

Go beyond Spark and Hadoop without adding operational overhead. Deploy dedicated clusters with Trino for interactive SQL, Flink for advanced stream processing, or other specialized open source engines. Dataproc provides a unified control plane to manage this diverse ecosystem with the simplicity of a managed service.
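A dedicated engine cluster is mostly a matter of selecting optional components. In this hedged sketch, the component names are an assumed subset of the Dataproc API's `Component` enum (availability varies by image version), and `endpoint_config.enable_http_port_access` turns on Component Gateway for browser access to component web UIs. The image version in the usage is a placeholder.

```python
from typing import Any

# Assumed subset of Dataproc optional component names; availability varies by image version.
KNOWN_COMPONENTS = {"DOCKER", "FLINK", "JUPYTER", "TRINO", "ZOOKEEPER"}


def engine_cluster_config(image_version: str, components: list[str]) -> dict[str, Any]:
    """ClusterConfig fragment for a cluster dedicated to one or more extra OSS engines."""
    unknown = sorted(set(components) - KNOWN_COMPONENTS)
    if unknown:
        raise ValueError(f"components not in this sketch's list: {unknown}")
    return {
        "software_config": {
            "image_version": image_version,
            "optional_components": list(components),
        },
        # Component Gateway provides browser access to component web UIs.
        "endpoint_config": {"enable_http_port_access": True},
    }


# Example: an interactive SQL cluster running Trino.
trino_cfg = engine_cluster_config("2.2-debian12", ["TRINO"])
```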

Pricing

Dataproc managed clusters

Dataproc offers pay-as-you-go pricing. Optimize costs with autoscaling and preemptible VMs.

Key components

- Compute Engine instances (vCPU, memory)

- Dataproc service fee (per vCPU-hour)

- Persistent Disks

Example

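A cluster of 6 nodes (1 main + 5 workers) with 4 vCPUs each that runs for 2 hours incurs a Dataproc service fee of $0.48:

Dataproc charge = # of vCPUs * hours * Dataproc price per vCPU-hour = (6 * 4) * 2 * $0.01 = 24 * 2 * $0.01 = $0.48

Compute Engine instances and Persistent Disks are billed separately.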
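The formula is simple enough to script. This is a minimal sketch that assumes the $0.01 per vCPU-hour rate used in the example; it uses `Decimal` so that currency amounts avoid binary floating-point rounding. Like the formula, it covers only the Dataproc service fee, not Compute Engine or Persistent Disk charges.

```python
from decimal import Decimal


def dataproc_service_fee(
    nodes: int,
    vcpus_per_node: int,
    hours: Decimal,
    price_per_vcpu_hour: Decimal = Decimal("0.01"),  # rate from the example; check current pricing
) -> Decimal:
    """Dataproc charge = # of vCPUs * hours * Dataproc price (excludes Compute Engine and disks)."""
    total_vcpus = nodes * vcpus_per_node
    return total_vcpus * hours * price_per_vcpu_hour


# The example cluster: 1 main + 5 workers, 4 vCPUs each, running for 2 hours.
print(dataproc_service_fee(nodes=6, vcpus_per_node=4, hours=Decimal("2")))  # 0.48
```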

Business Case

Build your business case for Google Dataproc

The economic benefits of Google Cloud Dataproc and Serverless Spark versus alternative solutions

See how Dataproc delivers significant TCO savings and business value compared to on-prem and other cloud solutions.

In the report:

Discover how Dataproc and Google Cloud Serverless for Apache Spark can deliver 18% to 60% cost savings compared with other cloud-based Spark alternatives.

Explore how Google Cloud Serverless for Apache Spark can provide 21% to 55% better price-performance than other serverless Spark offerings.

Learn how Dataproc and Google Cloud Serverless for Apache Spark simplify Spark deployments and help reduce operational complexity.

FAQ

When should I choose Dataproc versus Google Cloud Serverless for Apache Spark?

Choose Dataproc when you need fine-grained control over your cluster environment, are migrating existing Hadoop/Spark workloads, or require a persistent cluster with a diverse set of open source tools. The two options also differ in management model, ideal workloads, and cost structure.

Can I use more than just Spark and Hadoop?

Yes. Dataproc is a unified platform for the modern open source data stack. It supports over 30 components, allowing you to run dedicated clusters for tools like Flink for stream processing or Trino for interactive SQL, all under a single managed service.

How much control do I have over the cluster environment?

You have a high degree of control. Dataproc allows you to customize machine types, disk sizes, and network configurations. You can also use initialization actions to install custom software, bring your own container images, and leverage Spot VMs to optimize costs.
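Disk sizes and Spot VMs are also set per instance group. In this hedged sketch, Spot VMs are used only as secondary workers, which do not run HDFS DataNodes, so reclaiming them does not lose HDFS data; the primary workers stay on standard VMs. Field names follow the Dataproc API (`disk_config.boot_disk_size_gb`, `secondary_worker_config.preemptibility`); the machine type, disk type, and sizes are hypothetical.

```python
from typing import Any


def spot_worker_config(
    primary_workers: int,
    spot_workers: int,
    machine_type: str,
    boot_disk_gb: int = 500,  # hypothetical size
) -> dict[str, Any]:
    """ClusterConfig fragment: standard primary workers plus Spot VM secondary workers."""
    disk = {"boot_disk_type": "pd-balanced", "boot_disk_size_gb": boot_disk_gb}
    return {
        "worker_config": {
            "num_instances": primary_workers,
            "machine_type_uri": machine_type,
            "disk_config": disk,
        },
        # Spot VMs can be reclaimed by Compute Engine at any time, so they are
        # limited to secondary (processing-only) workers here.
        "secondary_worker_config": {
            "num_instances": spot_workers,
            "preemptibility": "SPOT",
            "disk_config": dict(disk),
        },
    }
```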