Learn more about Apigee's AI capabilities for developing, integrating, and scaling AI solutions.

Implement an enterprise-ready AI gateway

Apigee’s core API gateway functionality includes native capabilities for managing traffic for AI applications, providing a consistent API contract for service consumption.

Capabilities include:

- Model abstraction

- Multicloud model routing

- Request / response enrichment

- RAG integrations

- Agent connectivity to 3P systems (using Google Cloud’s Application Integration platform)

- LLM circuit breaker pattern for high availability

- Semantic caching
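As a rough illustration of model abstraction and routing, the sketch below keeps one provider-neutral request shape and maps a stable alias to interchangeable backends. It is not Apigee configuration: `ChatRequest`, `ModelRouter`, and the two backend functions are hypothetical names invented for this example, and the backends are stand-ins for real provider calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class ChatRequest:
    """Provider-neutral request: the consistent contract consumers see."""
    model_alias: str
    prompt: str


@dataclass
class ChatResponse:
    text: str
    served_by: str


# Hypothetical backends; a real gateway would call a provider API here.
def backend_a(prompt: str) -> str:
    return f"[backend-a] {prompt}"


def backend_b(prompt: str) -> str:
    return f"[backend-b] {prompt}"


class ModelRouter:
    """Maps stable aliases to backends so callers never name a provider."""

    def __init__(self) -> None:
        self._routes: Dict[str, Tuple[str, Callable[[str], str]]] = {}

    def register(self, alias: str, name: str, backend: Callable[[str], str]) -> None:
        # Re-registering an alias swaps the backend without changing callers.
        self._routes[alias] = (name, backend)

    def handle(self, request: ChatRequest) -> ChatResponse:
        if request.model_alias not in self._routes:
            raise KeyError(f"unknown model alias: {request.model_alias}")
        name, backend = self._routes[request.model_alias]
        return ChatResponse(text=backend(request.prompt), served_by=name)
```

Because consumers address only the alias, the backend behind it can change without any change to the request they send.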

Resources

Programmable API proxies

Attaching policies to different points in an Apigee API proxy flow gives you fine-grained control over API behavior. This placement determines precisely when each policy is enforced as requests and responses travel through the proxy.

The Next Generation of Integration: AI Driven

Unlock the power of generative AI to build advanced automation workflows. Learn how to connect over 100 applications using simple natural language. Discover how gen AI agents can optimize your operations within the Google Cloud ecosystem.

Serve Hugging Face models from Vertex AI with Apigee

Build an LLM API gateway with Apigee and Vertex AI. This hands-on demo shows how to create a proxy for your LLM endpoint, ideal for developers new to Apigee or integrating AI APIs. Learn how Apigee secures, manages, and optimizes your AI applications.

Power agentic workflows grounded in enterprise context

AI agents use capabilities from LLMs to accomplish tasks for end users. These agents can be built using a variety of tools—from no-code and low-code platforms like Agentspace to full-code frameworks like LangChain or LlamaIndex. Apigee acts as an intermediary between your AI application and its agents.

With Gemini Code Assist in Apigee, now generally available, you can rapidly create API specifications using natural language in both the Cloud Code and Gemini Chat interfaces. Drawing on your organization's API ecosystem through API hub provides Enterprise Context, which supports consistent, secure APIs with nested object support and proactive duplicate API detection.

Capabilities include:

- Apigee API hub, to catalog first-party and third-party APIs and workflows

- Token limit enforcement for cost controls

- Multi-agent orchestration

- Authentication and authorization

- Semantic caching, for optimized performance

- API specification generation with Enterprise Context (using Gemini Code Assist)
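Token limit enforcement, listed above, can be pictured as a per-application budget that is checked before a request is forwarded to the model. The sketch below is only an illustration of that idea, not Apigee's implementation: `TokenBudget` and its methods are hypothetical, and the token counts are assumed to be supplied by the caller.

```python
from typing import Dict


class TokenBudget:
    """Tracks LLM tokens consumed per app within one accounting window."""

    def __init__(self, limit_per_window: int) -> None:
        if limit_per_window < 0:
            raise ValueError("limit_per_window must be non-negative")
        self.limit = limit_per_window
        self._used: Dict[str, int] = {}

    def try_consume(self, app_id: str, tokens: int) -> bool:
        """Record usage and return True, or return False if it would exceed the limit."""
        if tokens < 0:
            raise ValueError("tokens must be non-negative")
        current = self._used.get(app_id, 0)
        if current + tokens > self.limit:
            return False  # Reject without recording any usage.
        self._used[app_id] = current + tokens
        return True

    def remaining(self, app_id: str) -> int:
        return self.limit - self._used.get(app_id, 0)

    def reset(self) -> None:
        """Start a new accounting window for every app."""
        self._used.clear()
```

Each app gets its own budget, so one heavy consumer cannot exhaust the allowance of another.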

Resources

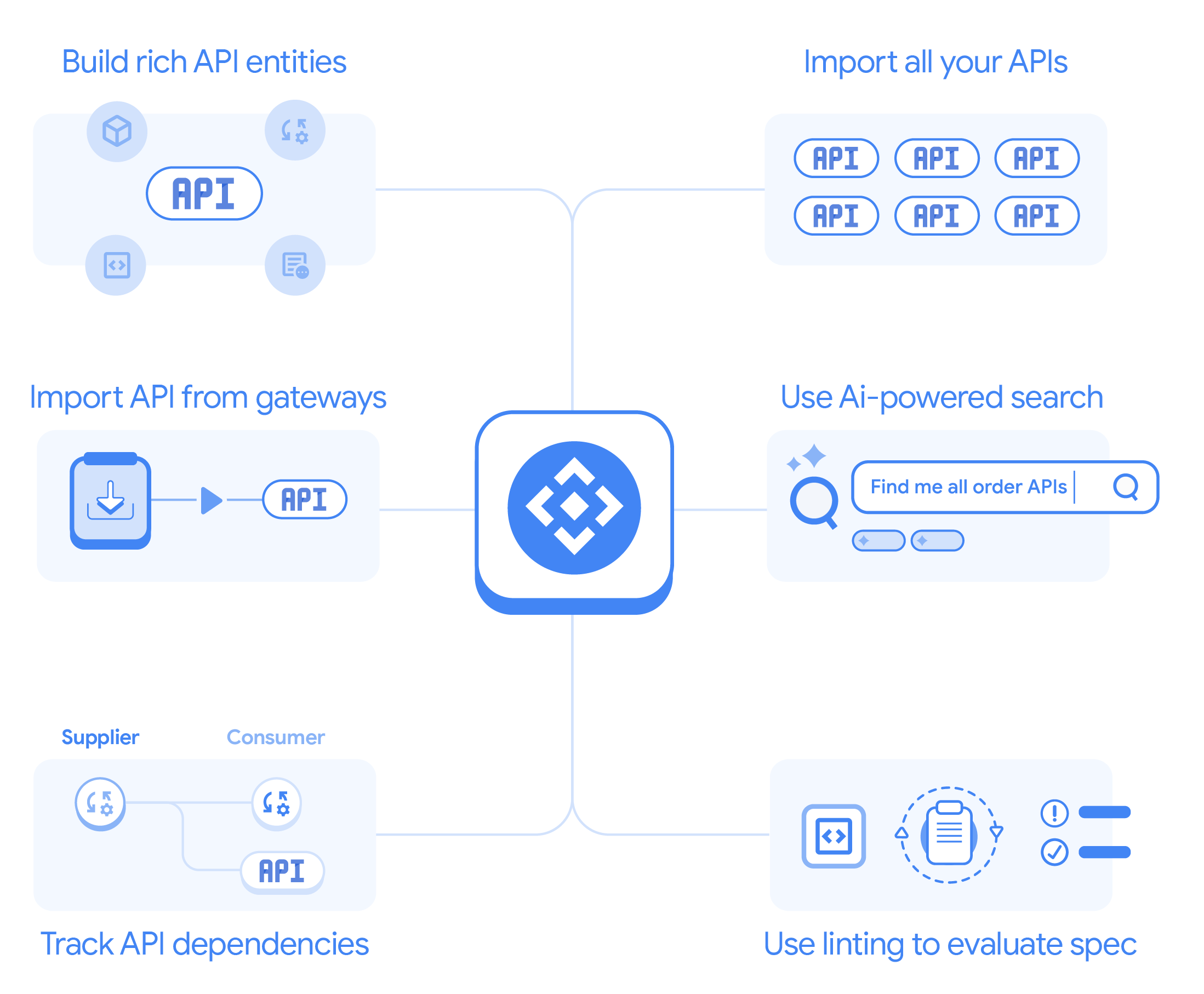

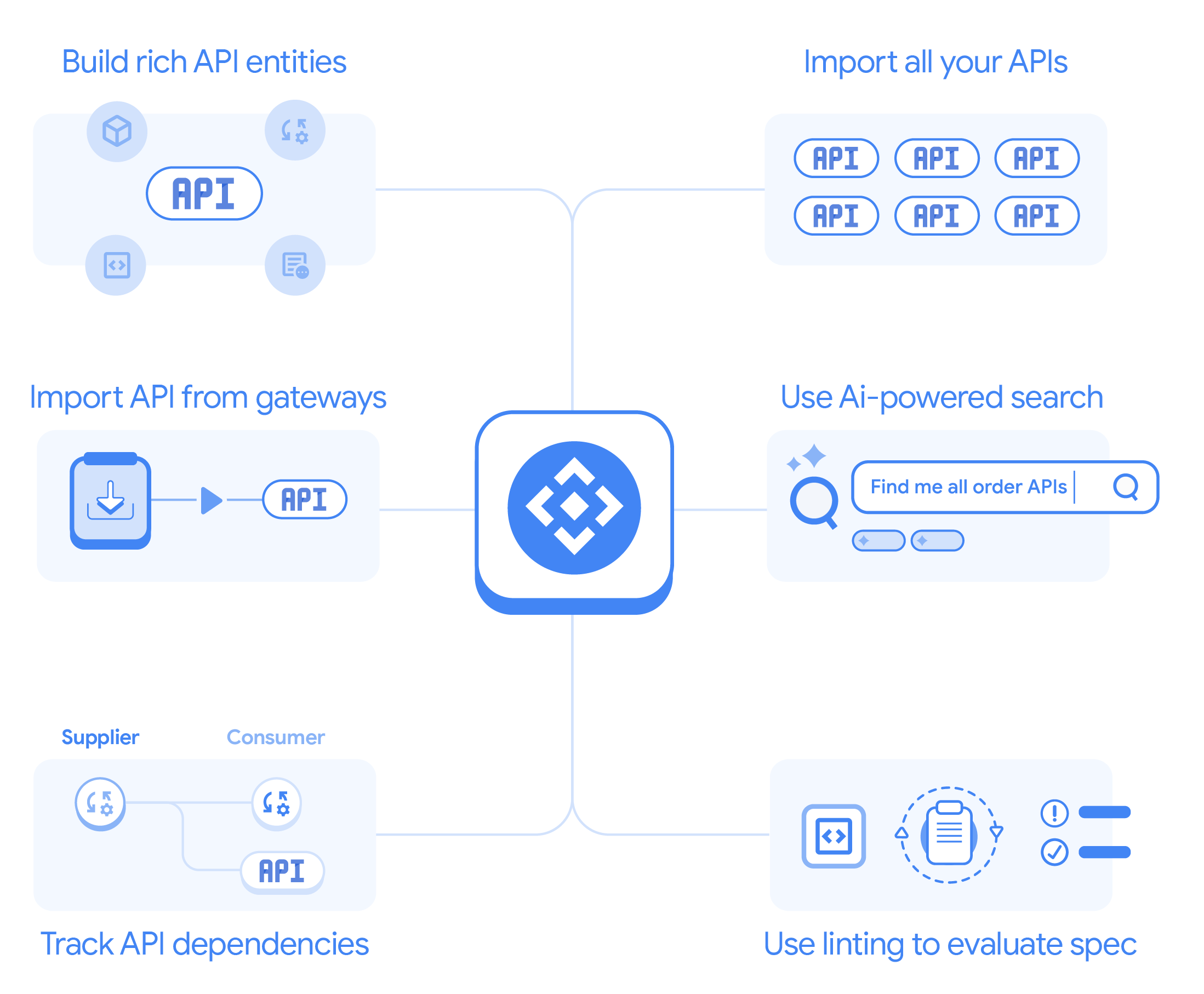

Documentation: Apigee API hub

Apigee API hub, a centralized platform on Google Cloud, lets you discover, manage, and document all your APIs for better visibility, control, reuse, governance, and faster development.

Blog: Apigee API hub is now generally available

Centrally discover, govern, and manage all your APIs with the now generally available Apigee API hub. This platform provides a single pane of glass for your entire API landscape, regardless of deployment location, empowering teams to streamline operations and strengthen enterprise-wide API governance.

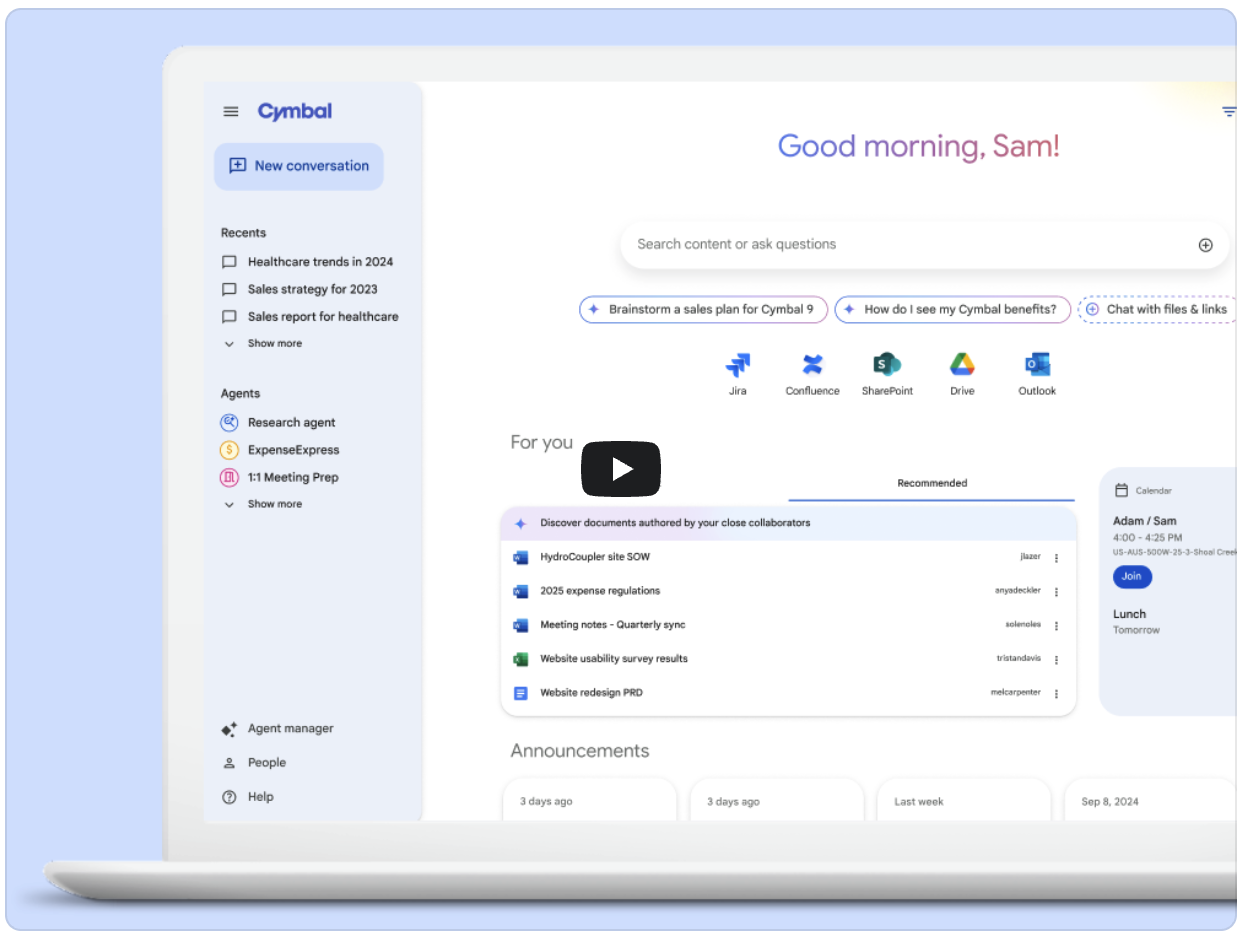

Learn more about Agentspace

Unleash your workforce's potential with Google Cloud Agentspace. Empower employees with secure access to company data, AI-driven task automation, and intelligent insights—driving productivity to new heights.

Govern enterprise-wide access and usage of LLMs

Apigee’s native policies and distribution capabilities enable gen AI platform teams to efficiently govern AI applications and the use of LLMs.

Capabilities include:

- Integrated developer portal for self-service access to models

- Granular, policy-based controls for model access and usage limits

- Monitoring and reporting of token usage limits

- LLM auditing and logging

Resources

Blog: Operationalizing generative AI apps with Apigee

Apigee, Google Cloud's API management platform, enables businesses to securely scale and manage APIs for their AI services, ensuring reliable access even with growing user demand.

Controlling access to your APIs by registering apps

Control access to your APIs on Apigee by registering developer apps. These registered apps are then used to obtain API keys, enabling secure API usage.

Building and Scaling Generative AI with Apigee API Management

Generative AI is transforming industries, but how do you effectively bring these powerful models into production? This webinar explores the pivotal role of APIs in operationalizing generative AI.

Keep your AI applications performant and highly available

Apigee provides deep observability for AI applications, to help you manage and optimize generative AI costs and efficiently govern your organization’s AI usage.

Capabilities include:

- Visibility into model usage and application token consumption

- Internal cost reporting and optimization

- Custom dashboards to monitor usage based on actual token counts (through integration with Looker Studio)

- Logging for AI applications

Resources

Semantic cache in Apigee reference solution

This Apigee sample uses cached responses and vector search to find similar previous requests based on prompt proximity and a configurable similarity score.
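The idea of matching a new prompt against earlier ones by proximity and a similarity score can be sketched as follows. This is a simplified illustration rather than the reference solution itself: it assumes prompt embeddings are already computed elsewhere, uses a linear scan in place of a real vector search, and `SemanticCache` and `cosine_similarity` are hypothetical names.

```python
import math
from typing import List, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Returns a cached response when a prompt embedding is close enough."""

    def __init__(self, threshold: float = 0.9) -> None:
        self.threshold = threshold  # Configurable similarity score.
        self._entries: List[Tuple[List[float], str]] = []

    def put(self, embedding: Sequence[float], response: str) -> None:
        self._entries.append((list(embedding), response))

    def get(self, embedding: Sequence[float]) -> Optional[str]:
        best_score = -1.0
        best_response: Optional[str] = None
        # Linear scan stands in for a vector search index.
        for stored, response in self._entries:
            score = cosine_similarity(embedding, stored)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None
```

Raising the threshold trades cache hit rate for a lower chance of returning a response to a prompt that only looks similar.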

Pattern for LLM circuit breaking in Apigee

The circuit breaker pattern in Apigee helps RAG applications that use LLMs avoid HTTP 429 (Too Many Requests) errors by managing traffic and failing gracefully when a model endpoint is overloaded.
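A generic circuit breaker can be sketched in a few lines. The version below is a minimal illustration of the pattern, not the Apigee implementation: `CircuitBreaker`, `CircuitOpenError`, and the parameter names are hypothetical, and the clock is injectable so the behavior can be exercised without waiting.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and the call is not attempted."""


class CircuitBreaker:
    def __init__(
        self,
        failure_threshold: int = 3,
        reset_after_seconds: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._threshold = failure_threshold
        self._reset_after = reset_after_seconds
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_after:
                # Open: fail fast instead of sending more traffic upstream.
                raise CircuitOpenError("circuit open; failing fast")
            # Cool-down elapsed: allow one trial call (half-open state).
            self._opened_at = None
            self._failures = self._threshold - 1
        try:
            result = fn()
        except Exception:
            self._failures += 1
            if self._failures >= self._threshold:
                self._opened_at = self._clock()
            raise
        self._failures = 0  # Any success closes the circuit fully.
        return result
```

While the circuit is open, callers get an immediate error they can handle, for example by routing to a fallback model, rather than adding load to an endpoint that is already rejecting requests.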

Secure LLM APIs, while protecting your end users and your brand

Apigee acts as a secure gateway for LLM APIs, allowing you to control access with API keys, OAuth 2.0, and JWT validation, and prevent abuse and overload by enforcing rate limits and quotas. Apigee Advanced API Security can offer even more advanced protection.

Capabilities include:

- Model Armor integration and policy enforcement for prompt and response sanitization

- Authentication and authorization

- Abuse and anomaly detection

- Mitigation against OWASP Top 10 API and LLM security risks

- LLM API security misconfiguration detection

- Google SecOps and 3P SIEM integration
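The combination of API key checks and rate limits described in this section can be illustrated with a small gate that runs before a request reaches the model. This is a sketch under stated assumptions, not Apigee policy behavior: `Gatekeeper` is a hypothetical class, keys are held in memory, and a simple fixed window is used for counting requests.

```python
import time
from typing import Callable, Dict, Iterable, Tuple


class Gatekeeper:
    """Validates an API key, then applies a fixed-window request limit per key."""

    def __init__(
        self,
        valid_keys: Iterable[str],
        max_requests: int,
        window_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._valid_keys = set(valid_keys)
        self._max = max_requests
        self._window = window_seconds
        self._clock = clock
        # Per key: (window start time, requests counted in that window).
        self._windows: Dict[str, Tuple[float, int]] = {}

    def check(self, api_key: str) -> int:
        """Return an HTTP-style status code: 200, 401, or 429."""
        if api_key not in self._valid_keys:
            return 401  # Unknown key: reject before counting anything.
        now = self._clock()
        start, count = self._windows.get(api_key, (now, 0))
        if now - start >= self._window:
            start, count = now, 0  # Window expired: start a fresh one.
        if count >= self._max:
            self._windows[api_key] = (start, count)
            return 429  # Over the limit for this window.
        self._windows[api_key] = (start, count + 1)
        return 200
```

Checking the key first means unauthenticated traffic never consumes another caller's allowance.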

Resources

Apigee Advanced API Security

Protect your APIs with Google Cloud's Advanced API Security. This service analyzes API traffic and configurations to stop threats without impacting performance. It identifies suspicious requests for blocking and flags misconfigurations.

White paper: Mitigating OWASP Top 10 API Security Threats

Stop OWASP Top 10 API attacks with Apigee. Secure authentication, authorization, and threat detection protect your business.

Blog: Protecting your APIs from OWASP’s Top 10 Security Threats

Safeguard your APIs from top threats like broken authentication and injection flaws with Apigee, Google Cloud's API management platform.

Developer resources

Learn how to leverage Apigee to unlock the full potential of your generative AI projects.

Apigee AI solutions for your use case

Secure AI applications with Apigee

Use Apigee as a policy enforcement point for Model Armor prompt and response sanitization, and mitigate OWASP Top 10 API and LLM security risks.

Ready to unlock the full potential of gen AI?

Unlock the potential of generative AI for your solutions with Apigee. Leverage Apigee's robust operationalization features to build secure, scalable, and optimized deployments with confidence.

Ready to begin? Explore our Apigee generative AI samples page.