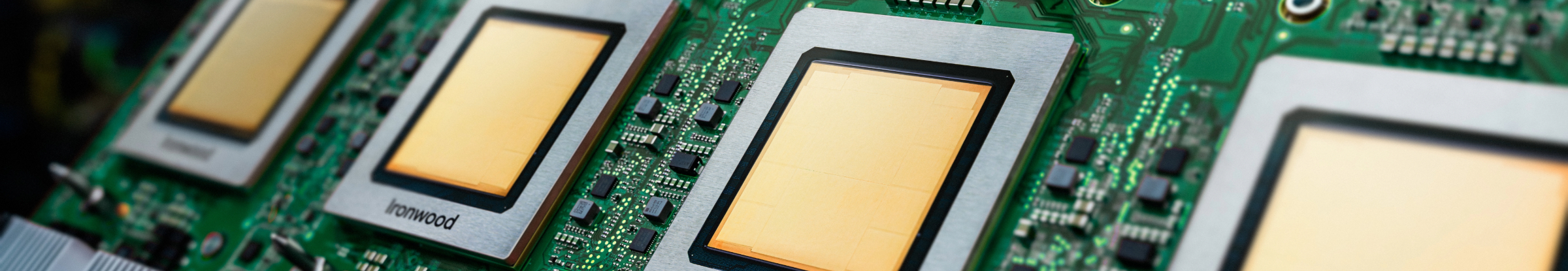

Ironwood: Google Cloud's 7th Generation TPU Engineered for Inference

Train, fine-tune, and serve larger models and datasets efficiently with the most powerful TPU yet.

Is your infrastructure ready for the age of inference?

Ironwood is Google’s most powerful, capable, and energy-efficient Tensor Processing Unit (TPU) yet, designed to power reasoning ("thinking") and inference-heavy AI models at scale. Building on extensive experience developing TPUs for Google's internal services and Google Cloud customers, Ironwood is engineered to handle the computational and memory demands of workloads such as Large Language Models (LLMs), Mixture-of-Experts (MoE) models, and advanced reasoning tasks. It supports both training and serving workloads within the Google Cloud AI Hypercomputer architecture.

Optimized for Large Language Models (LLMs): Ironwood is specifically designed to meet the growing compute and memory demands of LLMs and generative AI applications.

Enhanced Interconnect Technology: Benefit from improvements to TPU interconnect technology, enabling faster chip-to-chip communication and lower latency.

High-Performance Computing: Experience significant performance gains for a wide range of inference tasks.

Sustainable AI: Ironwood continues Google Cloud's commitment to sustainability, delivering high performance with improved energy efficiency.

Ironwood combines higher compute density, larger memory capacity, and greater interconnect bandwidth with significant gains in power efficiency. These features are designed to enable higher throughput and lower latency for demanding AI training and serving workloads, particularly those involving large, complex models.
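The link between memory bandwidth, compute, and serving latency can be made concrete with a simple roofline-style estimate for one decode step of a dense LLM. This is an illustrative sketch only: the `Accelerator` class, the `decode_step_time` helper, and every hardware number below are hypothetical placeholders, not published Ironwood specifications, and the model deliberately ignores KV-cache, activation, and interconnect traffic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    """Hypothetical single-chip spec. Values are placeholders, NOT Ironwood specs."""
    peak_flops: float      # peak arithmetic throughput, FLOP/s
    hbm_bandwidth: float   # high-bandwidth memory bandwidth, bytes/s
    hbm_capacity: float    # high-bandwidth memory capacity, bytes


def decode_step_time(chip: Accelerator, n_params: float,
                     bytes_per_param: float, batch: int) -> dict:
    """Roofline estimate for one autoregressive decode step of a dense model.

    Assumptions (simplifications, not a real performance model):
    - every weight is read from HBM exactly once per step, shared by the batch;
    - each generated token costs about 2 FLOPs per parameter (multiply + add);
    - KV cache, activations, and chip-to-chip traffic are ignored.
    """
    weight_bytes = n_params * bytes_per_param
    if weight_bytes > chip.hbm_capacity:
        # Larger models must be sharded across chips, which adds interconnect cost.
        raise ValueError("weights do not fit in one chip's HBM; shard across chips")

    memory_time = weight_bytes / chip.hbm_bandwidth          # time to stream weights
    compute_time = 2 * n_params * batch / chip.peak_flops    # time to do the math
    return {
        "memory_time_s": memory_time,
        "compute_time_s": compute_time,
        # The step can finish no faster than the slower of the two.
        "step_time_s": max(memory_time, compute_time),
        "bound": "memory" if memory_time >= compute_time else "compute",
    }


if __name__ == "__main__":
    # Hypothetical chip: 1 PFLOP/s, 5 TB/s HBM, 100 GB HBM.
    chip = Accelerator(peak_flops=1e15, hbm_bandwidth=5e12, hbm_capacity=100e9)
    # Hypothetical 8B-parameter model stored at 1 byte per parameter.
    for batch in (1, 512):
        print(batch, decode_step_time(chip, n_params=8e9, bytes_per_param=1, batch=batch))
```

With these placeholder numbers, a single-sequence decode step spends about 1.6 ms streaming weights but only about 16 µs on arithmetic, so it is memory-bound; batching more than about 100 sequences shifts the bottleneck to compute. Under this simplified model, HBM bandwidth and capacity set the latency floor, while compute density sets how far batching can raise throughput.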

Cloud AI products comply with our SLA policies. They may offer different latency or availability guarantees from other Google Cloud services.