Enables teams to reduce complex technical document research from weeks to minutes

Creates a context layer platform that delivers high-quality, trustworthy, and low-hallucination AI applications

Ranked #1 on Google’s FACTS leaderboard for grounded language models

Accelerates time to value with exceptional out-of-the-box performance achieved by fine-tuning Llama models

Scales dynamically on Google Cloud to support the entire AI pipeline from ingestion to generation to evaluation

Contextual AI built a platform that delivers enterprise-grade, low-hallucination AI by fine-tuning Llama models on Vertex AI.

Building a grounded foundation for enterprise AI

Advancements in generative AI have businesses buzzing about how they can use AI technology to eliminate manual processes, work faster, and deliver more value to customers. But companies can hit a major roadblock: hallucinations.

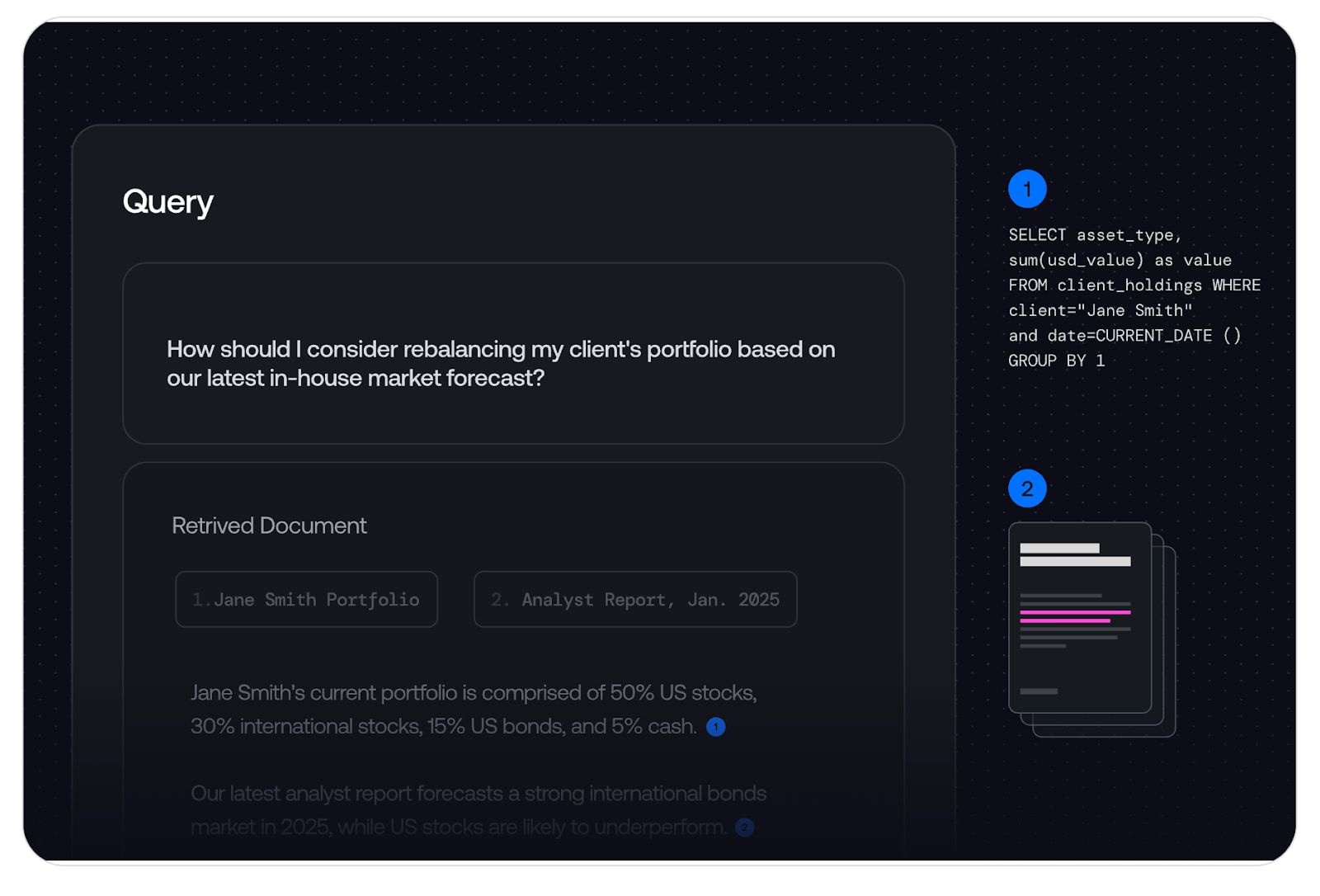

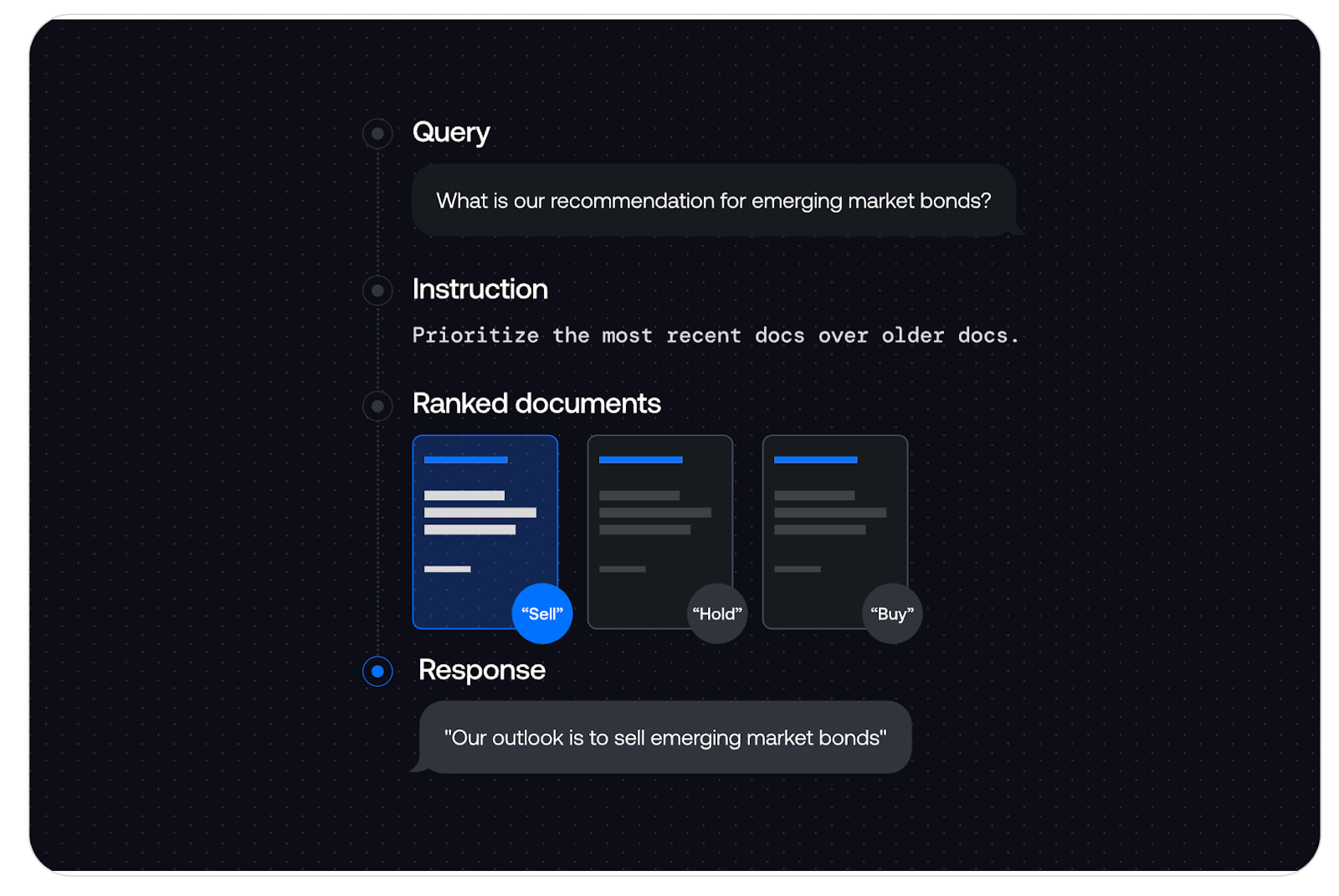

Douwe Kiela, one of the original pioneers of retrieval-augmented generation (RAG), decided to address this challenge by founding Contextual AI in June 2023. The company’s platform uses specialized RAG agents to reduce hallucinations, enabling highly accurate and trustworthy AI applications.

Rather than building its models from scratch, Contextual AI chose to fine-tune open-source models for its platform. This allows developers and researchers to focus on grounding and eliminating hallucinations instead of spending resources on building a base model.

After extensive trials and evaluations, the team determined that Llama models offered the best performance in terms of groundedness—or the lack of hallucinations—across various use cases. Llama 3.1 8B proved to be especially responsive to fine-tuning, delivering excellent accuracy, groundedness, and cost-performance.

Google Cloud made it easier to build, scale, and manage AI infrastructure capable of supporting high-value enterprise AI use cases, particularly for building agents that deliver real value fast. We’ve been proud to be a strategic partner of Google Cloud since the beginning.

Douwe Kiela

CEO and co-founder

Contextual AI needed robust and highly scalable infrastructure to deliver excellent performance for businesses out of the box. The team chose Google Cloud as its primary provider due to its high-performance GPUs and experience as an AI leader. With Llama and Google Cloud working together, Contextual AI achieved the highest performance on the FACTS benchmark for hallucination-resistant, factual, and grounded results.

Accelerating time to value for enterprises

Contextual AI developed its flagship Grounded Language Model (GLM) on Llama 3.1 70B. While the company originally thought it might need to invest resources in building a model from scratch, working with Llama allowed it to realign its strategy and focus on mid-training and post-training. The result is state-of-the-art, business-ready models in less time.

Llama also serves as the foundation for other models in the Contextual AI platform, including reward models such as LMUnit, which evaluates how well AI responses satisfy specific criteria, and the Groundedness Reward Model, a real-time fact-checking model that reviews AI responses for potential hallucinations.

“We were able to take the experience from building our other models on Llama and use them as primordial for the reinforcement learning for our Grounded Language Model,” says William Berrios, Researcher at Contextual AI.

"A big reason we like Llama models is how effectively they can be fine-tuned for reward modeling, according to both the broader literature and our own experiments," says Rajan Vivek, Researcher at Contextual AI. "This enables us to optimize our language models for fine-grained customer preferences and use-cases."

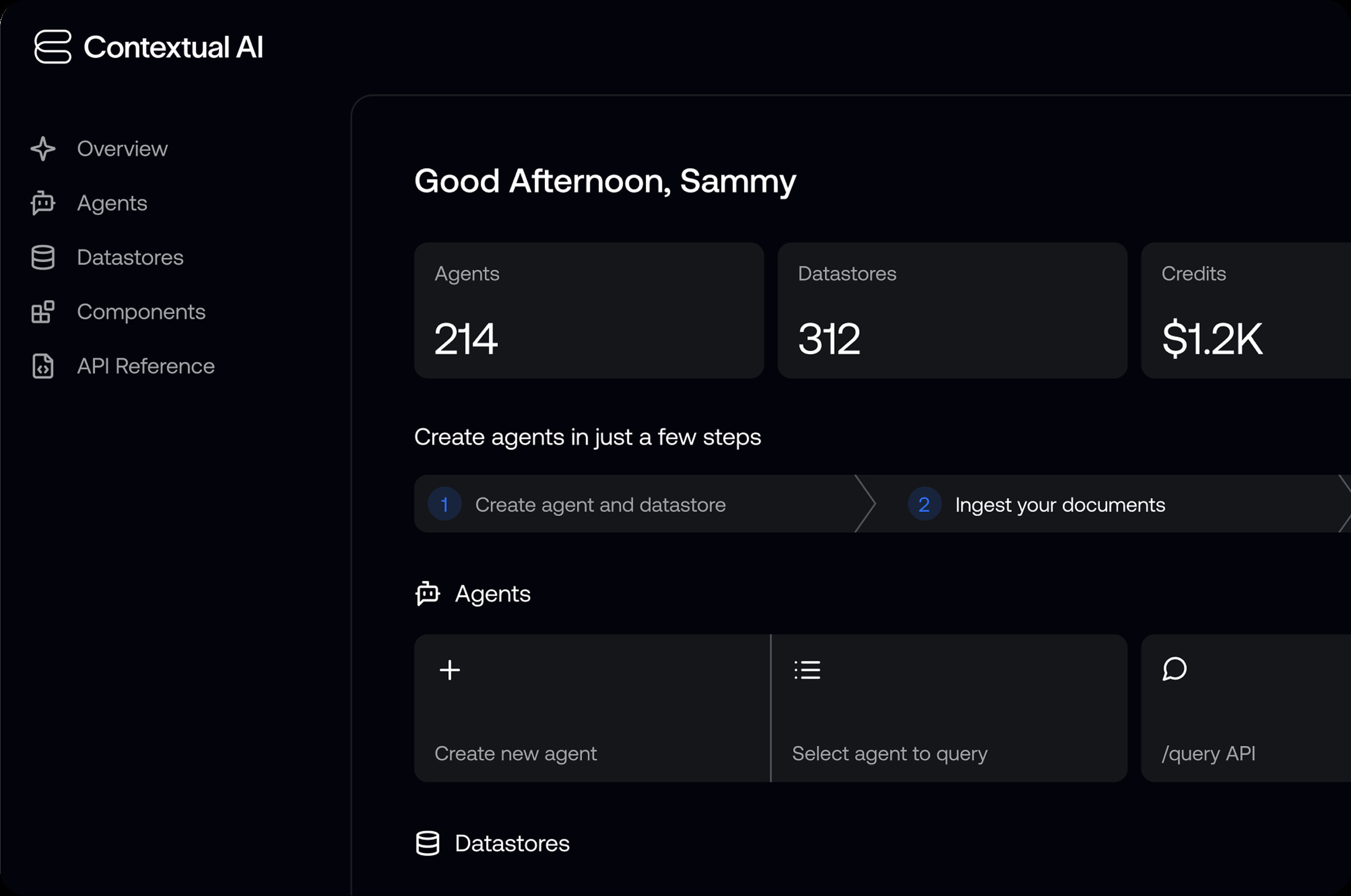

When these models work together as a single unified system, they deliver high levels of accuracy through a modular approach. Customers can use the end-to-end platform as is, with no coding required, or they can use the individual components through the interface and APIs to customize their own system to their needs.

The work we've done is a great example of open source’s value. You can take a great starting point like Llama, and with the right specialization, make it the best model for a specific use case.

Douwe Kiela

CEO and co-founder

Customers ranging from small startups to global enterprises have already discovered the power of Contextual AI for document-heavy and knowledge-intensive applications. Companies use the platform to sift through complex technical documents, analyze financial data to create financial outlooks, and automatically resolve IT support questions.

“Contextual AI is a fantastic example of how partners can accelerate innovation on Google Cloud,” says Shikhar Mathur, Global Partner Development Manager - Gen AI, at Google Cloud. “By leveraging foundation models like Llama 3.1 70B on Google Cloud, they can focus their expertise on creating value-added layers. This is exactly what we aim to enable: helping our partners build and deploy trusted, enterprise-grade AI solutions faster for customers.”

Enabling a new class of AI applications with Google Cloud

Contextual AI runs its entire AI pipeline exclusively on Google Cloud, providing models and services for everything from data ingestion and retrieval to reranking, grounded generation, and evaluation. Ingest components use the latest Gemini 2.5 Flash and Pro for fast, accurate data ingestion. Vertex AI supports inference at the context layer, incorporating frontier models that add specialized intelligence when fine-tuning models for specific verticals.

Most importantly, Google Cloud is highly scalable with high-performance GPUs, providing the computational power needed for fine-tuning Llama models. High throughput and low latency accelerate training and performance. With dynamic scaling, teams can spend less time worrying about infrastructure and more time refining models that help customers do more with their data.

For enterprises with massive volumes of highly technical and often multimodal documents, Contextual AI’s platform delivers verifiable answers quickly. This allows them to automate complex processes and empower their teams with a trusted research assistant.

By combining the innovation of Llama models with the power and scale of Google Cloud, Contextual AI is delivering on the promise of enterprise-grade AI: one that is not only powerful but also trustworthy.

Humans will always be a part of the loop, but with Contextual AI models acting as their trusted research assistant, they can better focus on bringing their unique human creativity and expertise to their jobs.

Mike Klaczynski

Head of Partnerships, Contextual AI

Contextual AI provides a unified context layer that bridges the gap between enterprise data and AI agents, accelerating AI development while delivering exceptional accuracy. Founded by the pioneers of RAG, Contextual AI’s mission is to be the state-of-the-art platform for people to build verticalized gen AI apps on top of their data.

Industry: Technology

Location: United States

Products: Google Cloud, Llama, Vertex AI, Google Kubernetes Engine

About Google Cloud partner — Meta

Llama, from technology company Meta, is a collection of base models, research tools, and programs that enable the next wave of innovation.