25% to 35% reduction in Mean Time to Recovery (MTTR)

Vertex AI powers agents including "Ops Swarmer," an agentic system that reasons, plans, and assists engineers directly within their chat workflows

Allows BMC Helix's customers, including financial services companies and telcos, to securely deploy custom, fine-tuned models in their own cloud environments through Vertex AI

Simplified deployment, switching to new models within one minor release

Delivered efficient fine-tuning on a single GPU

BMC Helix uses autonomous AI agents on Vertex AI to help enterprise IT move beyond delivering simple reliability and toward unlocking new possibilities through Service Operations.

Keeping mission-critical IT environments thriving

A key part of our value proposition is that customers can start using agentic AI to enhance Service Operations out-of-the-box. With our use of Llama models on Vertex AI, customers can deploy to the cloud and enhance ServiceOps right away.

Jake Adger

Head of Marketing, BMC Helix

Technology lies at the center of nearly every service and customer interaction today, especially in industries like financial services, public sector, and telecommunications. One slow network can impact the entire business and its customers.

BMC Helix specializes in providing IT service management and operations (ServiceOps) solutions to organizations where IT service is mission-critical. BMC Helix stands out through its early investment in AI innovation. The AI-enabled platform starts by aggregating machine, application, and infrastructure data to provide visibility into the IT environment and spot issues early. But then it goes a step further, analyzing human actions such as ticket resolutions to suggest action plans. Instead of just sounding a generic alert, the BMC Helix platform can tell the IT team that a specific machine needs patching to maintain SLAs.

By shifting day-to-day reliability to autonomous AI agents, BMC Helix frees IT teams from much of the reactive operations work. That shift lets IT partner with the business on an AI-first operating model.

"We set our bar high, creating AI that goes beyond just answering questions to deliver actionable plans that customers can trust," says Erhan Giral, head of the AI Innovation Team at BMC Helix. "The only way we could get that level of reasoning was to fine-tune a large language model (LLM) to fully understand how observability data fits together."

While BMC Helix had applied machine learning techniques to root cause analysis for many years, the company needed a more advanced LLM to deliver intelligent, automated support for customers. Llama stood out for its high quality and efficiency, all in a cost-effective open-source model that provides clarity into how customers' sensitive data is accessed and used.

Llama models allow BMC Helix to fine-tune for various domain-specific analysis and planning tasks without causing any regressions or knowledge collapse, thanks to the supported Mixture of Experts architecture. The models are trained on a tremendous amount of data, including ticket resolution data spanning decades. The outputs include insights for IT and AI agents that can cover a variety of use cases.

By running Llama models on Vertex AI, BMC Helix customers get a robust, agentic framework for ServiceOps that's ready to be deployed to their cloud infrastructure.

BMC Helix was an early adopter of these approaches and has gained an edge on the competition as a result.

Delivering intelligent reasoning with fine-tuning

Observability data typically comes in many different formats and at high volumes, making it difficult for LLMs to grasp a full picture of IT environments.

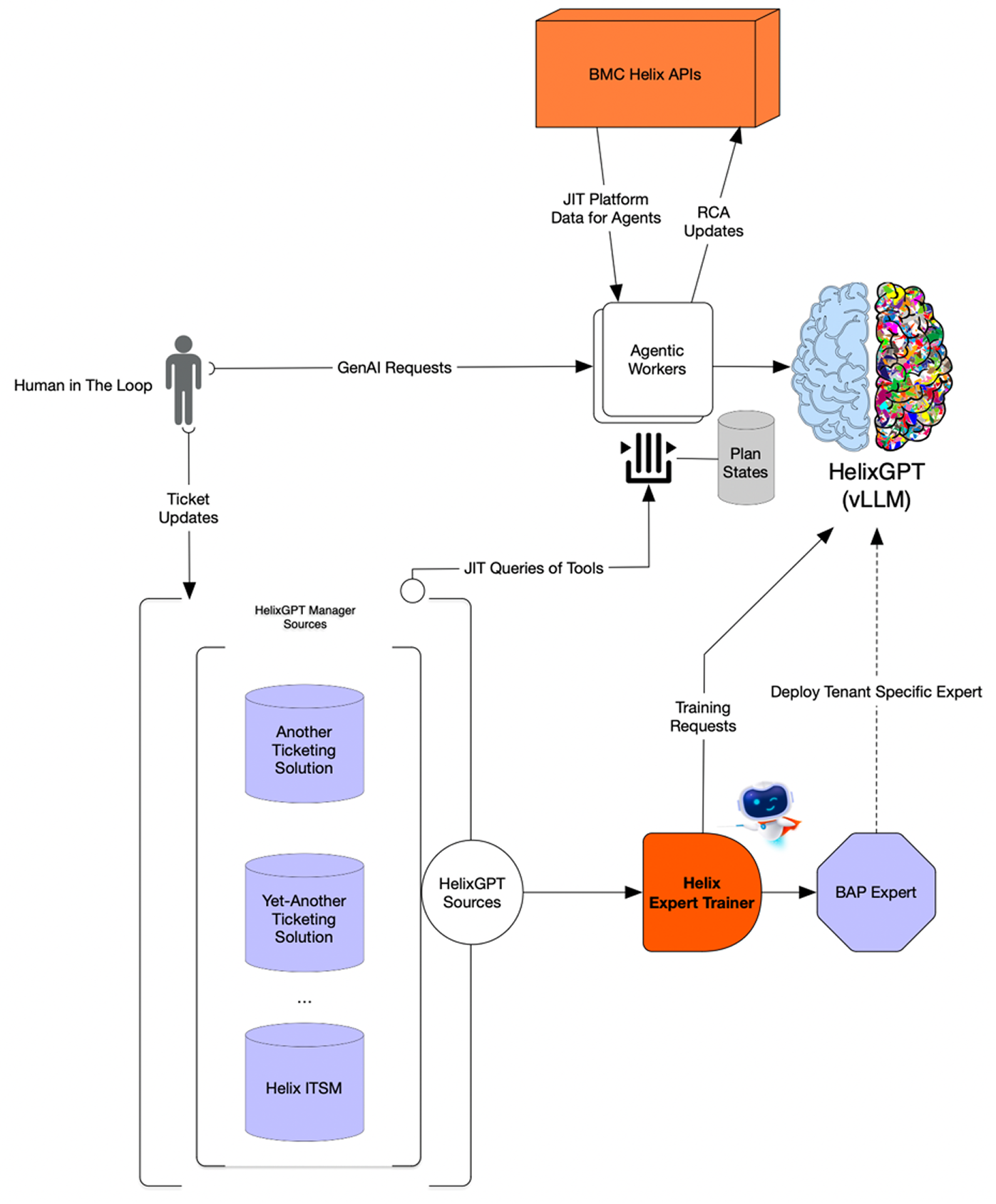

BMC Helix's AI agents rely on HelixGPT, the company's gen AI service capabilities, which are based on a fine-tuned version of Llama 3.1 8B. "Meta doesn't just provide a great base model with open weights and biases, but also amazing tooling that was very helpful when starting to fine-tune," says Giral.

Llama models are lightweight and efficient, which allowed the AI team to implement a customized Mixture of Experts (MoE) architecture on a single GPU.

I've always considered Google Cloud to be a leader in the AI space. With the Vertex AI team by our side, we accelerated deployment and started fine-tuning with Llama quickly.

Erhan Giral

Head of the AI Innovation Team, BMC Helix

Using this approach, the team fine-tuned the model to understand the shapes of observability data, and therefore understand how it all fits together in the IT environment.
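To make the Mixture of Experts idea concrete, here is a minimal, generic sketch of top-k expert routing in plain Python. It is not BMC Helix's implementation, which the article does not describe. The function names, the toy experts, and the router logits are all hypothetical and chosen only for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their gate weights to sum to 1."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, router_logits, k=2):
    """Run only the selected experts and combine their outputs using the gate weights."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))


# Hypothetical toy experts; in a real model each expert would be a sub-network.
toy_experts = [lambda x: x + 1.0, lambda x: x * 2.0, lambda x: -x]
toy_logits = [2.0, 2.0, -5.0]  # assumed router scores for a single input
combined = moe_forward(3.0, toy_experts, toy_logits, k=2)
```

Because only the selected experts run for each input, this style of routing keeps compute per input low, which is one reason such designs can fit on modest hardware.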

Large enterprises tend to have more than one observability solution and can suffer from alert fatigue, with many uncorrelated alerts arriving from disparate systems. Using Vertex AI and Llama models, BMC Helix aggregates the data from all solutions to consolidate alerts into a single situation.
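As a rough illustration of consolidating alerts into situations, the sketch below groups alerts that concern the same resource and arrive close together in time. This is a simple rule-based stand-in, not the model-driven correlation the article describes. The `Alert` and `Situation` types, the field names, and the five-minute window are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    source: str       # hypothetical observability tool that raised the alert
    resource: str     # host or service the alert refers to
    timestamp: float  # seconds since some reference point
    message: str


@dataclass
class Situation:
    resource: str
    alerts: list = field(default_factory=list)


def consolidate(alerts, window_seconds=300.0):
    """Group alerts on the same resource when each arrives within
    window_seconds of the previous alert in that group."""
    situations = []
    latest_by_resource = {}  # resource -> most recent Situation for it
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        current = latest_by_resource.get(alert.resource)
        if current and alert.timestamp - current.alerts[-1].timestamp <= window_seconds:
            current.alerts.append(alert)
        else:
            current = Situation(resource=alert.resource, alerts=[alert])
            situations.append(current)
            latest_by_resource[alert.resource] = current
    return situations


# Hypothetical alerts from two tools.
sample_alerts = [
    Alert("tool-a", "db-01", 0.0, "CPU high"),
    Alert("tool-b", "db-01", 120.0, "Query latency high"),
    Alert("tool-a", "web-02", 130.0, "HTTP 5xx spike"),
    Alert("tool-b", "db-01", 1000.0, "Disk nearly full"),
]
sample_situations = consolidate(sample_alerts)
```

With these sample inputs, the first two `db-01` alerts from different tools land in one situation, while the later `db-01` alert falls outside the window and starts a new one.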

BMC Helix also trains its planning agent within HelixGPT using Group Relative Policy Optimization (GRPO) on past ticket worklogs and incident conversations. This allows the model to learn how human experts think and plan for diagnostics, making its insights highly actionable and specific to the customer's environment. The result is more intelligent insights and the ability for agents to take action proactively.
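The core idea in GRPO is that the model samples a group of candidate responses for the same prompt, scores each one, and judges every response relative to the rest of its group rather than against a separately learned value estimate. The sketch below shows only that group-relative normalization step. The reward values are hypothetical, since the article does not say how BMC Helix scores candidate plans, and using the population standard deviation is a choice made for this example.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the mean and standard deviation of its group.

    rewards: scores for several candidate responses to the same prompt.
    eps: small constant that avoids division by zero when all rewards are equal.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical scores for four candidate diagnostic plans for one incident.
example_rewards = [1.0, 0.0, 0.0, 1.0]
example_advantages = group_relative_advantages(example_rewards)
```

Responses that score above their group's average receive a positive advantage and are reinforced, while below-average responses receive a negative one. If every candidate scores the same, all advantages are zero and the group contributes no learning signal.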

BMC Helix chose to work with Llama models on Vertex AI for its simplicity and speed of deployment. Giral trusted Google Cloud as a leader in the AI space, with scalable, high-performance infrastructure for efficient processing. Vertex AI is easy to use and highly customizable, allowing the AI team to run its custom, fine-tuned model—including distillation and inference—without the complexity of managing GPUs and networks. This simplicity helped BMC Helix migrate its product to Llama models on Vertex AI in just one minor release. And if the BMC Helix team has any questions about Llama models, they can always turn to the open-source community of developers.

Faster resolution times with agentic AI

Since starting to work with Llama models on Vertex AI, BMC Helix has seen significant positive impacts on customers' mean time to identification (MTTI) and mean time to recovery (MTTR). With more relevant insights conveyed to teams faster, customers have seen MTTR fall by 25% to 35%. This means greater uptime for customers dealing with mission-critical IT environments.

Many customers prefer deploying the BMC Helix platform in their own environments to better control sensitive data and comply with privacy and security requirements. Working through Vertex AI, customers can easily set up the HelixGPT model in their own Google Cloud infrastructure to take full advantage of the ServiceOps platform.

The HelixGPT agents are also integrated directly into applications such as Slack and Teams, bringing AI to customers' users in the tools they already work in and eliminating the need for staff to learn yet another tool.

"The next generation of AI isn't just about answering questions–it's about reasoning and taking action," says Shikhar Mathur, Global Partner Dev Manager, Gen AI ISVs, Google Cloud. "We are thrilled to see BMC Helix using Llama models on Vertex AI to drive this innovation."

BMC Helix aims to deliver increasingly powerful AI automation for production systems. Instead of just recommending next steps for IT teams, AI agents are running inspections and tests to gather deeper information, and even automatically patching known vulnerabilities. With faster and more intelligent responsiveness, BMC Helix can free up time for IT staff to focus on high-value work that keeps mission-critical technology flowing.

BMC Helix augments work across enterprise IT Service Operations with its AI-enabled platform that anticipates needs, automates solutions, and accelerates outcomes.

Industry: Technology

Location: United States

Products: Vertex AI

About Google Cloud partner — Meta

Llama, from technology company Meta, is a collection of base models, research tools, and programs that enable the next wave of innovation.