75% reduction in data storage and infrastructure costs

Model evaluation time decreased from 24 hours to 20 minutes

Data processing capacity increased from a few nodes and GPUs to thousands of nodes and GPUs/TPUs, accelerating research and time to market

Audio data volume increased from 1M+ to 12M+ hours, and from 100 TB to over 1 PB

Over 10x increase in data storage capacity with Google Cloud

Managing the data processing pipelines and orchestration frameworks for larger speech-to-text models was overwhelming, and we didn’t have a compute cluster or data storage capacity on-premises to support the volume and diversity of research we wanted to do.

Ahmed Etefy

Lead Engineer, Data Infrastructure Team, AssemblyAI

At AssemblyAI, there was no lack of ingenuity — just a lack of computing resources.

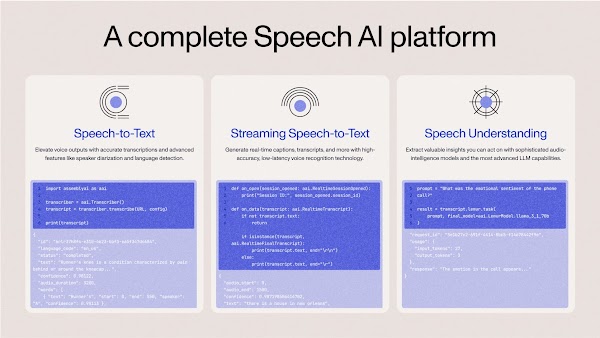

The artificial intelligence company builds speech-to-text and speech understanding models, accessible through their API, to help product and development teams turn speech into meaning. Going far beyond speech-to-text transcription, AssemblyAI solves complex problems in audio intelligence like summarization, PII redaction, and sentiment analysis.

"We're democratizing access to AI-based speech understanding and unlocking new use cases for conversational intelligence by training and packaging speech-to-text models," says Ahmed Etefy, the lead engineer on AssemblyAI's data infrastructure team in Vienna. "We provide building blocks for businesses to integrate speech AI into their products. For Conversational Intelligence companies, this means building features to help their customers better understand and serve their own customers in turn."

But when AssemblyAI's growing research team, which Etefy's team supports, embarked on their next generation of AI speech models, they hit a wall that they literally could not scale.

All of their model training, and much of their data storage, was handled on-premises. That worked for their initial Conformer-2 model release, which was trained on 1.1 million hours of audio stored in hundreds of terabytes of data. By comparison, their new Universal models would be trained on 12.5 million hours of audio, requiring 10 times the storage capacity and processing power. And because AI model development is exploratory by nature, AssemblyAI's research team would need to run many more experiments simultaneously.

While on-prem training and data storage were expensive, the most serious problem was the digital traffic jam.

"Managing the data processing pipelines and orchestration frameworks for training our new Universal models was overwhelming, and we didn't have the sufficient compute cluster or data storage capacity to support the volume and diversity of research we were doing," Etefy reports, noting that their previous model had been trained on a relatively small, in-house GPU cluster. "Our ability to innovate was stifled not by a lack of ideas, but by a lack of computing power and efficiency."

A smooth, cost-saving migration from on-prem storage and processing to Google Cloud

AssemblyAI was already storing inactive data in Google Cloud because of their limited capacity on-premises. The decision to move all the data and processing for training AssemblyAI's models to Google Cloud turned out to be an easy one.

"When we analyzed the cost of on-prem data storage for training alone, it was four times the price of storing it on Google Cloud," Etefy reports, "but we knew we'd derive even more value from scalability."

In only a month, his team built a working proof of concept for an end-to-end, Google Cloud–based system for research, compute, and data. It showed that the migration would reduce storage, data transfer, and infrastructure management costs; accelerate training; and give them room to scale exponentially. "It was an easy sell to the leadership team," he adds, "and getting up and running on Google Cloud was incredibly fast and easy because things worked the way they’re supposed to right from the very start."


Google Cloud now hosts AssemblyAI's AI lakehouse, which holds petabyte-scale structured and unstructured data in BigQuery, Bigtable, and Cloud Storage, with Looker used to visualize that data. Their Kubernetes-based orchestration framework, which prepares large volumes of data for training, now runs on Google Cloud as well, as does their entire training infrastructure, including tensor processing units (TPUs). This lets AssemblyAI train on millions of hours of audio efficiently by taking advantage of large-scale parallel processing.

Empowering AI researchers to innovate faster


Etefy aimed to empower AssemblyAI's researchers by reducing their reliance on the engineering team to build processing pipelines, allowing each team to focus on its own strengths. Using Cloud Composer, AssemblyAI built an orchestration framework that makes it easy for researchers to write their own scalable pipeline code.

"Now they can run experiments autonomously, and we just spin up more clusters as we need them, so they can run as many as they want," Etefy says. With Vertex AI, model evaluations that used to take an entire day now take 20 minutes. "If we run a hundred jobs on Vertex AI and then go grab lunch, by the time we're back, the evaluations are complete."

And as new chips and hardware upgrades become available on Google Cloud, AssemblyAI can adopt them with minimal effort. "We flip a switch and we're already on the newest machine available. We never could have done that on-prem," he adds.

Etefy also appreciates the audit, logging, and data lineage offerings in Google Cloud. "We always know what data went into training which model, which is crucial for customers navigating data privacy or ownership compliance requirements," he notes.

"Moving to Google Cloud opened the floodgates for innovation, enabling us to run many more experiments simultaneously, and we were able to release our following model, Universal-2, in less than six months after completing our migration to Google Cloud," he reports proudly.

And the Google Cloud support team continues to buoy his work. "They remove any blockers we encounter quickly and even help us get experiments up and running," he says. "Google Cloud is a real partner — there's always someone there I can go to for ideas."

As a result, AssemblyAI is using Google Cloud to train speech AI models that solve specific, complex problems, faster and at lower cost. "We can now train larger models, targeting billions of parameters that require petabytes of data and pods of TPUs," Etefy reports. "That means we can get the best speech understanding models to market quickly and efficiently, and our customers can provide better products for their own clients sooner."

AssemblyAI is a Speech AI company focused on building new state-of-the-art AI models that can transcribe and understand human speech.

Industries: Startup, Technology

Location: United States

Products: Google Cloud, Vertex AI, BigQuery, Bigtable, Looker, Cloud Composer, Google Kubernetes Engine, Dataproc, Dataflow, Cloud Storage