Vertex AI Platform

Innovate faster with enterprise-ready AI, enhanced by Gemini models

Vertex AI is a fully-managed, unified AI development platform for building and using generative AI. Access and utilize Vertex AI Studio, Agent Builder, and 200+ foundation models.

New customers get up to $300 in free credits to try Vertex AI and other Google Cloud products.

Features

Gemini, Google’s most capable multimodal models

Vertex AI offers access to the latest Gemini models from Google. Gemini is capable of understanding virtually any input, combining different types of information, and generating almost any output. Prompt and test Gemini in Vertex AI Studio, using text, images, video, or code. Using Gemini’s advanced reasoning and state-of-the-art generation capabilities, developers can try sample prompts for extracting text from images, converting image text to JSON, and even generate answers about uploaded images to build next-gen AI applications.

200+ generative AI models and tools

Choose from the widest variety of models with first-party (Gemini, Imagen, Chirp, Veo), third-party (Anthropic's Claude Model Family), and open models (Gemma, Llama 3.2) in Model Garden. Use extensions to enable models to retrieve real-time information and trigger actions. Customize models to your use case with a variety of tuning options for Google's text, image, or code models.

Generative AI models and fully managed tools make it easy to prototype, customize, and integrate and deploy them into applications.

Open and integrated AI platform

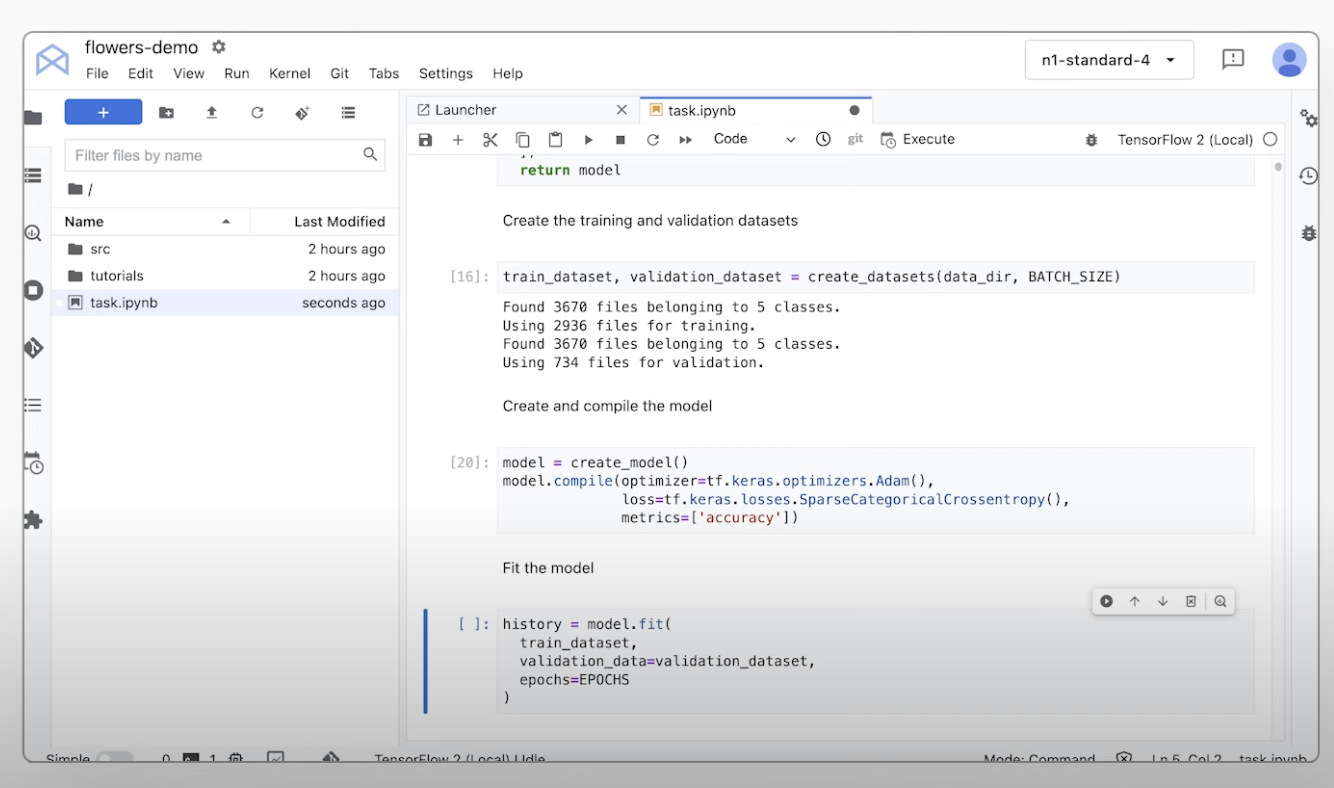

Data scientists can move faster with Vertex AI Platform's tools for training, tuning, and deploying ML models.

Vertex AI notebooks, including your choice of Colab Enterprise or Workbench, are natively integrated with BigQuery providing a single surface across all data and AI workloads.

Vertex AI Training and Prediction help you reduce training time and deploy models to production easily with your choice of open source frameworks and optimized AI infrastructure.

MLOps for predictive and generative AI

Vertex AI Platform provides purpose-built MLOps tools for data scientists and ML engineers to automate, standardize, and manage ML projects.

Modular tools help you collaborate across teams and improve models throughout the entire development lifecycle—identify the best model for a use case with Vertex AI Evaluation, orchestrate workflows with Vertex AI Pipelines, manage any model with Model Registry, serve, share, and reuse ML features with Feature Store, and monitor models for input skew and drift.

Agent Builder

Vertex AI Agent Builder enables developers to easily build and deploy enterprise ready generative AI experiences. It provides the convenience of a no code agent builder console alongside powerful grounding, orchestration, and customization capabilities. With Vertex AI Agent Builder developers can quickly create a range of generative AI agents and applications grounded in their organization’s data.

How It Works

Vertex AI provides several options for model training and deployment:

- Generative AI gives you access to large generative AI models, including Gemini 2.5, so you can evaluate, tune, and deploy them for use in your AI-powered applications.

- Model Garden lets you discover, test, customize, and deploy Vertex AI and select open-source (OSS) models and assets.

- Custom training gives you complete control over the training process, including using your preferred ML framework, writing your own training code, and choosing hyperparameter tuning options.

Vertex AI provides several options for model training and deployment:

- Generative AI gives you access to large generative AI models, including Gemini 2.5, so you can evaluate, tune, and deploy them for use in your AI-powered applications.

- Model Garden lets you discover, test, customize, and deploy Vertex AI and select open-source (OSS) models and assets.

- Custom training gives you complete control over the training process, including using your preferred ML framework, writing your own training code, and choosing hyperparameter tuning options.

Common Uses

Build with Gemini

Access Gemini models via the Gemini API in Google Cloud Vertex AI

- Python

- JavaScript

- Java

- Go

- Curl

Code sample

Access Gemini models via the Gemini API in Google Cloud Vertex AI

- Python

- JavaScript

- Java

- Go

- Curl

Generative AI in applications

Get an introduction to generative AI on Vertex AI

Get an introduction to generative AI on Vertex AI

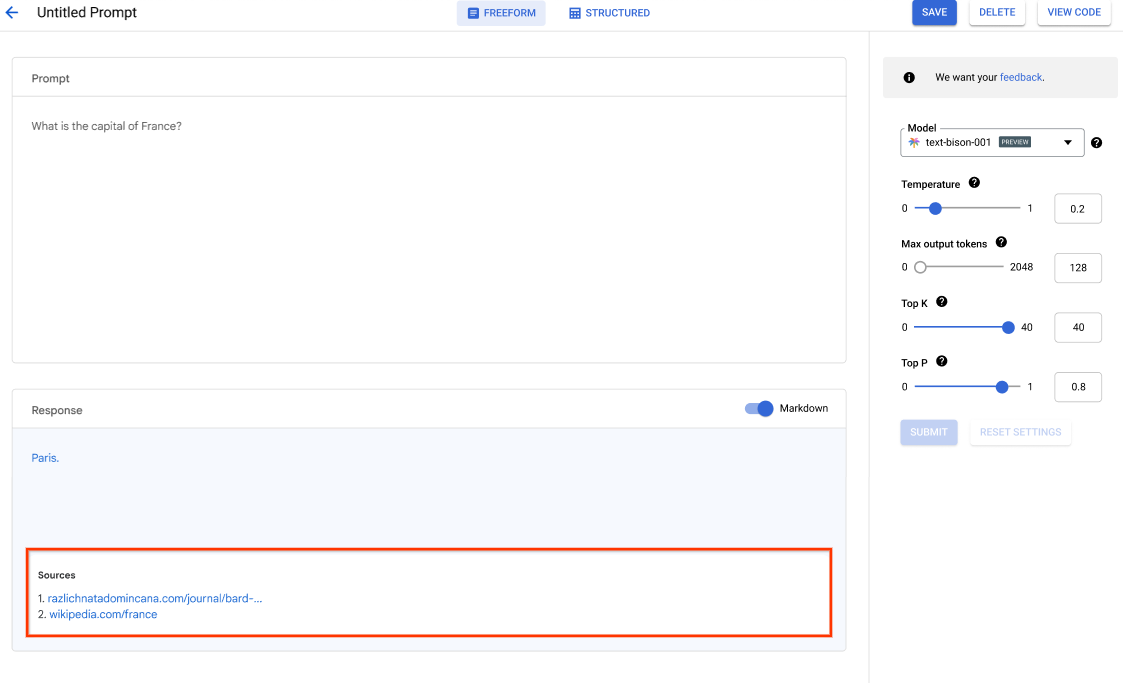

Vertex AI Studio offers a Google Cloud console tool for rapidly prototyping and testing generative AI models. Learn how you can use Generative AI Studio to test models using prompt samples, design and save prompts, tune a foundation model, and convert between speech and text.

See how to tune LLMs in Vertex AI Studio

Tutorials, quickstarts, & labs

Get an introduction to generative AI on Vertex AI

Get an introduction to generative AI on Vertex AI

Vertex AI Studio offers a Google Cloud console tool for rapidly prototyping and testing generative AI models. Learn how you can use Generative AI Studio to test models using prompt samples, design and save prompts, tune a foundation model, and convert between speech and text.

See how to tune LLMs in Vertex AI Studio

Extract, summarize, and classify data

Use gen AI for summarization, classification, and extraction

Use gen AI for summarization, classification, and extraction

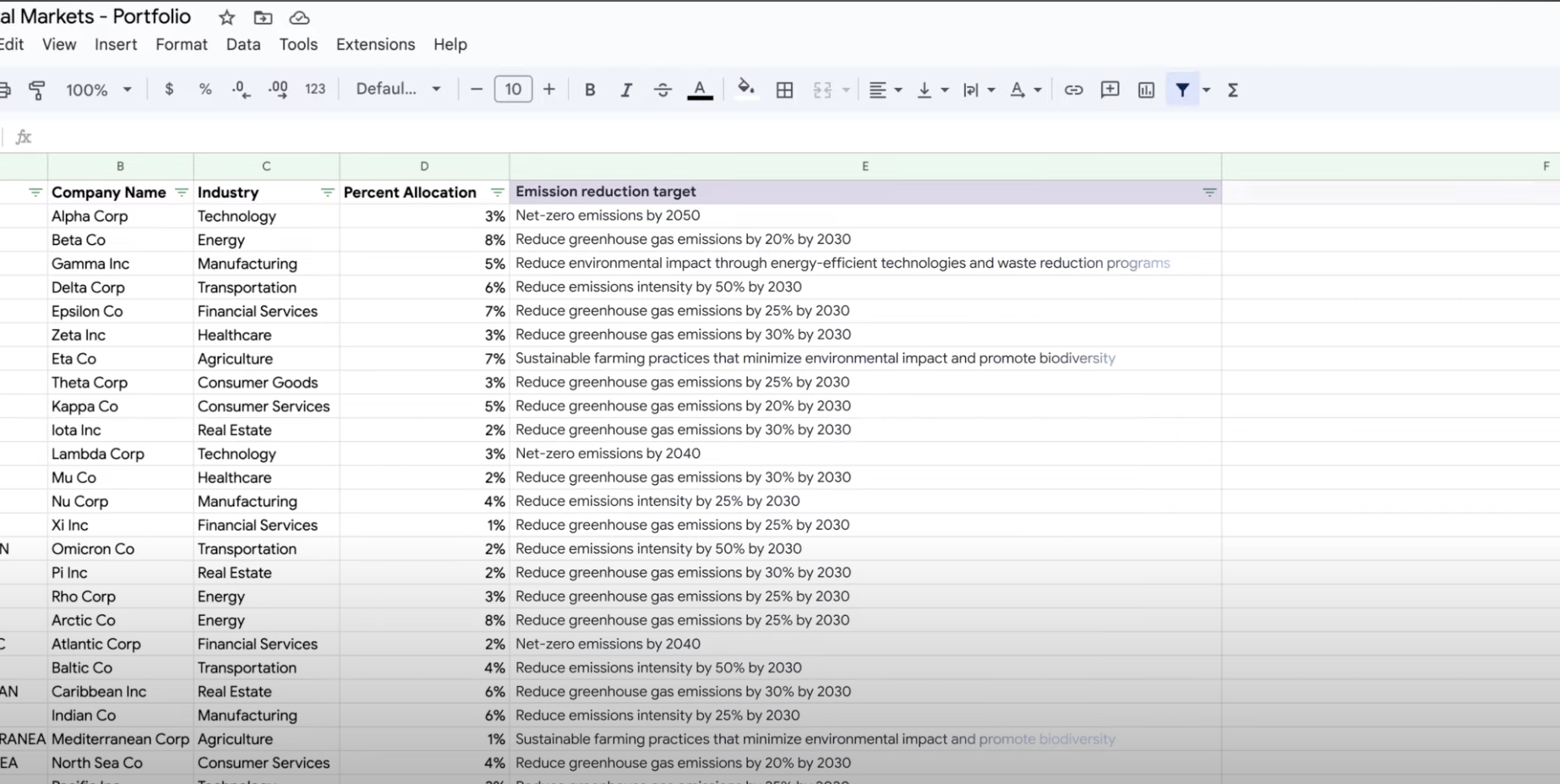

Learn how to create text prompts for handling any number of tasks with Vertex AI’s generative AI support. Some of the most common tasks are classification, summarization, and extraction. Gemini on Vertex AI lets you design prompts with flexibility in terms of their structure and format.

Tutorials, quickstarts, & labs

Use gen AI for summarization, classification, and extraction

Use gen AI for summarization, classification, and extraction

Learn how to create text prompts for handling any number of tasks with Vertex AI’s generative AI support. Some of the most common tasks are classification, summarization, and extraction. Gemini on Vertex AI lets you design prompts with flexibility in terms of their structure and format.

Train custom ML models

Custom ML training overview and documentation

Custom ML training overview and documentation

Get an overview of the custom training workflow in Vertex AI, the benefits of custom training, and the various training options that are available. This page also details every step involved in the ML training workflow from preparing data to predictions.

Get a video walkthrough of the steps required to train custom models on Vertex AI.

Tutorials, quickstarts, & labs

Custom ML training overview and documentation

Custom ML training overview and documentation

Get an overview of the custom training workflow in Vertex AI, the benefits of custom training, and the various training options that are available. This page also details every step involved in the ML training workflow from preparing data to predictions.

Get a video walkthrough of the steps required to train custom models on Vertex AI.

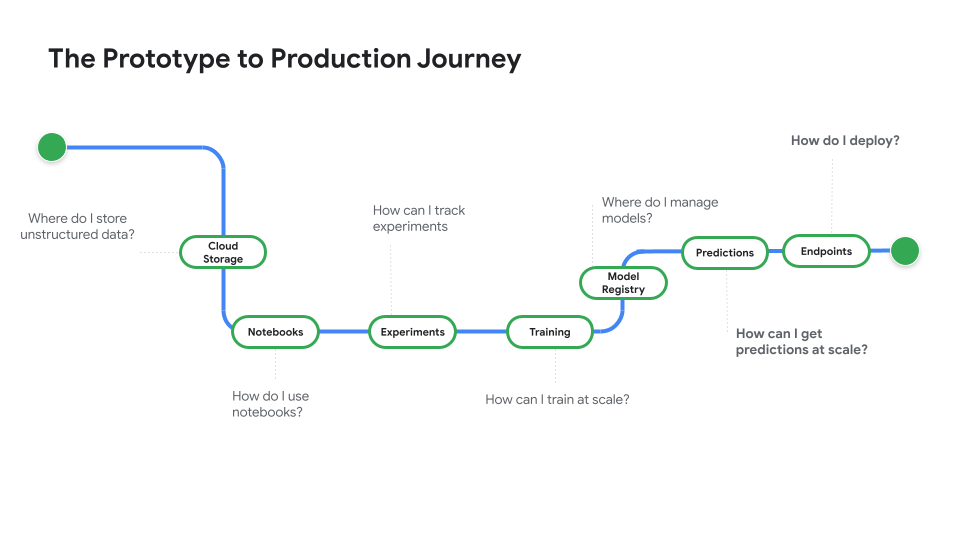

Deploy a model for production use

Deploy for batch or online predictions

Deploy for batch or online predictions

When you're ready to use your model to solve a real-world problem, register your model to Vertex AI Model Registry and use the Vertex AI prediction service for batch and online predictions.

Watch Prototype to Production, a video series that takes you from notebook code to a deployed model.

Tutorials, quickstarts, & labs

Deploy for batch or online predictions

Deploy for batch or online predictions

When you're ready to use your model to solve a real-world problem, register your model to Vertex AI Model Registry and use the Vertex AI prediction service for batch and online predictions.

Watch Prototype to Production, a video series that takes you from notebook code to a deployed model.

Pricing

| How Vertex AI pricing works | Pay for Vertex AI tools, storage, compute & Cloud resources used. New customers get $300 free credits to try Vertex AI & Google Cloud products. | |

|---|---|---|

| Tools and usage | Description | Price |

Generative AI | Imagen model for image generation Based on image input, character input, or custom training pricing. | Starting at $0.0001 |

Text, chat, and code generation Based on every 1,000 characters of input (prompt) and every 1,000 characters of output (response). | Starting at $0.0001 per 1,000 characters | |

AutoML models | Image data training, deployment, and prediction Based on time to train per node hour, which reflects resource usage, and if for classification or object detection. | Starting at $1.375 per node hour |

Video data training and prediction Based on price per node hour and if classification, object tracking, or action recognition. | Starting at $0.462 per node hour | |

Tabular data training and prediction Based on price per node hour and if classification/regression or forecasting. Contact sales for potential discounts and pricing details. | Contact sales | |

Text data upload, training, deployment, prediction Based on hourly rates for training and prediction, pages for legacy data upload (PDF only), and text records and pages for prediction. | Starting at $0.05 per hour | |

Custom-trained models | Custom model training Based on machine type used per hour, region, and any accelerators used. Get an estimate via sales or our pricing calculator. | Contact sales |

Vertex AI notebooks | Compute and storage resources Based on the same rates as Compute Engine and Cloud Storage. | Refer to products |

Management fees In addition to the above resource usage, management fees apply based on region, instances, notebooks, and managed notebooks used. View details. | Refer to details | |

Vertex AI Pipelines | Execution and additional fees Based on execution charge, resources used, and any additional service fees. | Starting at $0.03 per pipeline run |

Vertex AI Vector Search | Serving and building costs Based on the size of your data, the amount of queries per second (QPS) you want to run, and the number of nodes you use. View example. | Refer to example |

View pricing details for all Vertex AI features and services.

How Vertex AI pricing works

Pay for Vertex AI tools, storage, compute & Cloud resources used. New customers get $300 free credits to try Vertex AI & Google Cloud products.

Generative AI

Imagen model for image generation

Based on image input, character input, or custom training pricing.

Starting at

$0.0001

Text, chat, and code generation

Based on every 1,000 characters of input (prompt) and every 1,000 characters of output (response).

Starting at

$0.0001

per 1,000 characters

AutoML models

Image data training, deployment, and prediction

Based on time to train per node hour, which reflects resource usage, and if for classification or object detection.

Starting at

$1.375

per node hour

Video data training and prediction

Based on price per node hour and if classification, object tracking, or action recognition.

Starting at

$0.462

per node hour

Tabular data training and prediction

Based on price per node hour and if classification/regression or forecasting. Contact sales for potential discounts and pricing details.

Contact sales

Text data upload, training, deployment, prediction

Based on hourly rates for training and prediction, pages for legacy data upload (PDF only), and text records and pages for prediction.

Starting at

$0.05

per hour

Custom-trained models

Custom model training

Based on machine type used per hour, region, and any accelerators used. Get an estimate via sales or our pricing calculator.

Contact sales

Vertex AI notebooks

Compute and storage resources

Based on the same rates as Compute Engine and Cloud Storage.

Refer to products

Management fees

In addition to the above resource usage, management fees apply based on region, instances, notebooks, and managed notebooks used. View details.

Refer to details

Vertex AI Pipelines

Execution and additional fees

Based on execution charge, resources used, and any additional service fees.

Starting at

$0.03

per pipeline run

Vertex AI Vector Search

Serving and building costs

Based on the size of your data, the amount of queries per second (QPS) you want to run, and the number of nodes you use. View example.

Refer to example

View pricing details for all Vertex AI features and services.

Business Case

Unlock the full potential of gen AI

"The accuracy of Google Cloud's generative AI solution and practicality of the Vertex AI Platform gives us the confidence we needed to implement this cutting-edge technology into the heart of our business and achieve our long-term goal of a zero-minute response time."

Abdol Moabery, CEO of GA Telesis

Analyst reports

TKTKT

Google named a Leader in The Forrester Wave™: AI Infrastructure Solutions, Q1 2024, receiving the highest scores of any vendor evaluated in both Current Offering and Strategy.

Google is a Leader in The Forrester Wave™: AI Foundation Models For Language, Q2 2024. Read the report.

Google named a leader in the Forrester Wave: AI/ML Platforms, Q3 2024. Learn more.