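Vertex AI Platform

Innovate faster with enterprise-ready AI, enhanced by Gemini models

Vertex AI is a fully managed, unified AI development platform for building and using generative AI. It gives you access to Vertex AI Studio, Agent Builder, and more than 200 foundation models.

New customers get up to $300 in free credits to try Vertex AI and other Google Cloud products.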

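Features

Gemini, Google's most capable multimodal models

Vertex AI offers access to Google's latest Gemini models. Gemini can understand virtually any input, combine different types of information, and generate almost any output. In Vertex AI Studio, you can prompt and test Gemini using text, images, video, or code. With Gemini's advanced reasoning and state-of-the-art generation capabilities, developers can try sample prompts for extracting text from images, converting image text to JSON, and even generating answers about uploaded images to build next-generation AI applications.

More than 200 generative AI models and tools

Model Garden offers a wide selection of models, including first-party (Gemini, Imagen, Chirp, Veo), third-party (Anthropic's Claude model family), and open models (Gemma, Llama 3.2). Extensions let models retrieve real-time information and trigger actions, and a range of tuning options lets you customize Google's text, image, or code models for your use case.

Generative AI models and fully managed tools make it easy to prototype and customize models, then integrate and deploy them into applications.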

Open and integrated AI platform

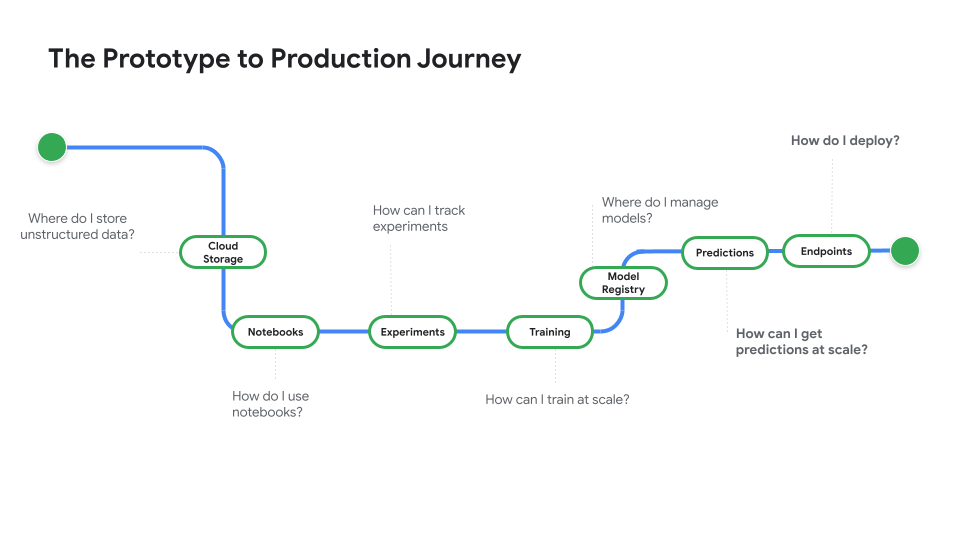

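Data scientists can move faster with Vertex AI Platform's tools for training, tuning, and deploying ML models.

Vertex AI Notebooks, with your choice of Colab Enterprise or Workbench, are natively integrated with BigQuery, providing a single surface across all data and AI workloads.

Vertex AI Training and Prediction help you reduce training time and deploy models to production, using your choice of open source frameworks and optimized AI infrastructure.

MLOps for predictive and generative AI

Vertex AI Platform provides purpose-built MLOps tools that let data scientists and ML engineers automate, standardize, and manage ML projects.

Modular tools help teams collaborate and improve models throughout the development lifecycle: identify the best model for a use case with Vertex AI Evaluation; orchestrate workflows with Vertex AI Pipelines; manage models with Model Registry; serve, share, and reuse ML features with Feature Store; and monitor models for input skew and drift.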

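Agent Builder

Vertex AI Agent Builder helps developers build and deploy enterprise-ready generative AI experiences. It provides a convenient no-code agent builder console alongside powerful grounding, orchestration, and customization capabilities. With Vertex AI Agent Builder, developers can quickly create a range of generative AI agents and applications grounded in their organization's data.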

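How it works

Vertex AI provides several options for model training and deployment:

- Generative AI gives you access to large generative AI models, including Gemini 2.5, so you can evaluate, tune, and deploy them for use in your AI-powered applications.

- Model Garden lets you discover, test, customize, and deploy Vertex AI and select open source (OSS) models and assets.

- Custom training gives you complete control over the training process, including using your preferred ML framework, writing your own training code, and choosing hyperparameter tuning options.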

Common uses

Build with Gemini

Access Gemini models through the Gemini API in Google Cloud Vertex AI

- Python

- JavaScript

- Java

- Go

- Curl
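The page lists client languages but no sample survives here, so the following is only a minimal sketch of what a request to the Gemini API in Vertex AI might look like over REST. It builds the `generateContent` URL and JSON body without sending anything. The project ID, region, and model ID are placeholder assumptions, and the helper name `build_generate_content_request` is hypothetical.

```python
import json

# Placeholder assumptions: substitute your own project, region, and model.
PROJECT_ID = "my-project"        # hypothetical project ID
LOCATION = "us-central1"         # assumed region
MODEL_ID = "gemini-2.5-flash"    # assumed model ID


def build_generate_content_request(project_id, location, model_id, prompt):
    """Return the (url, body) pair for a generateContent REST call.

    This helper is illustrative only; it does not send the request.
    """
    url = (
        f"https://{location}-aiplatform.googleapis.com/v1/"
        f"projects/{project_id}/locations/{location}/"
        f"publishers/google/models/{model_id}:generateContent"
    )
    # A single user turn containing one text part.
    body = {"contents": [{"role": "user", "parts": [{"text": prompt}]}]}
    return url, body


if __name__ == "__main__":
    url, body = build_generate_content_request(
        PROJECT_ID, LOCATION, MODEL_ID, "Describe Vertex AI in one sentence."
    )
    print(url)
    print(json.dumps(body, indent=2))
```

To actually send the request, you would POST the body to the URL with an `Authorization: Bearer` header carrying an OAuth access token, for example one obtained from `gcloud auth print-access-token`.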

Generative AI in applications

Get an introduction to generative AI on Vertex AI

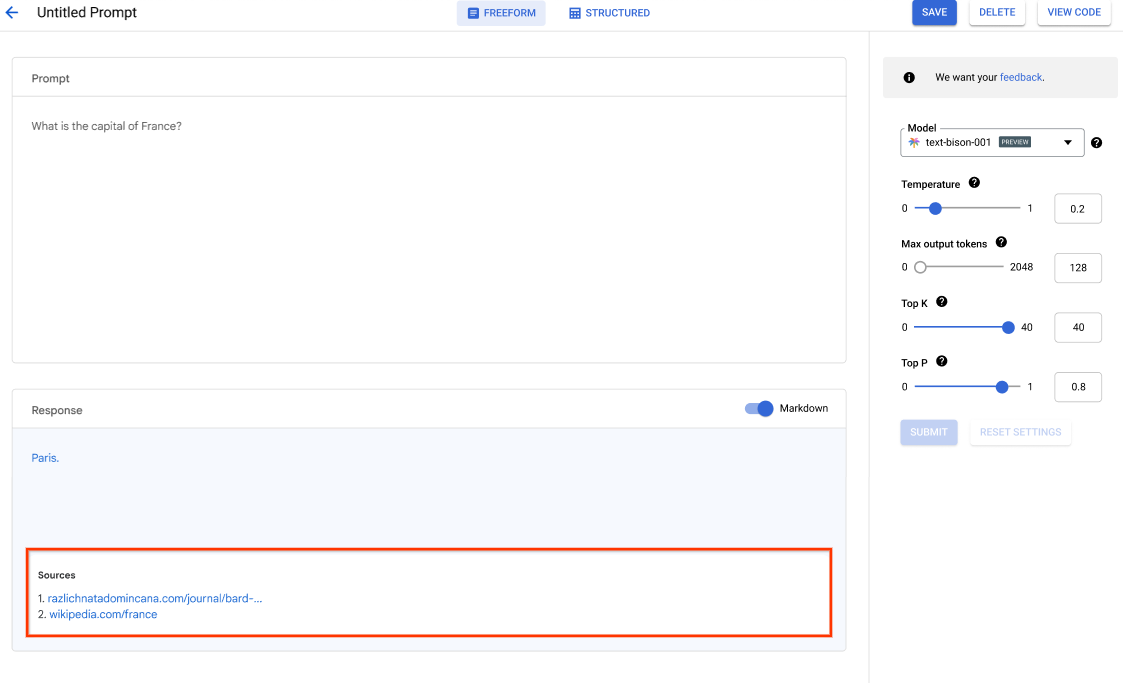

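Vertex AI Studio offers a Google Cloud console tool for rapidly prototyping and testing generative AI models. Learn how to use Vertex AI Studio to test models with prompt samples, design and save prompts, tune a foundation model, and convert between speech and text.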

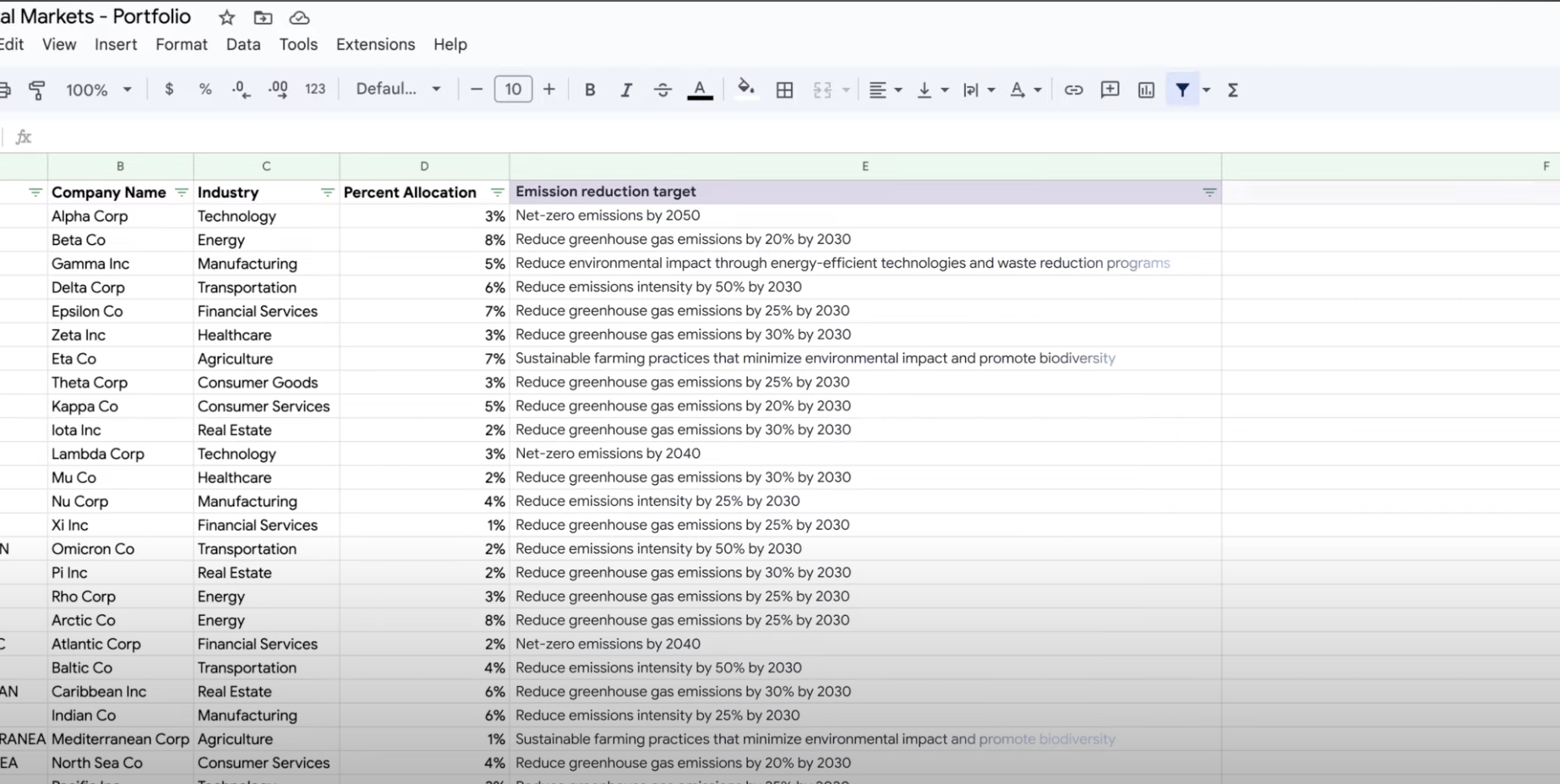

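Extract, summarize, and classify data

Use generative AI for summarization, classification, and extraction

Learn how to create text prompts for handling any number of tasks with Vertex AI's generative AI support. The most common tasks are classification, summarization, and extraction. Gemini on Vertex AI gives you flexibility in the structure and format of your prompts.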
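As an illustration of the three task types named above, here is a minimal sketch of prompt templates for classification, summarization, and extraction. The template wording and the `build_prompt` helper are assumptions made for this example, not prompts taken from the Vertex AI documentation. The resulting string is what you would pass to a model as the text prompt.

```python
# Hypothetical templates: the wording is an illustration, not an official format.
PROMPT_TEMPLATES = {
    "classify": (
        "Classify the following text into one of these categories: {labels}.\n\n"
        "Text: {text}\nCategory:"
    ),
    "summarize": (
        "Summarize the following text in one sentence.\n\n"
        "Text: {text}\nSummary:"
    ),
    "extract": (
        "Extract the following fields from the text and return them as JSON: "
        "{fields}.\n\nText: {text}\nJSON:"
    ),
}


def build_prompt(task, text, **options):
    """Fill in the template for the given task.

    Raises KeyError if the task is unknown or a required option is missing.
    """
    template = PROMPT_TEMPLATES[task]
    return template.format(text=text, **options)


if __name__ == "__main__":
    review = "The battery lasts two days and the screen is excellent."
    print(build_prompt("classify", review, labels="positive, negative, neutral"))
    print(build_prompt("summarize", review))
    print(build_prompt("extract", review, fields="battery_life, screen_quality"))
```

Keeping the task instruction, the input text, and the output cue on separate lines is one common way to structure such prompts; other layouts may work equally well.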

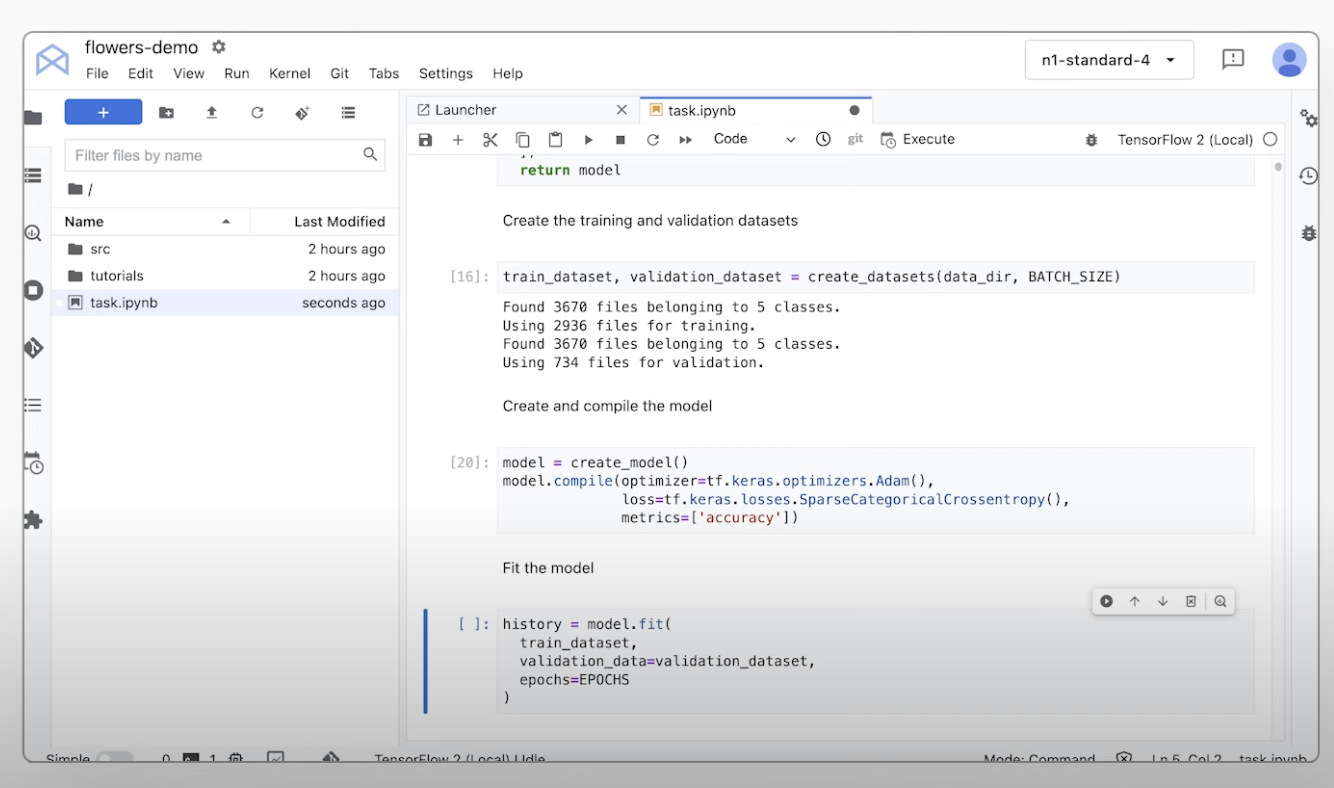

Deploy models for production use

Watch the "Prototype to Production" video series to follow the path from notebook code to a deployed model.

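Pricing

How Vertex AI pricing works: You pay only for the Vertex AI tools, storage, compute, and Cloud resources you use. New customers get $300 in free credits to try Vertex AI and other Google Cloud products.

| Tools and usage | Description | Price |
|---|---|---|
| Generative AI | Imagen models for image generation. Based on image input, character input, or custom training pricing. | Starting at $0.0001 |
| | Text, chat, and code generation. Based on every 1,000 characters of input (prompt) and every 1,000 characters of output (response). | Starting at $0.0001 per 1,000 characters |
| AutoML models | Image data training, deployment, and prediction. Based on training time per node hour, which reflects resource usage, and on whether the model is for classification or object detection. | Starting at $1.375 per node hour |
| | Video data training and prediction. Based on the price per node hour and on whether the model is for classification, object tracking, or action recognition. | Starting at $0.462 per node hour |
| | Tabular data training and prediction. Based on the price per node hour and on whether the model is for classification/regression or forecasting. Contact sales for potential discounts and pricing details. | Contact sales |
| | Text data upload, training, deployment, and prediction. Based on hourly rates for training and prediction, pages of legacy data upload (PDF only), and text records and pages for prediction. | Starting at $0.05 per hour |
| Custom-trained models | Custom model training. Based on the machine type used per hour, the region, and any accelerators used. Get an estimate through sales or the Pricing Calculator. | Contact sales |
| Vertex AI notebooks | Compute and storage resources. Charged at the same rates as Compute Engine and Cloud Storage. | Refer to products |
| | Management fees. In addition to the resource usage above, management fees apply based on the region, instances, notebooks, and managed notebooks used. | Refer to details |
| Vertex AI Pipelines | Execution and additional fees. Based on the execution charge, resources used, and any additional service fees. | Starting at $0.03 per pipeline run |
| Vertex AI Vector Search | Serving and building costs. Based on the size of your data, the queries per second (QPS) you want to run, and the number of nodes you use. | Refer to example |

View pricing details for all Vertex AI features and services.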
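To make the per-unit prices above concrete, the following sketch estimates costs from the "starting at" figures in the table. It assumes the same $0.0001 rate applies to both input and output characters, which the table does not state explicitly, and it ignores discounts, regions, and other fees. Actual rates vary by model and configuration, so treat the results as rough lower bounds. The function names are hypothetical.

```python
from decimal import Decimal

# "Starting at" prices copied from the pricing table; actual rates may be higher.
TEXT_RATE_PER_1K_CHARS = Decimal("0.0001")
IMAGE_TRAINING_RATE_PER_NODE_HOUR = Decimal("1.375")
PIPELINE_RUN_FEE = Decimal("0.03")


def text_generation_cost(input_chars, output_chars, rate=TEXT_RATE_PER_1K_CHARS):
    """Estimate text generation cost in USD.

    Assumption: input and output characters are billed at the same rate.
    """
    total_chars = Decimal(input_chars) + Decimal(output_chars)
    return total_chars / Decimal(1000) * rate


def node_hour_cost(node_hours, rate=IMAGE_TRAINING_RATE_PER_NODE_HOUR):
    """Estimate a node-hour-based cost in USD (for example, AutoML image training)."""
    return Decimal(str(node_hours)) * rate


def pipeline_cost(runs, fee=PIPELINE_RUN_FEE):
    """Estimate the execution fee for a number of pipeline runs, excluding resources."""
    return Decimal(runs) * fee


if __name__ == "__main__":
    print("Text generation:", text_generation_cost(50_000, 20_000), "USD")
    print("Image training :", node_hour_cost(8), "USD")
    print("Pipeline runs  :", pipeline_cost(10), "USD")
```

`Decimal` is used instead of floating point so that very small per-unit prices do not accumulate rounding error.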

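Business case

Unlock the full potential of generative AI

"With Google Cloud's accurate generative AI solution and the practical Vertex AI Platform, we can confidently adopt this cutting-edge technology for our core business and pursue our long-term goal of responding to customer needs in real time."

Abdol Moabery, CEO, GA Telesis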

Analyst reports

Google was named a Leader in The Forrester Wave™: AI Infrastructure Solutions, Q1 2024, receiving the highest scores of any vendor evaluated in both the Current Offering and Strategy categories.

Google was named a Leader in The Forrester Wave™: AI Foundation Models For Language, Q2 2024. Read the report.

Google was named a Leader in The Forrester Wave: AI/ML Platforms, Q3 2024. Learn more.