Model training

Innovate faster with enterprise-ready AI, enhanced by Gemini models

Vertex AI is a fully managed, unified AI development platform for building and using generative AI. Access and use Vertex AI Studio, Agent Builder, and more than 200 foundation models.

New customers get up to $300 in credits to try Vertex AI and other Google Cloud products.

Features

Gemini, Google's most capable multimodal model

Vertex AI provides access to Google's latest Gemini models. Gemini can understand virtually any input, combine different types of information, and generate almost any output. Prompt and test in Vertex AI Studio using text, images, video, or code. With Gemini's advanced reasoning and state-of-the-art generation capabilities, developers can try sample prompts for extracting text from images, converting image text to JSON, and even generating answers about uploaded images to build next-generation AI applications.

More than 200 generative AI models and tools

Choose from the widest variety of models, with first-party (Gemini, Imagen, Chirp, Veo), third-party (Anthropic's Claude model family), and open models (Gemma, Llama 3.2) in Model Garden. Use extensions to let models retrieve real-time information and trigger actions. Customize models to your use case with a variety of tuning options for Google's text, image, or code models.

Generative AI models and fully managed tools make it easy to prototype, customize, integrate, and deploy models into applications.

Open and integrated AI platform

Data scientists can move faster with Vertex AI Platform's tools for training, tuning, and deploying ML models.

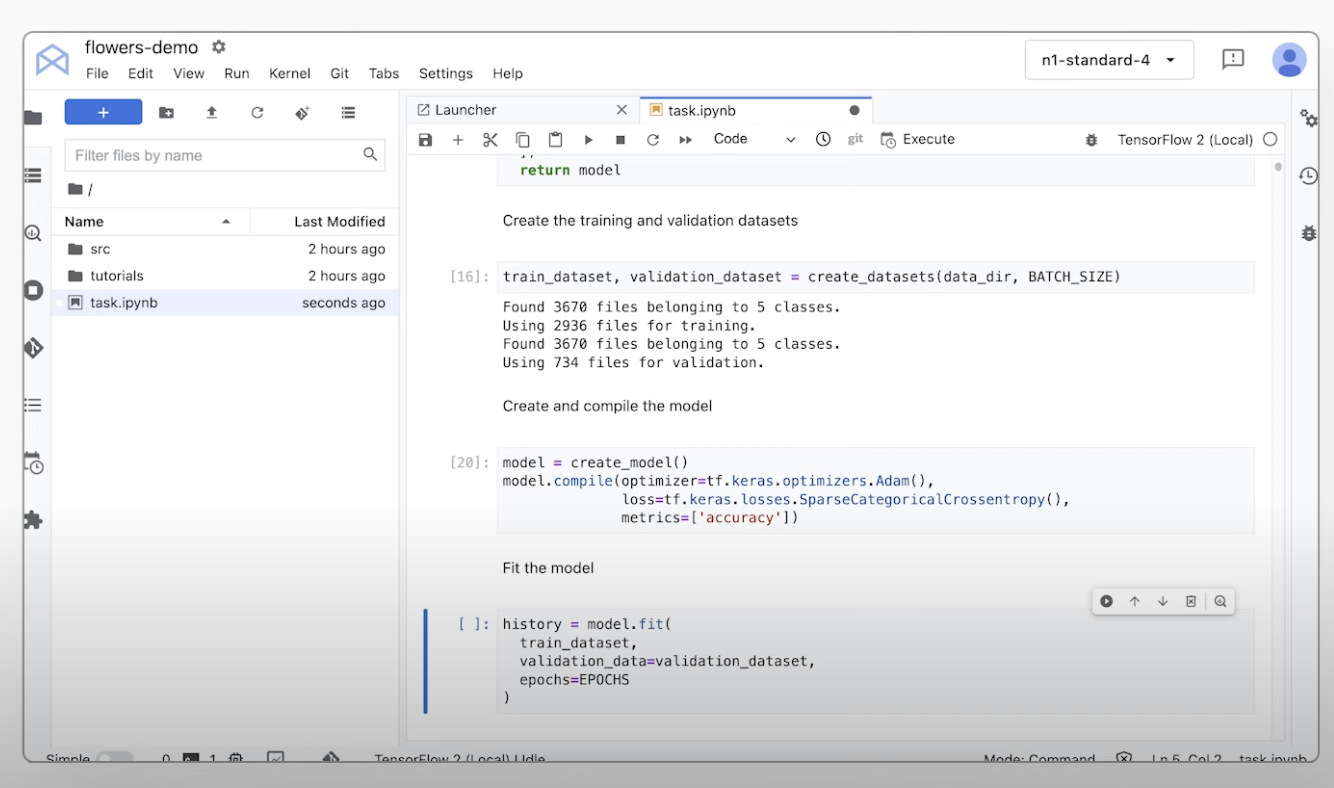

Vertex AI notebooks, including your choice of Colab Enterprise or Workbench, are natively integrated with BigQuery and provide a single surface across all data and AI workloads.

Vertex AI Training and Prediction help you reduce training time and deploy models to production easily with your choice of open-source frameworks and optimized AI infrastructure.

MLOps for predictive and generative AI

Vertex AI Platform provides purpose-built MLOps tools for data scientists and ML engineers to automate, standardize, and manage ML projects.

Modular tools help you collaborate across teams and improve models throughout the development lifecycle: identify the best model for a use case with Vertex AI Evaluation, orchestrate workflows with Vertex AI Pipelines, manage any model with Model Registry, serve, share, and reuse ML features with Feature Store, and monitor models for input skew and drift.
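To make "input skew and drift" concrete, here is a minimal Python sketch of one common drift statistic, the population stability index (PSI). It is not Vertex AI's monitoring implementation; the function name, the bin count, and the 0.2 alert threshold (a widely used rule of thumb) are all assumptions chosen for illustration.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of one numeric feature; 0.0 means identical histograms."""
    lo, hi = min(baseline), max(baseline)
    # Equal-width bins over the baseline range; fall back to 1.0 if the range is empty.
    width = (hi - lo) / bins or 1.0

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range are clamped into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(baseline), proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical data: training-time values versus shifted serving-time values.
training_values = [i / 100 for i in range(100)]
serving_values = [0.5 + i / 200 for i in range(100)]

psi = population_stability_index(training_values, serving_values)
DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb threshold, not a Vertex AI default
print("drift detected" if psi > DRIFT_THRESHOLD else "no significant drift")
```

Comparing training data with serving data in this way corresponds to skew detection; comparing two serving-time windows corresponds to drift detection.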

Agent Builder

Vertex AI Agent Builder enables developers to easily build and deploy enterprise-ready generative AI experiences. It offers the convenience of a no-code agent builder console alongside powerful grounding, orchestration, and customization capabilities. With Vertex AI Agent Builder, developers can quickly create a range of generative AI agents and applications grounded in their organization's data.

How it works

Vertex AI provides several options for model training and deployment:

- Generative AI gives you access to large generative AI models such as Gemini 2.5, so you can evaluate, tune, and deploy them for use in your AI-powered applications.

- Model Garden lets you discover, test, customize, and deploy Vertex AI models and select open-source (OSS) models and assets.

- Custom training gives you complete control over the training process, including using your preferred ML framework, writing your own training code, and choosing hyperparameter tuning options.

Common uses

Build with Gemini

Access Gemini models through the Gemini API in Google Cloud Vertex AI

- Python

- JavaScript

- Java

- Go

- Curl
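As a hedged illustration of the access path described above, the Python sketch below only assembles the REST URL and JSON body for a `generateContent` call to a Gemini model on Vertex AI; it does not send anything. The project ID, region, model name, and helper function name are placeholder assumptions, and authentication (an OAuth access token passed in an `Authorization: Bearer` header) is left out. Check the current API reference before relying on the exact endpoint.

```python
import json
from typing import Any, Dict, Tuple


def build_generate_content_request(
    project_id: str, location: str, model: str, prompt: str
) -> Tuple[str, Dict[str, Any]]:
    """Return the (url, json_body) pair for a single-turn text prompt."""
    url = (
        f"https://{location}-aiplatform.googleapis.com/v1/"
        f"projects/{project_id}/locations/{location}/"
        f"publishers/google/models/{model}:generateContent"
    )
    # One user turn containing one text part.
    body = {"contents": [{"role": "user", "parts": [{"text": prompt}]}]}
    return url, body


# Placeholder values; replace them with your own project, region, and model.
url, body = build_generate_content_request(
    project_id="my-project",
    location="us-central1",
    model="gemini-2.5-flash",
    prompt="Summarize the benefits of managed ML platforms in two sentences.",
)
print(url)
print(json.dumps(body, indent=2))
```

The same URL and body can be posted with any HTTP client, which is roughly what a curl-based sample would do.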

Generative AI in applications

Introduction to generative AI on Vertex AI

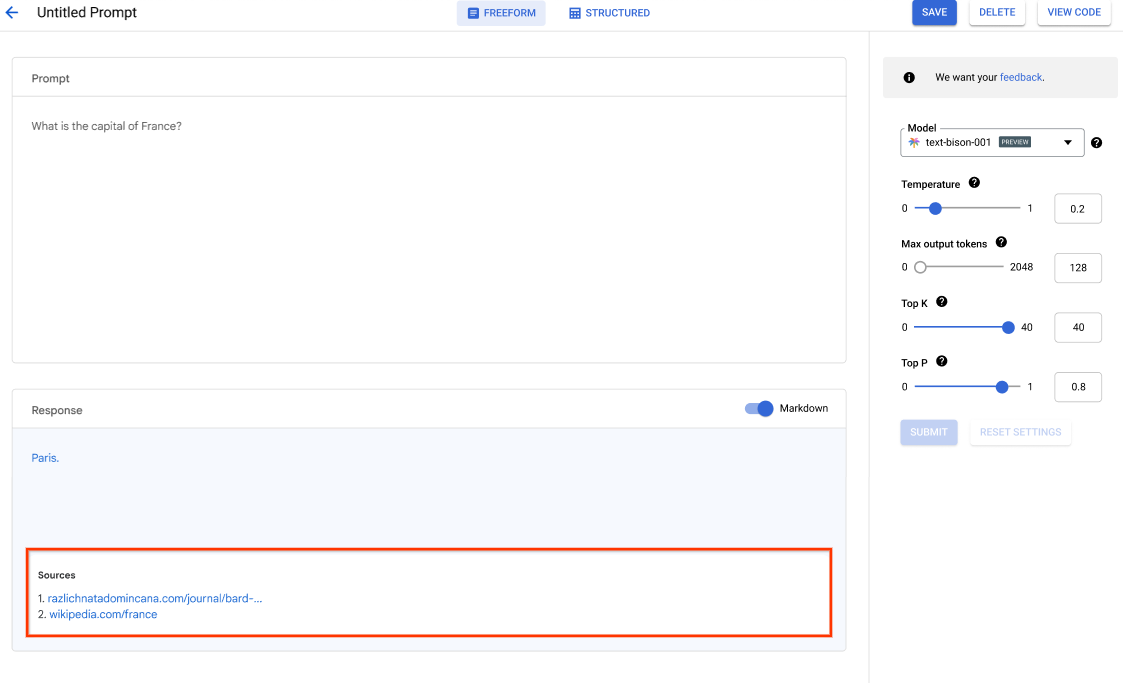

Vertex AI Studio offers a Google Cloud console tool for rapidly prototyping and testing generative AI models. Learn how to use Generative AI Studio to test models with sample prompts, design and save prompts, tune a foundation model, and convert speech to text.

How to tune LLMs in Vertex AI Studio.

Extract, summarize, and classify data

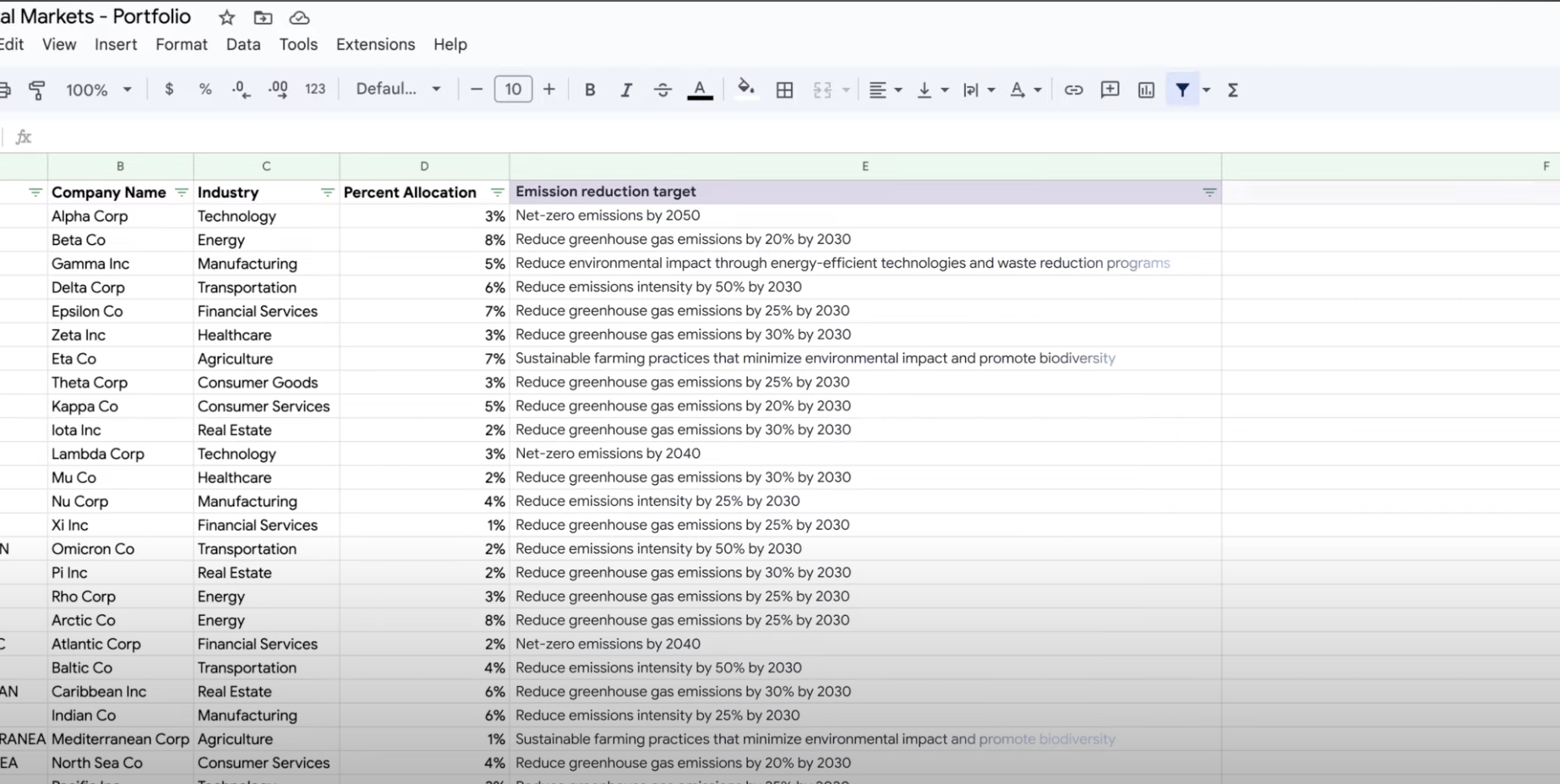

Use generative AI for summarization, classification, and extraction

Learn how to create text prompts that handle any number of tasks with Vertex AI's generative AI support. Some of the most common tasks are classification, summarization, and extraction. Gemini on Vertex AI lets you design prompts with flexibility in structure and format.
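The three task types named above differ mainly in how the prompt is framed. The Python sketch below builds one plain-text prompt per task; the wording of the templates, the function names, and the sample ticket are illustrative assumptions, not prompts taken from the Vertex AI documentation.

```python
from typing import Sequence


def classification_prompt(text: str, labels: Sequence[str]) -> str:
    """Ask for exactly one label from a fixed set."""
    return "\n".join([
        f"Classify the text into exactly one of these labels: {', '.join(labels)}.",
        f"Text: {text}",
        "Label:",
    ])


def summarization_prompt(text: str, max_sentences: int = 2) -> str:
    """Ask for a short summary with an explicit length limit."""
    return "\n".join([
        f"Summarize the text in at most {max_sentences} sentences.",
        f"Text: {text}",
        "Summary:",
    ])


def extraction_prompt(text: str, fields: Sequence[str]) -> str:
    """Ask for named fields returned as a JSON object."""
    return "\n".join([
        f"Extract these fields from the text as a JSON object: {', '.join(fields)}.",
        "Use null for any field that is not present.",
        f"Text: {text}",
        "JSON:",
    ])


# Hypothetical input used only to show the three prompt shapes.
ticket = "My order #1234 arrived two weeks late and the box was damaged."
prompts = {
    "classification": classification_prompt(ticket, ["complaint", "question", "praise"]),
    "summarization": summarization_prompt(ticket, max_sentences=1),
    "extraction": extraction_prompt(ticket, ["order_id", "issue"]),
}
for task, prompt in prompts.items():
    print(f"--- {task} ---\n{prompt}\n")
```

Keeping the instruction, the input text, and the output cue on separate lines is one reasonable structure among several; the point is that the same input can serve all three tasks by changing only the framing.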

Train custom ML models

Custom ML training overview and documentation

Watch a video walkthrough of the steps required to train custom models on Vertex AI.

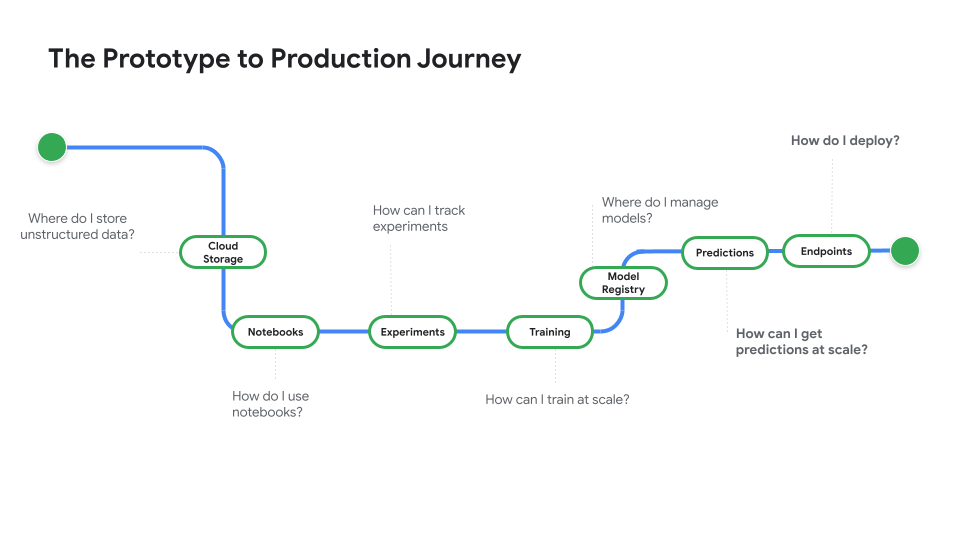

Deploy a model for production use

Deploy for batch or online predictions

Watch Prototype to Production, a video series that takes you from notebook code to a deployed model.

Pricing

How Vertex AI pricing works

Pay for the Vertex AI tools, storage, compute, and Cloud resources you use. New customers get $300 in credits to try Vertex AI and other Google Cloud products.

| Tools and usage | Description | Price |
|---|---|---|
| Generative AI | Imagen model for image generation. Based on image input, character input, or custom training pricing. | Starting at $0.0001 |
| | Text, chat, and code generation. Charged per 1,000 characters of input (prompt) and per 1,000 characters of output (response). | Starting at $0.0001 per 1,000 characters |
| AutoML models | Image data training, deployment, and prediction. Based on training time per node hour (which reflects resource usage) and on whether the task is classification or object detection. | Starting at $1.375 per node hour |
| | Video data training and prediction. Based on the price per node hour and on whether the task is classification, object tracking, or action recognition. | Starting at $0.462 per node hour |
| | Tabular data training and prediction. Based on the price per node hour and on whether the task is classification/regression or forecasting. Contact sales for details on potential discounts and pricing. | Contact sales |
| | Text data upload, training, deployment, and prediction. Based on hourly rates for training and prediction, pages for legacy data upload (PDF only), and text records and pages for prediction. | Starting at $0.05 per hour |
| Custom-trained models | Custom model training. Based on the machine type used per hour, the region, and any accelerators used. Get an estimate from sales or the pricing calculator. | Contact sales |
| Vertex AI notebooks | Compute and storage resources. Based on the same rates as Compute Engine and Cloud Storage. | Refer to products |
| | Management fees. In addition to the resource usage above, management fees apply based on the region, instances, notebooks, and managed notebooks used. | View details |
| Vertex AI Pipelines | Execution and additional fees. Based on execution charges, resources used, and any additional service fees. | Starting at $0.03 per pipeline run |
| Vertex AI Vector Search | Serving and building costs. Based on the size of your data, the number of queries per second (QPS) you want to run, and the number of nodes you use. | View example |

View pricing details for all Vertex AI features and services.
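The "starting at" rates above can be combined into a rough lower-bound estimate. The Python sketch below does that arithmetic for three of the listed items; the function name and the workload figures are hypothetical, and because the rates are minimums, actual charges depend on the model, region, and machine types chosen.

```python
from decimal import Decimal
from typing import Dict

# "Starting at" rates quoted in the pricing table (US dollars).
TEXT_RATE_PER_1K_CHARS = Decimal("0.0001")
AUTOML_IMAGE_RATE_PER_NODE_HOUR = Decimal("1.375")
PIPELINE_RATE_PER_RUN = Decimal("0.03")


def estimate_minimum_cost(
    input_chars: int, output_chars: int, image_node_hours: int, pipeline_runs: int
) -> Dict[str, Decimal]:
    """Return a lower-bound cost breakdown; Decimal avoids float rounding on money."""
    text = Decimal(input_chars + output_chars) / 1000 * TEXT_RATE_PER_1K_CHARS
    training = Decimal(image_node_hours) * AUTOML_IMAGE_RATE_PER_NODE_HOUR
    pipelines = Decimal(pipeline_runs) * PIPELINE_RATE_PER_RUN
    return {
        "text_generation": text,
        "automl_image_training": training,
        "pipeline_runs": pipelines,
        "total": text + training + pipelines,
    }


# Hypothetical monthly workload used only to illustrate the arithmetic.
estimate = estimate_minimum_cost(
    input_chars=2_000_000, output_chars=500_000, image_node_hours=8, pipeline_runs=20
)
for item, cost in estimate.items():
    print(f"{item}: ${cost:.2f}")
```

For this hypothetical workload, 2,500 blocks of 1,000 characters cost at least $0.25, 8 node hours cost at least $11.00, and 20 pipeline runs cost at least $0.60, for a floor of $11.85.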

Start your proof of concept

Business case

Unlock the full potential of generative AI

"The accuracy of Google Cloud's generative AI solution and the practicality of Vertex AI Platform give us the confidence we needed to implement this cutting-edge technology at the heart of our business and achieve our long-term goal of a zero-minute response time."

Abdol Moabery, CEO of GA Telesis

Analyst reports

Google is named a Leader in The Forrester Wave™: AI Infrastructure Solutions, Q1 2024. Google received the highest scores of any vendor evaluated in both the Current Offering and Strategy categories.

Google is named a Leader in The Forrester Wave™: AI Foundation Models for Language, Q2 2024. Read the report.

Google is named a Leader in The Forrester Wave™: AI/ML Platforms, Q3 2024. Learn more.